A deep learning approach for automated scoring of the Rey–Osterrieth complex figure

Figures

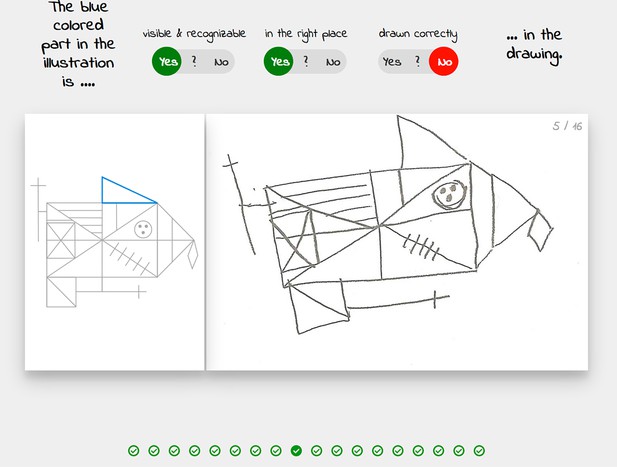

Overview of retrospective dataset.

(A) Rey–Osterrieth complex figure (ROCF) with 18 elements. (B) Demographics of the participants and clinical population of the retrospective dataset. (C) Examples of hand-drawn ROCF images. (D) The pie chart illustrates the proportion of the different clinical conditions in the retrospective dataset. (E) Performance in the copy and (immediate) recall condition across the lifespan in the retrospective dataset. (F) Distribution of the number of images for each total score (online raters).

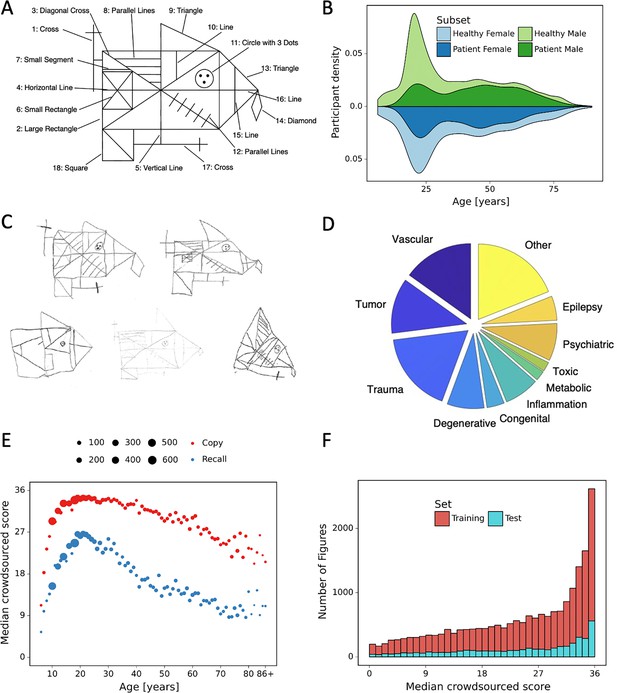

World maps depicting the geographic origin of the data.

(A) Retrospective data. (B) Prospective data.

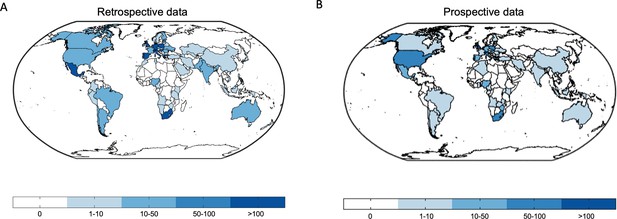

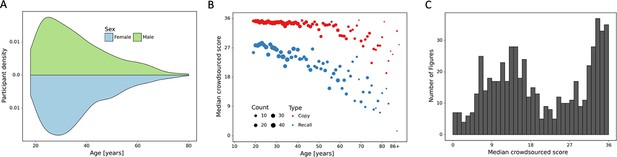

Overview of prospective dataset.

(A) Demographics of the participants of the prospectively collected data. (B) Performance in the copy and (immediate) recall condition across the lifespan in the prospectively collected data. (C) Distribution of number of images for each total score for the prospectively collected data.

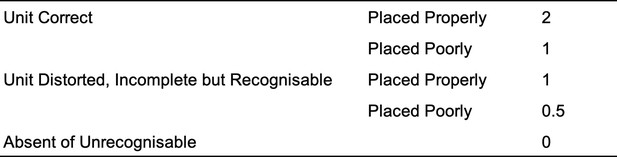

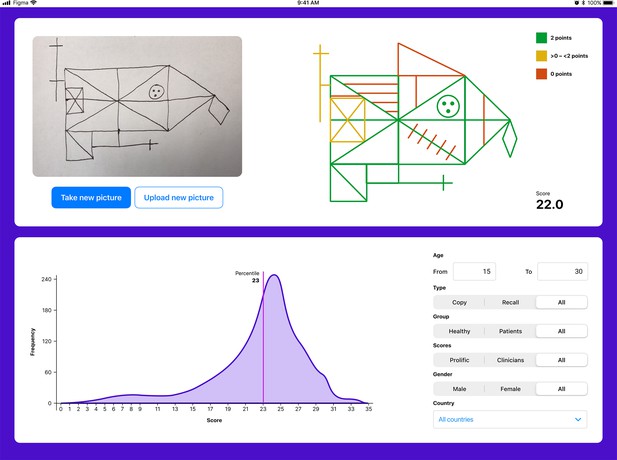

The user interface for the tablet- (and smartphone-) based application.

The application supports explainability by providing a score for each individual item in addition to the total score. The user can also compare the individual's performance with a selectable norm population.

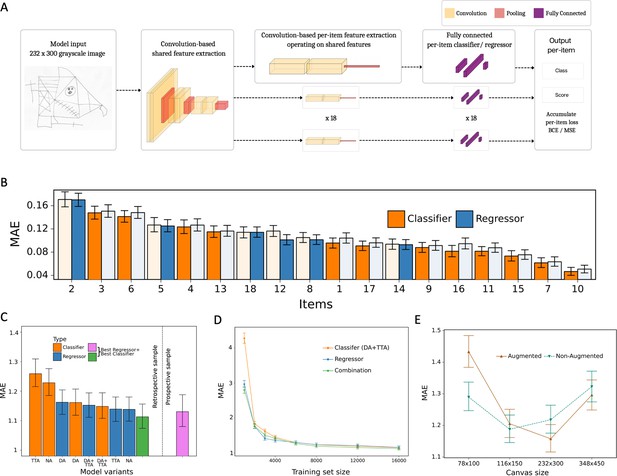

Model architecture and performance evaluation.

(A) Network architecture, consisting of a shared feature extractor and 18 item-specific feature extractors and output blocks. The shared feature extractor consists of three convolutional blocks, whereas each item-specific feature extractor has one convolutional block with global max pooling. Convolutional blocks consist of two convolution and batch normalization pairs, followed by max pooling. Output blocks consist of two fully connected layers. ReLU activation is applied after batch normalization. After pooling, dropout is applied. (B) Item-specific mean absolute error (MAE) for the regression-based network (blue) and multilabel classification network (orange). In the final model, we chose between the regressor and the classifier network for each item based on their performance on the validation dataset; the selected network is indicated by an opaque color in the bar chart. In case of identical performance, the model resulting in the least variance was selected. (C) Model variants were compared, and the performance of the best model on the original, retrospectively collected (green) and the independent, prospectively collected (purple) test set is displayed; Clf: multilabel classification network; Reg: regression-based network; NA: no augmentation; DA: data augmentation; TTA: test-time augmentation. (D) Convergence analysis revealed that after ~8000 images, no substantial improvements could be achieved by including more data. (E) The effect of image size on the model performance, measured in terms of MAE. The error bars in all subplots indicate the 95% confidence interval.
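
The per-item choice between the two networks described in (B) can be illustrated with a minimal Python sketch. The caption does not say which quantity's variance breaks ties, so the sketch assumes it is the variance of the signed validation errors; the function names and the dictionary layout are hypothetical and are not taken from the authors' code.

```python
from statistics import mean, pvariance


def mae(errors):
    """Mean absolute error of a list of signed errors."""
    return mean(abs(e) for e in errors)


def select_model_per_item(val_errors_reg, val_errors_clf):
    """Pick 'reg' or 'clf' for each item from validation errors.

    Both arguments map an item index to a list of signed validation
    errors (prediction minus ground truth). The lower MAE wins; on a
    tie, the model whose errors have the smaller variance wins
    (assumption: the caption does not define the variance used).
    """
    choice = {}
    for item in val_errors_reg:
        reg, clf = val_errors_reg[item], val_errors_clf[item]
        key_reg = (mae(reg), pvariance(reg))
        key_clf = (mae(clf), pvariance(clf))
        choice[item] = "reg" if key_reg <= key_clf else "clf"
    return choice
```

Called with one dictionary of validation errors per network, the function returns a mapping from item index to the selected network, which could then be used to route each item's prediction at test time.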


Figure 2—source data 1

The performance metrics for all model variants.

NA: non-augmented, DA: data augmentation is performed during training, TTA: test-time augmentation. The 95% confidence interval is shown in square brackets.

- https://cdn.elifesciences.org/articles/96017/elife-96017-fig2-data1-v1.xlsx
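
As a rough illustration of test-time augmentation (TTA), the sketch below scores several transformed copies of one image and averages the predictions. The caption does not state which transformations are applied at test time or how the predictions are combined, so the averaging rule and the `predict_with_tta` name are assumptions made for illustration only.

```python
from statistics import mean


def predict_with_tta(model, image, transforms):
    """Average a model's score over several transformed copies of an image.

    `model` is any callable mapping an image to a numeric score and
    `transforms` is a list of callables mapping an image to an image.
    Averaging is an assumed aggregation rule, not the authors' stated one.
    """
    predictions = [model(transform(image)) for transform in transforms]
    return mean(predictions)
```

Including the identity transform in `transforms` keeps the unmodified image among the averaged predictions.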


Figure 2—source data 2

Per-item and total performance estimates for the final model on the retrospective data.

Mean absolute error (MAE), mean squared error (MSE), and R² are estimated directly from the estimated scores.

- https://cdn.elifesciences.org/articles/96017/elife-96017-fig2-data2-v1.xlsx
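
For reference, the three metrics can be computed from paired ground-truth and estimated scores as in the following Python sketch. It assumes that R² denotes the coefficient of determination (one minus the ratio of residual to total sum of squares); the source does not say whether this or the squared correlation is meant, and the function name is hypothetical.

```python
def regression_metrics(y_true, y_pred):
    """Return (MAE, MSE, R^2) for paired true and estimated scores.

    R^2 is computed as 1 - SS_res / SS_tot (assumed definition).
    """
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    ss_res = sum(e * e for e in errors)
    r2 = 1.0 - ss_res / ss_tot
    return mae, mse, r2
```

The same function applies to a single item's scores or to the total score, since only the paired values change.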


Figure 2—source data 3

Per-item and total performance estimates for the final model on the prospective data.

Mean absolute error (MAE), mean squared error (MSE), and R² are estimated directly from the estimated scores.

- https://cdn.elifesciences.org/articles/96017/elife-96017-fig2-data3-v1.xlsx

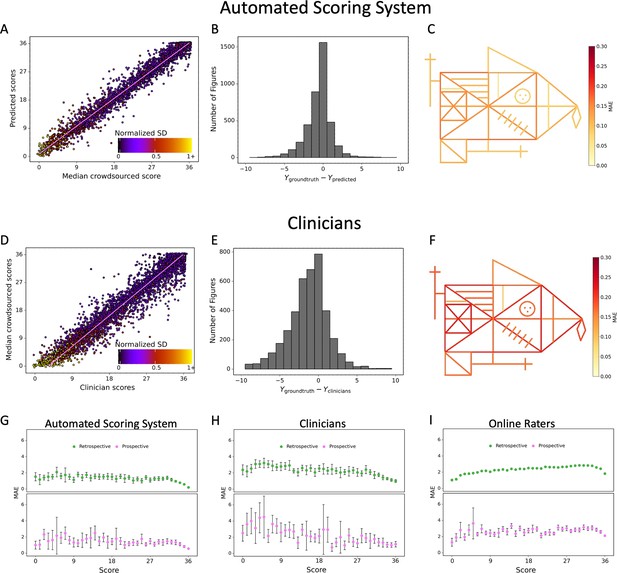

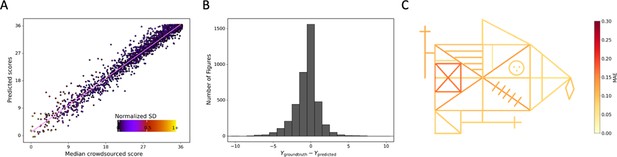

Contrasting the ratings of our model (A) and clinicians (D) against the ground truth revealed a larger deviation from the regression line for the clinicians.

A jitter is applied to better highlight the dot density. The distribution of errors for our model (B) and the clinicians' ratings (E) is displayed. The mean absolute error (MAE) of our model (C) and the clinicians (F) is displayed for each individual item of the figure (see also Figure 2—source data 1). The corresponding plots for the performance on the prospectively collected data are displayed in Figure 3—figure supplement 1. The performance on the retrospective (green) and prospective (purple) sample across the entire range of total scores is presented for the model (G), clinicians (H), and online raters (I). The error bars in all subplots indicate the 95% confidence interval.


Figure 3—source data 1

Performance per total score interval with retrospective data.

Thirty-seven intervals were evaluated, across the whole range of scores. We evaluated mean absolute error (MAE) and mean squared error (MSE) for the total score within each interval.

- https://cdn.elifesciences.org/articles/96017/elife-96017-fig3-data1-v1.xlsx
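
A per-interval evaluation of this kind might look like the Python sketch below. How the 37 intervals were defined is not stated here, so the sketch assumes equal-width intervals over a total score range of 0 to 36 (18 elements with up to 2 points each under the conventional scoring) and bins drawings by their ground-truth total. The function name and defaults are hypothetical.

```python
def mae_per_interval(true_totals, pred_totals, n_intervals=37, max_score=36.0):
    """MAE of the total score within equal-width ground-truth intervals.

    Returns one value per interval, or None for intervals without data.
    Interval boundaries are an assumption, not the authors' definition.
    """
    width = max_score / n_intervals
    abs_errors = [[] for _ in range(n_intervals)]
    for t, p in zip(true_totals, pred_totals):
        # The maximum score would index one past the end, so clamp it
        # into the last interval.
        idx = min(int(t / width), n_intervals - 1)
        abs_errors[idx].append(abs(p - t))
    return [sum(b) / len(b) if b else None for b in abs_errors]
```

Replacing `abs(p - t)` with the squared difference would give the per-interval MSE in the same way.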


Figure 3—source data 2

Performance per total score interval with prospective data.

Thirty-seven intervals were evaluated, across the whole range of scores. We evaluated mean absolute error (MAE) and mean squared error (MSE) for the total score within each interval.

- https://cdn.elifesciences.org/articles/96017/elife-96017-fig3-data2-v1.xlsx

Detailed performance of the model on the prospective data.

(A) Ratings of our model contrasted against the ground truth. A jitter is applied to better highlight the dot density. (B) The distribution of errors for our model on the prospective data. (C) The mean absolute error (MAE) of our model for each individual item of the figure (see also Figure 3—source data 2).

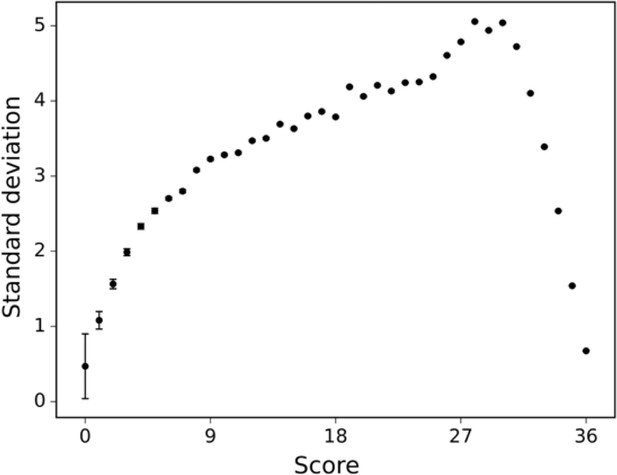

The standard deviation of the human raters' scores is displayed across differently scored drawings.

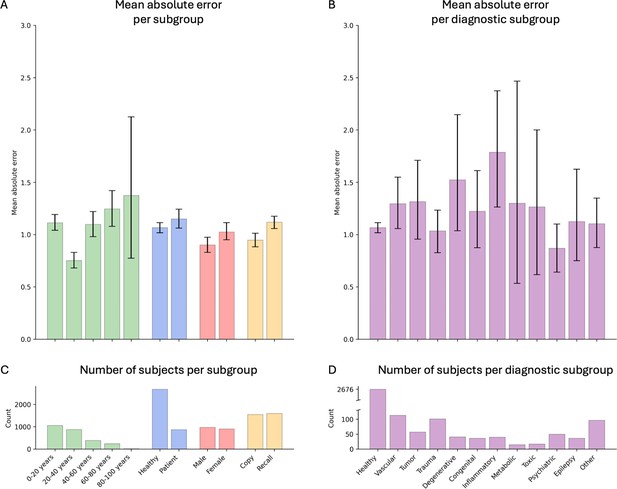

Model performance across ROCF conditions, demographics, and clinical subgroups in the retrospective dataset.

(A) Displayed are the mean absolute error and bootstrapped 95% confidence intervals of the model performance across different Rey–Osterrieth complex figure (ROCF) conditions (copy and recall), demographics (age and gender), and clinical statuses (healthy individuals and patients) for the retrospective data. (B) Model performance across different diagnostic conditions. (C, D) The number of subjects in each subgroup is depicted. The same model performance analysis for the prospective data is reported in Figure 4—figure supplement 1.
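
A bootstrapped 95% confidence interval for a subgroup's MAE can be obtained roughly as sketched below in Python. The sketch assumes the percentile bootstrap with resampling of per-image absolute errors; the resampling unit, number of resamples, and interval method used by the authors are not stated in the caption, and the function name is hypothetical.

```python
import random


def bootstrap_mae_ci(abs_errors, n_boot=2000, alpha=0.05, seed=0):
    """Return (MAE, lower, upper) using a percentile bootstrap.

    `abs_errors` holds one absolute error per image in the subgroup.
    All defaults are illustrative assumptions.
    """
    rng = random.Random(seed)
    n = len(abs_errors)
    # Resample with replacement and record the MAE of each resample.
    boot_means = sorted(
        sum(rng.choices(abs_errors, k=n)) / n for _ in range(n_boot)
    )
    lower = boot_means[int((alpha / 2) * n_boot)]
    upper = boot_means[int((1 - alpha / 2) * n_boot) - 1]
    return sum(abs_errors) / n, lower, upper
```

Running the function once per subgroup (for example, per condition, age band, or diagnosis) yields the point estimates and error bars for a plot of this kind.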

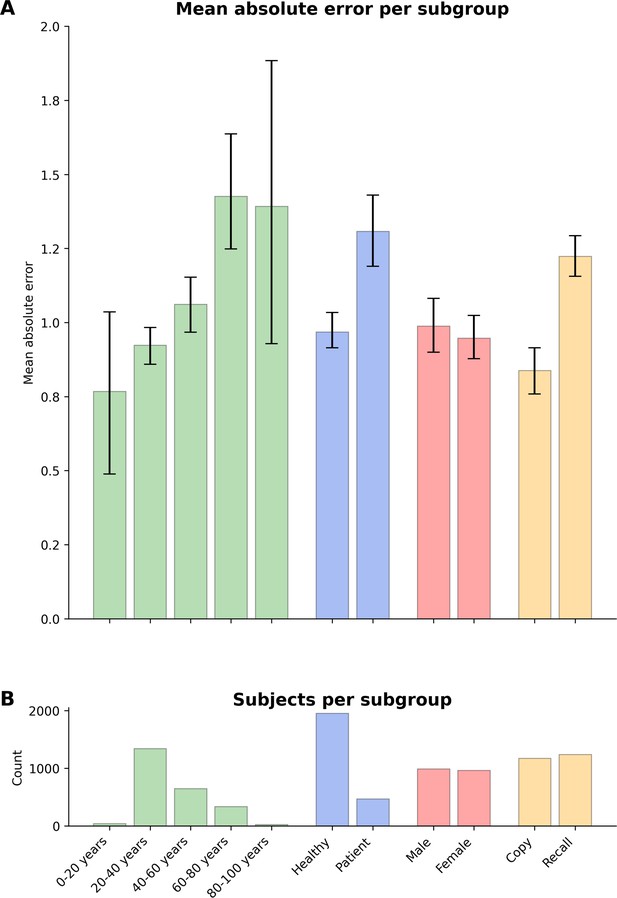

Model performance across ROCF conditions, demographics, and clinical subgroups in prospective dataset.

(A) Displayed are the mean absolute error and bootstrapped 95% confidence intervals of the model performance across different Rey–Osterrieth complex figure (ROCF) conditions (copy and recall), demographics (age and gender), and clinical statuses (healthy individuals and patients) for the prospective data. (B) The number of subjects in each subgroup is depicted. Note that we did not have sufficient information on the specific patient diagnoses in the prospective data to decompose the model performance for specific clinical conditions.

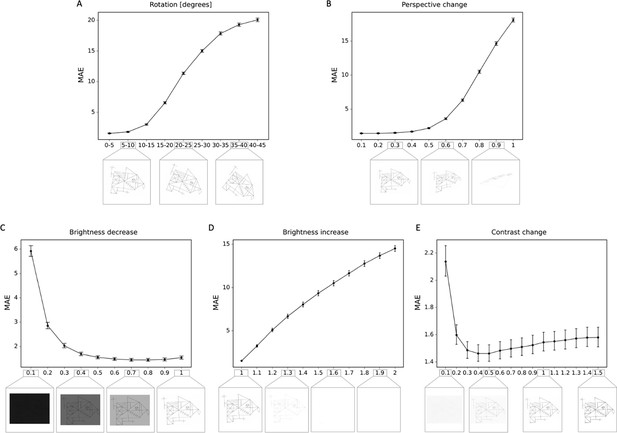

Robustness to geometric, brightness, and contrast variations.

The mean absolute error (MAE) is depicted for different degrees of transformations, including (A) rotations; (B) perspective change; (C) brightness decrease; (D) brightness increase; (E) contrast change. In addition, examples of the transformed Rey–Osterrieth complex figure (ROCF) drawings are provided. The error bars in all subplots indicate the 95% confidence interval.
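
A robustness sweep of this kind can be sketched in Python as follows, here for brightness and contrast only. The exact transformation definitions and parameter ranges are not given in the caption, so the sketch assumes grayscale images stored as nested lists with values in [0, 1], an additive brightness shift, and contrast scaling around the image mean. All function names are hypothetical.

```python
def clip01(x):
    """Clamp a pixel value to the range [0, 1]."""
    return min(1.0, max(0.0, x))


def adjust_brightness(image, delta):
    """Add `delta` to every pixel (negative values darken the image)."""
    return [[clip01(p + delta) for p in row] for row in image]


def adjust_contrast(image, factor):
    """Scale pixel deviations from the image mean by `factor`."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    return [[clip01(mean + factor * (p - mean)) for p in row] for row in image]


def mae_under_transform(score_fn, images, true_scores, transform, levels):
    """MAE of `score_fn` at each transformation level.

    `transform(image, level)` returns the transformed image and
    `score_fn(image)` returns the predicted total score.
    """
    results = {}
    for level in levels:
        errors = [
            abs(score_fn(transform(img, level)) - t)
            for img, t in zip(images, true_scores)
        ]
        results[level] = sum(errors) / len(errors)
    return results
```

Rotation and perspective changes would follow the same pattern with an image library supplying the geometric transform.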

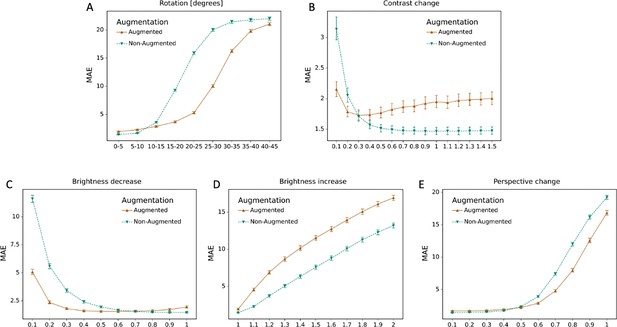

Effect of data augmentation.

The mean absolute error (MAE) for the model with data augmentation and without data augmentation is depicted for different degrees of transformations, including (A) rotations; (B) perspective change; (C) brightness decrease; (D) brightness increase; (E) contrast change.