Uncertainty-based inference of a common cause for body ownership

Peer review process

This article was accepted for publication as part of eLife's original publishing model.

History

- Version of Record updated

- Version of Record published

- Accepted Manuscript published

- Accepted

- Received

- Preprint posted

Decision letter

- Virginie van Wassenhove, Reviewing Editor; CEA, DRF/I2BM, NeuroSpin; INSERM, U992, Cognitive Neuroimaging Unit, France

- Tamar R Makin, Senior Editor; University of Cambridge, United Kingdom

- Zoltan Dienes, Reviewer; University of Sussex, United Kingdom

- Liping Wang, Reviewer; Chinese Academy of Sciences, China

- Mate Aller, Reviewer; MRC Cognition and Brain Sciences Unit, United Kingdom

In the interests of transparency, eLife publishes the most substantive revision requests and the accompanying author responses.

Decision letter after peer review:

Thank you for submitting your article "Uncertainty-based inference of a common cause for body ownership" for consideration by eLife. Your article has been reviewed by 3 peer reviewers, and the evaluation has been overseen by a Reviewing Editor and Tamar Makin as the Senior Editor. The following individuals involved in the review of your submission have agreed to reveal their identity: Zoltan Dienes (Reviewer #1); Liping Wang (Reviewer #2); Mate Aller (Reviewer #3).

The reviewers have discussed their reviews with one another, and the Reviewing Editor has drafted this to help you prepare a revised submission.

Essential revisions:

(1) A first reviewer highlights a major interpretation problem based on the 12 s asynchrony, contrasting a domain-general reasoning module with a body ownership one. The reviewer makes suggestions on how to accommodate and clearly refine the claims (e.g. explaining that a main observation is about a shift in criterion).

(2) Consistent with the above issue, a second reviewer highlights several empirical limitations and inconsistencies in the authors' analytical approach (points 2 to 5) with a lack of clear comparisons between the two tasks. These points need to be addressed as listed.

(3) The issue of inter-individual differences is raised by two reviewers, and several suggestions are made to provide a more transparent report of the BCI model results and their consistency with the authors' interpretations.

Reviewer #1 (Recommendations for the authors):

The authors explore the basis of the "rubber hand illusion" in which people come to feel a rubber hand as their own when it is stroked or tapped synchronously with their real hand. They show that when participants are exposed to lags in the tapping of the rubber and real hand, under conditions of different visual noise, people adjust their sense of ownership according to the manipulated variables. The degree of adjustment can be fitted by a Bayesian model, i.e. one in which evidence for ownership is construed as strength of evidence for the stroking being synchronous, and this is used to update the prior probability of there being a common cause for the sight and feeling of stroking.
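As a hedged sketch of the kind of model summarized above, the posterior probability of a common cause could be written as follows. The Gaussian forms and the symbols $x$ (noisy measurement of the visuotactile asynchrony), $\sigma$ (sensory noise) and $\sigma_s$ (spread of asynchronies when the causes are separate) are assumptions made for illustration; only $p_{\text{same}}$, the prior probability of a common cause, is named in this letter.

```latex
p(C=1 \mid x) =
  \frac{p_{\text{same}} \, \mathcal{N}(x;\, 0,\, \sigma^2)}
       {p_{\text{same}} \, \mathcal{N}(x;\, 0,\, \sigma^2)
        + (1 - p_{\text{same}}) \, \mathcal{N}(x;\, 0,\, \sigma^2 + \sigma_s^2)}
```

Under this assumed form, a small measured asynchrony raises the posterior above the prior, and a large one lowers it.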

The paper shows a lot of care in writing and study methodology. Setting up the equipment, the robotic device and the VR, required a lot of effort; the modeling and analysis have been conscientiously performed. The weakness for me is in the background framing of what is going on: Naturally, as is the authors' prerogative, they have taken a particular stance. However, they also say that these data may help distinguish different theories and approaches from their own preferred one. But no argument is made for how the data distinguish their interpretative approach from different ones. This is left as a promissory note. In fact, I think there is a rather different way of viewing the data.

The authors frame their work in terms of a mechanism for establishing bodily ownership. On the other hand, people may infer how feelings of ownership should vary given what is manipulated in the experiment. That is, if asynchrony is manipulated we know people view this as something that is meant to change their feeling of ownership (e.g. Lush 2020 Collabra). That is, the results may be based on general inference rather than any special ownership module. Consistently, reasoning in medical diagnosis and legal decisions can be fit by Bayesian models as well (e.g. Kitzis et al., Journal Math. Psych.; Shengelia and Lagnado, 2021, Frontiers in Psych). That is, the study could be brought under the research programme of showing people often reason in approximately Bayesian ways in all sorts of inference tasks, especially when concrete information is given trial by trial (e.g. Oaksford and Chater).

The results support the bare conclusions I stated, but what those conclusions mean is still up for grabs. I always welcome attempts to model theoretical assumptions, and the approach is more rigorous than many other experiments in that field. Hopefully it will set an example.

Modeling how inferences are formed when presented with different sources of information is an important task, whether or not those inferences reflect the general ability of people to reason, or else the specific processes of a particular model. For example, Fodor claimed that general reasoning was beyond the scope of science, but the fact that across many different inference tasks similar principles arise – roughly though not exactly Bayesian – indicates Fodor was wrong!

Specific comments:

i) The model can be described as Bayesian, but how different is it from a signal detection theory (SDT) model, with adjustable vs fixed criteria, and a criterion offset for the RHI and asynchrony judgment task? In other words, rather than the generative model being an explicit model for the system, different levels of asynchrony simply produce certain levels of evidence for a time difference, as in an SDT model. Then set up criteria to judge whether there was or was not a time difference. Adjust criteria according to prior probabilities, as is often done in SDT. That's it. Is this just a verbal rephrasing?

One thing on the framing of the model: Surely a constant offset over several taps is just as good evidence for a common cause no matter whether the offset is 0 or something else? But if the prior probability is specifically that the common cause is that the same hand is involved (which requires an offset close to 0), surely that prior probability is essentially zero? So how should the assumption of C1 be properly framed?

ii) Lines 896-897: "…temporally correlated visuotactile signals are a key driving factor behind the emergence of the rubber hand illusion Chancel and Ehrsson,202, …"

Cite findings that need some twisting to fit in with this view, e.g. the finding that imagining a hand is one's own creates SCRs to its being cut; and, perhaps easier to deal with but I think rather telling, no visual input of a hand is needed (Guterstam et al., 2013) and laser lights instead of brushes work just about as well (Durgin et al., 2013), as does stroking the air (Guterstam et al., 2016), making magnetic sensations akin to the magnetic hands suggestion in hypnosis. It seems the simplest explanation is that participants respond to what they perceive as what is meant to be relevant in the study manipulations. Suitable responses are constructed, based on genuine experience or otherwise, in accordance with the needs of the context. The very way the question is framed determines the answer according to principles of general inference (e.g. Lush and Seth, 2022, Nat Coms; Corneille and Lush, https://psyarxiv.com/jqyvx/).

iii) Provide means and SEs for each condition in the synchrony judgment task.

iv) Discuss the alternative views of how the study could be interpreted as I have indicated above.
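Comment i) above asks how a Bayesian observer differs from an SDT observer with a criterion. A minimal sketch, assuming a Gaussian causal inference model (measurement `x` drawn from a normal distribution around the true asynchrony with sensory noise `sigma`, separate causes spread with `sigma_s`, prior `p_same`), shows that the rule "report yes when the posterior of a common cause exceeds 0.5" is a criterion on |x| that moves with `sigma`. All function names and numerical values are hypothetical and chosen only for illustration; this is not the authors' code.

```python
import math

def bci_criterion(sigma, sigma_s, p_same):
    """Largest |x| for which p(C=1 | x) > 0.5 under the assumed Gaussian model.

    Returns 0.0 if no measurement would favour a common cause.
    """
    var = sigma ** 2
    var_sep = sigma ** 2 + sigma_s ** 2
    log_terms = 2 * math.log(p_same / (1 - p_same)) + math.log(var_sep / var)
    k_squared = log_terms / (1 / var - 1 / var_sep)
    return math.sqrt(k_squared) if k_squared > 0 else 0.0

def p_yes(s, sigma, criterion):
    """Probability that x ~ N(s, sigma^2) falls inside [-criterion, +criterion]."""
    def phi(z):
        return 0.5 * (1 + math.erf(z / math.sqrt(2)))
    return phi((criterion - s) / sigma) - phi((-criterion - s) / sigma)

# Hypothetical parameters (ms); not estimates from the study.
SIGMA_S, P_SAME = 300.0, 0.8
FIXED_CRITERION = bci_criterion(100.0, SIGMA_S, P_SAME)  # frozen at the lowest noise

for sigma in (100.0, 200.0, 300.0):
    k = bci_criterion(sigma, SIGMA_S, P_SAME)
    print(f"sigma={sigma:.0f}  Bayesian criterion={k:.1f}  "
          f"p_yes(150 ms): Bayesian={p_yes(150.0, sigma, k):.3f}, "
          f"fixed={p_yes(150.0, sigma, FIXED_CRITERION):.3f}")
```

In this sketch the two observers differ only in whether the criterion is recomputed for each noise level, which is one way of stating the question the reviewer raises.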

Reviewer #2 (Recommendations for the authors):

Using rubber hand illusion in humans, the study investigated the computational processes in self-body representation. The authors found that the subjects' behavior can be well captured by the Bayesian causal inference model, which is widely used and well described in multisensory integration. The key point in this study is that the body ownership perception was well predicted by the posterior probability of the visual and tactile signals coming from a common source. Although this notion was investigated before in humans and monkeys, the results are still novel:

1. This study directly measures illusions with the alternative forced-choice report instead of objective behavioral measurements (e.g., proprioceptive drift).

2. The visual sensory uncertainty was changed trial by trial to examine the contribution of sensory uncertainty in multisensory body perception. Combined with the model comparison results, these results support the superiority of a Bayesian model in predicting the emergence of the rubber hand illusion relative to the non-Bayesian model.

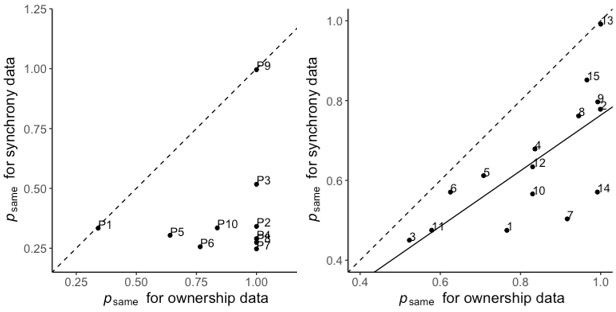

3. The authors investigated the asynchrony task in the same experiment to compare the computational processing in the RHI task and visual-tactile synchrony detection task. They found that the computational mechanisms are shared between these tasks, while the prior of common source is different.

In general, the conclusions are well supported, and the findings advanced our understanding of the computational principles of body ownership.

Main comments:

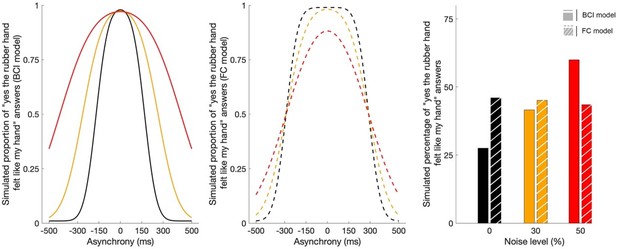

1. One of the critical points in this study is the comparison between the BCI model which takes the sensory uncertainty into account and the non-Bayesian (fixed-criterion) model. Therefore, I suggest the authors show the prediction results of both the BCI and non-Bayesian model in Figure 2 and compare the key hypothesis and the prediction results.

2. This study has two tasks: the ownership task and the asynchrony task. As the temporal disparity is the key factor, the criteria for determining the time disparities are important. The authors claim that the time disparities used in the two tasks were determined based on the pilot experiments to maintain an equivalent difficulty level between the two tasks. If I understand correctly, the subjects were asked to report whether the rubber hand felt like their own hand or not in the ownership task. Thus, there are no objective criteria of right or wrong. The authors should clarify how they define the difficulty of the two tasks and how they make sure the difficulty is equal. I think this is important because the time disparities in these two tasks were different, and the comparison of Psame in these tasks may be affected by the time disparities. Furthermore, the authors claimed that the ownership and visual-tactile synchrony perception had distinct multisensory processing according to the different Psame in the two tasks. Thus the authors should show further evidence to rule out that the difference in Psame results from the chosen time disparities.

3. Related to the question above, the authors found that the same BCI model can reasonably predict the behavioral results in these two tasks with the same parameters (or only different Psame). They claimed that these two tasks shared similar results in multisensory causal inference. However, they then argued that multisensory perception was distinct in these two tasks because the Psame values were different. If these tasks shared the same BCI computational approaches and used the posterior probability of common source to determine ownership and synchrony judgment, what is the difference between them?

4. The extension analysis showed that the Psame values from the two tasks were correlated across subjects. This is very interesting. However, since the uncertainty of timing perception (the sensory uncertainty of perceived time of the tactile stimuli on real hand and fake hand) was taken into account to estimate the posterior probability of common source in this study, the variance across subjects in the ownership and synchrony tasks can only be interpreted by the Psame. In fact, the timing perception was considered as a Gaussian distribution in a modulated version of the BCI model for the agency (temporal binding) (R. Legaspi, 2019) and ownership (Samad, 2015). It would be more persuasive if the authors could exclude the possibility that individual differences in timing uncertainty explain the variance across subjects.

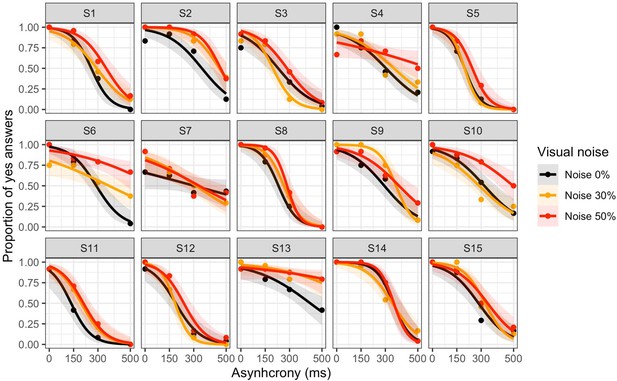

5. Please include the results of single-subject behavior in the asynchrony task. It is helpful to compare the behavioral pattern between these two tasks. The authors compared the Psame between ownership and asynchrony tasks. Still, they did not directly compare the behavioral results (e.g., the reported proportion of elicited rubber hand illusions and the reported proportion of perceived synchrony).

6. The analysis of model fitting seems to lack some details. If I understood it correctly, the authors repeated the fitting procedure 100 times (line 502), then averaged all repeats as the final results of each parameter? It is reported that "the same set of estimated parameters at least 31 times for all participants and models". What does this sentence mean? Can the authors show more details about the repeats of the model result?

7. In Figure 3A, was there an interaction effect between the time disparity and visual noise level?

8. Line 624, the model comparison results suggested that the subjects have the same standard deviation as the true stimulus distribution. I encourage the authors to directly compare the BCI* model predicted uncertainty (σ s) to the true stimulus uncertainty, which will make this conclusion more convincing.

9. How did the authors fit the BCI model to the combined dataset from both ownership and synchrony tasks? What is the cost function when fitting the combined dataset?

10. As shown in the supplementary figures, the variations of ownership between the three visual noise levels varied widely among subjects, as did the predicted visual sensory sigmas (Appendix 1 – Table 2). Was ownership in the three visual noise levels correlated with the individual differences in visual uncertainty?

11. The statements of the supplementary figures are confusing. For example, it is hard to determine which one is the "Supplementary File 1. A" in line 249?

12. Line 1040, it is hard to follow how to arrive at this equation from the previous formulas. Please give some more details and explanations.

Reviewer #3 (Recommendations for the authors):

This study investigated the computational mechanisms underlying the rubber hand illusion. Combining a detection-like task with the rubber hand illusion paradigm and Bayesian modelling, the authors show that human behaviour regarding body ownership can be best explained by a model based on Bayesian causal inference which takes into account the trial-by-trial fluctuations in sensory evidence and adjusts its predictions accordingly. This is in contrast with previous models which use a fixed criterion and do not take trial-by-trial fluctuations in sensory evidence into account.

The main goal of the study was to test whether body ownership is governed by a probabilistic process based on Bayesian causal inference (BCI) of a common cause. The secondary aim was to compare the body ownership task with a more traditional multisensory synchrony judgement task within the same probabilistic framework.

The objective and main question of the study is timely and interesting. The authors developed a new version of the rubber hand illusion task in which participants reported their perceived body ownership over the rubber hand on each trial. With the manipulation of visual uncertainty through augmented reality glasses they were able to assess whether trial-by-trial fluctuation in sensory uncertainty affects body ownership – a key prediction of the BCI model.

This behavioural paradigm opens up the intriguing possibility of testing the BCI model for body ownership at a neural level with fMRI or EEG (e.g., as in Rohe and Noppeney (2015, 2016) and Aller and Noppeney (2019)).

I was impressed by the methodological rigour, modelling and statistical methods of the paper. I was especially glad to see the modelling code validated by parameter recovery. This greatly increases one's confidence that good coding practices were followed. It would be even more reassuring if the analysis code were made publicly available.

The data and analyses presented in the paper support the key claims. The results represent a relevant contribution to our understanding of the computational mechanisms of body ownership. The results are adequately discussed in light of a broader body of literature. Figures are well designed and informative.

Main points:

1. Line 298: It is not clear if all 5 locations were stimulated in each 12 s stimulation phase or they were changed only between stimulation phases. Please clarify.

2. Line 331: "The 7 levels of asynchrony appeared with equal frequencies in pseudorandom order". I assume this was also true of the noise conditions, i.e., they also appeared in pseudorandom order with equal frequencies and were not, e.g., blocked. Could you please make this explicit here?

3. Line 348: Was the pilot study based on an independent sample of participants from the main experiment? Please also include standard demographics data (mean+/-SD age, sex) from the pilot study.

4. Line 406: From the standpoint of understanding the inference process at a high level, the crucial step of how prior probabilities are combined with sensory evidence to compute posterior probabilities is missing from the equations. More precisely it is not exactly missing, but it is buried inside the definition of K (line 416) if I understand correctly. I think it would make it easier for non-experts to follow the thought process if Equation 5 from Supplementary material would be included here.

5. Line 511: There are different formulations of BIC, could you please state explicitly the formula you used to compute it? Please also state the formula for AIC.

6. Line 512: "Badness of fit": Interesting choice of word, I completely understand why it is chosen here, however perhaps I would use "goodness of fit" instead to avoid confusion and for the sake of consistency with the rest of the paper.

7. Figure 4: I think the title could be improved here, e.g., "Model predictions of behavioural results for body ownership" or something similar. Details in the current title (mean +/- sem etc.) could go in the figure legend text.

I am a bit confused about why the shaded overlays from the model fits are shaped as oblique polygons? This depiction hints that there is a continuous increase in the proportion of "yes" answers in the neighbourhood of each noise level. Aren't these model predictions based on a single noise level value?

The mean model predictions are not indicated in the figure only the +/- SEM ranges marked by the shaded areas.
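Regarding main point 5 above, the most common textbook formulations of AIC and BIC can be sketched as below. This is only one convention (some authors divide by 2 or flip the sign), and nothing here indicates which formulation the authors used; the function names and the example numbers are hypothetical.

```python
import math

def aic(log_likelihood, n_params):
    """Akaike information criterion: 2k - 2 ln(L). Lower is better."""
    return 2 * n_params - 2 * log_likelihood

def bic(log_likelihood, n_params, n_obs):
    """Bayesian information criterion: k ln(n) - 2 ln(L). Lower is better."""
    return n_params * math.log(n_obs) - 2 * log_likelihood

# Hypothetical example: two models fitted to the same 500 trials.
print(aic(-250.0, 4), bic(-250.0, 4, 500))
print(aic(-248.5, 6), bic(-248.5, 6, 500))
```

Because BIC scales its penalty with the number of observations, stating both the formula and the value used for `n_obs` (trials per participant, or pooled) would remove the ambiguity the reviewer points to.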

Line 261: Given that participants' right hand was used consistently, I am wondering if it makes any difference if the dominant or non-dominant hand is used to elicit the rubber hand illusion? If this is a potential source of variability it would be useful to include information on the handedness of the participants in the methods section.

Line 314: Please use either stimulation "phase" or "period" consistently across the manuscript.

Line 422: The study by Körding et al., (2007) is about audiovisual spatial localization, not synchrony judgment as is referenced currently. Please correct.

Line 529: Perhaps the better paper to cite here would be Rigoux et al., (2014) as this is an improvement over Stephan et al., (2009) and also this is the paper that introduces the protected exceedance probability which is used here.

Figure 3 and potentially elsewhere: Please overlay individual data points on bar graphs. There is plenty of space to include these on bar graphs and would provide valuable additional information on the distribution of data.

Figure 5A: Please consider increasing the size of markers and their labels for better visibility (i.e., similar size as in panels B and C).

Lines 608, 611, 639-640, and potentially elsewhere: Please indicate what values are stated in the form XX +/- YY. I assume they represent mean +/- SEM, but this must be indicated consistently throughout the manuscript.

[Editors’ note: further revisions were suggested prior to acceptance, as described below.]

Thank you for resubmitting your work entitled "Uncertainty-based inference of a common cause for body ownership" for further consideration by eLife. Your revised article has been evaluated by Tamar Makin (Senior Editor) and a Reviewing Editor.

The manuscript has been improved but there are some remaining issues that need to be addressed, as outlined below:

In particular, there was a consensus that the potential contribution of demand characteristics should be discussed, rather than dismissed. We also ask that you discuss the potential utility of a more simple model (signal detection theory). Please see more details below.

1) The authors added a paragraph detailing why they minimised (in their opinion) the contributions of demand characteristics. They argue that the theory that subjects respond to demand characteristics "cannot explain the specifically shaped psychometric curves with respect to the subtle stepwise asynchrony manipulations and the widening of this curve by the noise manipulation as predicted by the causal inference model." We were not fully convinced by this argument and wonder why you would want to categorically rule this possibility out.

Reviewer 1 wrote back: This claim is false. The authors should spell out the alternative theory in terms of subjects using general purpose Bayesian inference to infer what is required. Subjects do not need to know how general purpose inference works; they just need to use it. Does the fact that information was delivered trial by trial over many trials rule out general purpose Bayesian inference? On the contrary, it supports it. Bayesian updating is particularly suited to trial by trial situations (e.g. see general learning and reasoning models by Christoph Mathys), as illustrated by the authors' own model. The authors' model could be a general purpose model of Bayesian inference, shown by its applicability to the asynchrony judgment task. The fact that the number of asynchrony levels may not have been noticed by subjects is likewise irrelevant; subjects do not need to know this. Indeed, the authors point out that the asynchrony judgment task was easier than the RHI task, so that the authors needed to use a smaller asynchrony range for this task than the RHI one. That is, subjects' ability to discriminate asynchronies is shown by the authors' data to be more than enough to allow task-appropriate RHI judgments. (Even if successive differences between asynchrony levels were below a jnd, one would of course still get a well formed psychophysical function over an appropriate span of asynchronies; so subjects could still use asynchrony in lawful ways as part of general reasoning, i.e. apart from any specific self module.)

(In their cover letter the authors bring up other points that are covered by the fact that subjects do not need to know how e.g. inference and imagination work in order to use them. It is well established that response to imaginative suggestions involves the neurophysiology underlying the corresponding subjective experience (e.g., see https://psyarxiv.com/4zw6g/ for a review); imagining a state of affairs will create appropriate fMRI or SCR responses without the subject knowing how either fMRI or SCRs work.) In sum, none of the arguments raised by the authors actually count against the alternative theory of general inference in response to demand characteristics (followed by imaginative absorption), which remains a simple alternative explanation.

Following a discussion, we ask that, to address the Reviewer's perspective, you acknowledge the possibility that demand characteristics are contributing to participants' performance.

2) "How the a priori probabilities of a common cause under different perceptive contexts are formed remains an open question."

Reviewer 1: One plausible possibility is that in a task emphasizing discrimination (asynchrony task) vs emphasizing integration (RHI) subjects infer different criteria are appropriate; namely in the former, one says "same" less readily.

and

3) "temporally correlated visuotactile signals are a key driving factor behind the emergence of the rubber hand illusion"

Reviewer 1: On the theory that the RH effect is produced by whatever manipulation appears relevant to subjects, of course in this study, where asynchrony was clearly manipulated, asynchrony would come up as relevant. So the question is, are there studies where visual-tactile asynchrony is not manipulated, but something else is, so subjects become responsive to something else? And the answer is yes. Guterstam et al. obtained a clear RH ownership effect and proprioceptive drift for brushes stroking the air, i.e. not touching the rubber hand; Durgin et al. obtained a RH effect with laser pointers, i.e. no touch involved either. The authors may think the latter effect will not replicate; but potentially challenging results still need to be cited.

Here we do not ask that you provide an extensive literature review. Instead, we simply ask that you acknowledge in the discussion that task differences might influence participants' performance (similar to our request above).

4) On noise effects.

Reviewer 1: If visual noise increases until 100% of pixels are turned white, the ratio of likelihoods for C=1 vs C=2 must go to 1 (as there is no evidence for degree of asynchrony) so the probability of saying "yes" goes to p_same, no matter the actual asynchrony (which by assumption cannot be detected at all in this case). p_same is estimated as 0.8 in the RHI condition. Yet as noise increases, p(yes) actually increases higher than 0.8 in the -150 to +150 asynchrony range (Figure 2). Could an explanation be given of why noise increases p(yes) other than the apparent explanation I just gave (that p(yes) moves towards p_same as the relative evidence for the different causal processes reduces)?

The deeper issue is that it seems as visual noise increases, the probability that subjects say the rubber hand is their own increases and becomes less sensitive to asynchrony. In the limit it means that if one had very little visual information, and just knew a rubber hand had been placed nearby on the table, one would be very likely to say it feels like one's own hand (maybe around the level of p_same), just because one felt some stroking on one's own hand. But if the reported feeling of the rubber hand were the output of a special self processing system, the prior probability of a rubber hand slapped down on the table being self must be close to 0; and it must remain close to zero even if you felt some stroking of your hand and saw visual noise. But if the tendency to say "it felt like my own hand" was an experience constructed by realizing the paradigm called for this, then a high baseline probability of saying a rubber hand is self could well arise, even in the presence of a lot of visual noise.

Please consider that this effect may bear on the two different explanations.
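The limiting argument in point 4 can be illustrated with a minimal sketch, assuming a Gaussian causal inference model in which the measured asynchrony `x` has variance `sigma**2` under a common cause and `sigma**2 + sigma_s**2` under separate causes. The function names and all numerical values other than p_same = 0.8 are hypothetical.

```python
import math

def normal_pdf(x, var):
    """Density of a zero-mean normal distribution with variance `var`."""
    return math.exp(-x * x / (2 * var)) / math.sqrt(2 * math.pi * var)

def posterior_common_cause(x, sigma, sigma_s, p_same):
    """p(C=1 | x) under the assumed Gaussian likelihoods."""
    like_c1 = normal_pdf(x, sigma ** 2)
    like_c2 = normal_pdf(x, sigma ** 2 + sigma_s ** 2)
    return p_same * like_c1 / (p_same * like_c1 + (1 - p_same) * like_c2)

# As sensory noise grows, the likelihood ratio tends to 1 and the
# posterior tends to the prior, whatever the measured asynchrony.
for sigma in (50.0, 200.0, 1000.0, 1e5):
    post = posterior_common_cause(150.0, sigma, sigma_s=300.0, p_same=0.8)
    print(f"sigma={sigma:g}  posterior={post:.3f}")
```

Note that this sketch tracks the posterior, not the response probability: if the observer reports "yes" whenever the posterior exceeds 0.5 (an assumed decision rule), p(yes) need not equal the posterior itself.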

5) The authors reject an SDT model in the cover letter on the grounds that subjects would not know where to place their criterion on a trial by trial basis taking into account sensory uncertainty.

Reviewer 1: Why could not subjects attempt to roughly keep p(yes) the same across uncertainties? If the authors say this is asking subjects to keep track of too much, note in the Bayesian model the subjects need an estimate of a variance for each uncertainty to work out the corresponding K. That seems to be asking for even more from subjects. The authors should acknowledge the possibility of a simple SDT model and what if anything hangs on using these different modelling frameworks.

We feel that a brief mention of this possibility will benefit the community when considering how to leverage your interesting work in future studies.

6) A few proofing notes that have been picked up by Reviewer 3 (these are not comprehensive, so please read over the manuscript again more carefully):

1. Main points 1 and 3: The changes in response to these points as indicated in the response to reviewers are not exactly incorporated in the main manuscript file. Could you please correct?

2. Main point 4: in the main manuscript file there is an unnecessary '#' symbol at the end of the equation, please remove.

3. Main point 7: the title for figure 2 in the updated manuscript does not match the title indicated in the response to reviewers. I think the latter would be a better choice.

4. Supplements for figures 2, 3, 4: It seems that after re-numbering these figures, the figure legends for their supplement figures have not been updated and they still show the original numbering. Could you please update?

https://doi.org/10.7554/eLife.77221.sa1

Author response

Essential revisions:

(1) A first reviewer highlights a major interpretation problem based on the 12 s asynchrony, contrasting a domain-general reasoning module with a body ownership one. The reviewer makes suggestions on how to accommodate and clearly refine the claims (e.g. explaining that a main observation is about a shift in criterion).

We have considered the first reviewer’s comments very carefully and provided detailed responses to all his comments; we also explicitly discuss these issues in the new version of the manuscript.

However, we disagree with the comment that there is a major interpretational problem with our experimental asynchrony manipulation. We are also puzzled by the recommendation that we need to “refine the claims” and that “the main observation is about a shift in criterion” because all three reviewers stated that our results support our main conclusions, and none of the reviewers claimed that our main finding is about a shift in criterion, as far as we can see: Reviewer 2 discusses some issues related to the comparisons of the two tasks and inter-individual differences that we replied to, and Reviewer 1 wants us to consider a couple of alternative interpretations. We addressed the latter concern and detailed why we disagree with Reviewer 1’s suggestion that demand characteristics and domain-general reasoning are a reasonable alternative explanation for our key findings.

Our main findings are the specifically shaped psychometric curves with respect to the subtle stepwise asynchrony manipulations and the widening of this curve by the noise manipulation, as predicted by the causal inference model. We do not see how this finding can be explained by demand characteristics or a domain-general cognitive reasoning strategy. In brief, the “computational” hypothesis is hidden from the naïve participants and probably cannot be figured out spontaneously by them in the current detection task with over 500 randomized trials. Note further that we analyze and model each individual subject’s illusion detections and we find very good replication of our key modeling results between individual participants. There are also many features of the task design and the experimental procedures that reduce the risk of demand characteristics, e.g., the use of robots and the experimenter being blind to the noise manipulation. Critically, the shortest asynchrony we used (150 ms) was short enough that most participants did not perceive it reliably as asynchronous. Indeed, previous work in the literature identified the crossmodal threshold to detect visuotactile asynchrony at around 200 ms, which was confirmed by the analysis of our own asynchrony detection task data showing that participants did not detect the +/- 150 ms asynchrony above chance level. Moreover, the participants did not know how many different asynchrony levels were tested (as revealed in post-experiment interviews). Another crucial point is that no feedback was given to the participants about task performance, so they could not learn to map their responses to the different experimental manipulations.

Cognitive bias might, of course, influence our data as in any perceptual decision paradigm, but our critical argument is that in the current study, such effects will most likely affect the conditions globally – across conditions – and are very unlikely to explain specific changes in the psychometric curves that fitted our causal inference model’s predictions.

We also disagree with some of reviewer 1’s more general theoretical comments about the rubber hand illusion, where he seems to imply that the illusion might be nothing more than a combination of demand characteristics and domain-general reasoning, perhaps supplemented by hypnotic suggestibility. Such strong claims are not supported by the previous rubber hand illusion literature or the current study’s results. We have criticized Peter Lush’s and reviewer 1’s controversial claims about the rubber hand illusion in other publications (Ehrsson et al., 2022, Nature Communications; Slater and Ehrsson, 2022, Frontiers in Human Neuroscience), and in the current response letter we revisit and further clarify some of this debate with respect to the current study’s specific findings and present results from additional analyses. We are also attaching materials from two other ongoing studies that further support the current study’s main conclusion. In one model-based fMRI study, using the current rubber hand illusion detection task, we showed that the hand ownership detection decisions are associated with increased activity in specific multisensory brain areas. And in one behavioral study, we used signal detection theory in a rubber hand illusion discrimination task, the results of which support the perceptual nature of the decision processes.

(2) Consistent with the above issue, a second reviewer highlights several empirical limitations and inconsistencies in the authors' analytical approach (points 2 to 5) with a lack of clear comparisons between the two tasks. These points need to be addressed as listed.

We have addressed all the empirical issues raised by the second reviewer in our point-by-point responses. Thanks to these constructive remarks, we think our analytical approach is now more clearly presented. We also justified in more detail our computational approach and explained the strengths and limitations of comparing the two tasks at the computational level.

(3) The issue of inter-individual differences is being raised by two reviewers and several suggestions are made to provide a more transparent report of the BCI model and their consistency with authors' interpretations.

We addressed all the reviewers’ concerns related to inter-individual differences and used many of their recommendations to revise the manuscript so that the reporting of the models and the consistency of the results with our conclusions are more transparent. We also present several additional analyses and figures in the point-by-point response letter to further clarify these points. We hope that all our results regarding the BCI model and the issues related to inter-individual differences are now presented clearly and transparently.

We thank the reviewers and the editor for the valuable feedback and exciting discussions, and we are confident that the manuscript has been improved by taking into account all the various concerns, suggestions, and positive feedback.

Reviewer #1 (Recommendations for the authors):

The authors explore the basis of the "rubber hand illusion" in which people come to feel a rubber hand as their own when it is stroked or tapped synchronously with their real hand. They show that when participants are exposed to lags in the tapping of the rubber and real hand, under conditions of different visual noise, people adjust their sense of ownership according to the manipulated variables. The degree of adjustment can be fitted by a Bayesian model, i.e. one in which evidence for ownership is construed as strength of evidence for the stroking being synchronous, and this is used to update the prior probability of there being a common cause for the sight and feeling of stroking.

The paper shows a lot of care in writing and study methodology. Setting up the equipment, the robotic device and the VR, required a lot of effort; the modeling and analysis have been conscientiously performed. The weakness for me is in the background framing of what is going on: Naturally, as is the authors' prerogative, they have taken a particular stance. However, they also say that these data may help distinguish different theories and approaches from their own preferred one. But no argument is made for how the data distinguish their interpretative approach from different ones. This is left as a promissory note. In fact, I think there is a rather different way of viewing the data.

The authors frame their work in terms of a mechanism for establishing bodily ownership. On the other hand, people may infer how feelings of ownership should vary given what is manipulated in the experiment. That is, if asynchrony is manipulated we know people view this as something that is meant to change their feeling of ownership (e.g. Lush 2020 Collabra). That is, the results may be based on general inference rather than any special ownership module. Consistently, reasoning in medical diagnosis and legal decisions can be fit by Bayesian models as well (e.g. Kitzis et al., Journal Math. Psych.; Shengelia and Lagnado, 2021, Frontiers in Psych). That is, the study could be brought under the research programme of showing people often reason in approximately Bayesian ways in all sorts of inference tasks, especially when concrete information is given trial by trial (e.g. Oaksford and Chater).

The results support the bare conclusions I stated, but what those conclusions mean is still up for grabs. I always welcome attempts to model theoretical assumptions, and the approach is more rigorous than many other experiments in that field. Hopefully it will set an example.

Modeling how inferences are formed when presented with different sources of information is an important task, whether or not those inferences reflect the general ability of people to reason, or else the specific processes of a particular model. For example, Fodor claimed that general reasoning was beyond the scope of science, but the fact that across many different inference tasks similar principles arise – roughly though not exactly Bayesian – indicates Fodor was wrong!

We thank the reviewer for his positive remarks on the methods, modeling results, and the writing of our paper. We are more than happy to discuss the alternative ways of interpreting the data that the reviewer is bringing up in this report. We agree with the reviewer that we conducted our study to test a particular hypothesis and computational model about the rubber hand illusion, and that our study was not designed to try to distinguish between different theories. So in the manuscript, we will stay focused on the conclusions that are directly supported by our results and avoid unnecessary theoretical speculation.

The reviewer’s central critical point is that our results might stem from ‘demand characteristics’, i.e., that participants may infer how feelings of ownership should vary given the experimental manipulations and then use a general reasoning strategy to generate the behavioral responses to meet these expectancies. In other words, rather than performing perceptual decisions based on a genuine illusion experience, the participants may simply be reporting as they think the researchers want them to report. In our response below, we will first analyze this concern in detail, and we will argue that it is very unlikely that demand characteristics and a general reasoning strategy can explain our findings. Next, we will consider some of the more general theoretical points raised by the reviewer, including the recent studies by Lush and colleagues (Lush, 2020; Lush et al., 2020). Here we will argue that the current study’s main findings are well-protected against the kind of cognitive effects reported in Lush’s articles. Despite some theoretical disagreements about rubber hand illusion (Ehrsson et al., 2022; Slater and Ehrsson, 2022), we think our positions converge on the importance of developing new methods to extend existing computational frameworks to several domains of human cognition and perception.

Demand characteristics and a general cognitive reasoning strategy

The reviewer’s central concern is that the current data emerge from a general cognitive inference process driven by cognitive expectancies that the participants develop about the different conditions. But what would these expectations be, more precisely? We used seven steps of visuotactile asynchrony (0 ms, +/- 150 ms, +/- 300 ms and +/- 500 ms) and these differed in small temporal intervals. This means that it is difficult for the participants to separate them and keep track of which stimulus belongs to which trial type among the hundreds of fully randomized trials in our psychophysics experiments. Critically, our hypotheses are “hidden” and correspond to specific psychometric curves that change in an unintuitive way with the noise manipulation. Thus, it is very difficult for the participants to develop a meaningful understanding of what the study is supposed to demonstrate and what they should feel on any given trial.

Importantly, even an asynchrony as short as 150 ms leads to significantly different detection of the rubber hand illusion (- 150 ms versus 0 ms conditions: t = -3.31, df = 14, p = 0.0052, 95% CI = [-2.75; -0.59]; + 150 ms versus 0 ms conditions: t = -3.251, df = 14, p = 0.0059, 95% CI = [-3.65; -0.75]). However, the perceptual threshold for visuotactile asynchrony is above 200 ms (e.g., 211 ± 59.9 ms (mean ± SD) in Costantini et al., 2016; 302 ± 35 ms (mean ± SD) in Shimada et al., 2014; in our own synchrony detection task, participants did not detect the +/-150 ms asynchrony above chance level: 50% detection threshold = 179 +/- 47 ms (mean ± SD)). Thus, in the +/- 150 ms trials the participants are exposed to very subtle manipulations of asynchrony that most participants will not reliably perceive as asynchronous. Consequently, in our view it is implausible that participants form different expectations for different asynchrony trials that they do not even experience as different. Note further that the participants were never informed about how many levels of asynchrony were used in the current study or told anything about the temporal intervals. Indeed, informal interviews at the end of the experimental sessions suggest the participants never realized that seven levels of asynchrony were used (most participants guessed three or four). So, we think it is very unlikely that they could develop the kind of precise cognitive expectations that would be required to generate the hypothesized curves of behavioral responses using a cognitive reasoning strategy.

The concern with demand characteristics becomes even more implausible when the visual noise conditions are taken into consideration. The causal inference model predicts that increasing sensory uncertainty by increasing visual noise should lead to a specific widening of the psychometric curves so that greater asynchronies are tolerated in the illusion; and the data fit the causal inference model’s predictions well (and better than a fixed-criterion model). We think that it is very implausible that the naïve participants in our study could figure out this hidden computational hypothesis by themselves. In informal interviews after the experiments, some participants spontaneously reported that they thought the noisier visual information should degrade the rubber hand illusion, but most participants had no idea what the noise was supposed to do. Even experts in our field rarely correctly predicted the impact of the visual noise: this work has been presented at two conferences (Body Representation Network – 2021; ASSC24 – 2021) and during two invited seminars (LICÉA – Paris Nanterre, 2022; LPNC – Grenoble Alpes University, 2022); when academic colleagues were first introduced to the task at these meetings, most of them expected the visual noise to work against the illusion, i.e., lead to “a weaker illusion”, which is the opposite of our results and does not capture the graded effect. Furthermore, it is critical to again note that participants never receive any feedback about their behavior; they are never told if they are right or wrong. Thus, with the trial-to-trial random variation in sensory noise and visuotactile asynchrony in the absence of feedback, it is impossible for the participants to learn to map their answers onto the specific predictions of the model.
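To make the predicted widening concrete, the following is a minimal sketch, not the fitted model from the manuscript. It assumes Gaussian measurement noise with standard deviation `sigma`, an asynchrony of exactly zero under a common cause, and, purely for illustration, a zero-mean Gaussian spread `sigma_s` of asynchronies under separate causes. All parameter values (`p_same`, `sigma_s`, the two noise levels) are hypothetical and were chosen only to show the qualitative pattern.

```python
import math

def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def bci_criterion(sigma, sigma_s, p_same):
    """Largest |measured asynchrony| for which P(common cause | measurement) > 0.5.

    Assumes x ~ N(0, sigma^2) under C=1 and x ~ N(0, sigma^2 + sigma_s^2) under C=2.
    """
    total_var = sigma**2 + sigma_s**2
    log_term = math.log(p_same / (1.0 - p_same)) + 0.5 * math.log(total_var / sigma**2)
    k_squared = 2.0 * sigma**2 * total_var / sigma_s**2 * log_term
    return math.sqrt(max(k_squared, 0.0))  # 0 means "never report a common cause"

def p_yes(asynchrony, sigma, criterion):
    """Probability that the noisy measurement lands inside [-criterion, +criterion]."""
    upper = normal_cdf((criterion - asynchrony) / sigma)
    lower = normal_cdf((-criterion - asynchrony) / sigma)
    return upper - lower

# Hypothetical illustration values (milliseconds), not estimates from the study.
P_SAME, SIGMA_S = 0.8, 400.0
SIGMA_LOW_NOISE, SIGMA_HIGH_NOISE = 150.0, 250.0

k_low = bci_criterion(SIGMA_LOW_NOISE, SIGMA_S, P_SAME)
k_high = bci_criterion(SIGMA_HIGH_NOISE, SIGMA_S, P_SAME)

for asynchrony in (0, 150, 300, 500):
    bci_low = p_yes(asynchrony, SIGMA_LOW_NOISE, k_low)
    bci_high = p_yes(asynchrony, SIGMA_HIGH_NOISE, k_high)
    # Fixed-criterion observer: keeps the low-noise criterion when noise increases.
    fixed_high = p_yes(asynchrony, SIGMA_HIGH_NOISE, k_low)
    print(f"{asynchrony:>3} ms  BCI low={bci_low:.2f}  BCI high={bci_high:.2f}  fixed high={fixed_high:.2f}")
```

Under these assumed values, the causal inference observer's criterion grows with sensory noise, so "yes" responses at larger asynchronies become more frequent, whereas an observer with a fixed criterion shows a flatter curve without this widening.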

It is also relevant to point out here that the experimenter was always out-of-sight of the participant and that all visuotactile stimulation was produced by two robots. Thus, the participant could not pick up putative subtle social cues from the experimenter that could potentially serve as an implicit source of information about the performance or the hypotheses. Moreover, the experimenter was blind to the visual noise condition.

If we look a bit more closely at the psychophysics task itself, please note that it is based on a huge number of trials presented in a fully randomized order, and with relatively little time after each trial to give the classification response. This experimental design makes the use of a reasoning strategy difficult. It is improbable that the participants could keep track of the 12 randomized repetitions of the 42 conditions tested in the current experiment, which sums up to over 500 trials in a single experiment, which is required in order to generate response patterns based on a cognitive reasoning strategy to “simulate” the causal inference model’s fit. In addition, such a cognitive strategy based on domain-general reasoning would put great demands on working memory, long-term memory, analytical reasoning skills, as well as knowledge about probabilistic principles of perception. In addition to thinking about each trial, they would simultaneously have to remember the approximate number of yes/no responses for each asynchrony level in their previous history of responses and how the frequencies of responses on each of seven different asynchrony conditions and three levels of noise should change according to Bayesian probabilistic principles of causal inference. We are not sure that this is even theoretically possible with the current paradigm. Recall also that our participants were naive participants recruited from outside the department who had nothing to gain from even attempting to perform such an unpleasantly demanding cognitive task. It is much easier for the participants just to follow the instruction and base their response on each trial on the rubber hand illusion feeling.

In addition to the above arguments, also note that we analyze and model the data of each participant individually. Thus, even if we assume that there were a few participants who could figure out the specific hypotheses and who also had the motivation, determination, theoretical knowledge, and cognitive capacity to simulate behavior to meet our computational predictions against task instruction (a super version of “the good subject”), this cannot explain why we observed good model fits in the large majority of participants (pseudoR2 above 0.60 for 11 out of 15 participants). Importantly, when comparing our different models, we added to our AIC/BIC analysis a protected exceedance probability analysis (Rigoux et al., 2014). This type of analysis includes participant as a random factor, i.e., it takes into account the possibility that the goodness of fit of one model would be mostly due to a few “perfect” subjects while other participants followed another model or a random distribution, by computing the posterior probability that one model occurs more frequently than any other model in the set, above and beyond chance. The results of this type of analysis highlighted the relevance and dominance of our main model across our whole sample. In addition to these specific results, most participants in experimental behavioral studies like the current one simply want to follow task instructions and “the good subject” seems to be relatively rare: in reviewing the classic literature on demand characteristics in social psychology experiments, Weber and Cook (1972) concluded that evidence for demand characteristics in experimental psychological studies was weak and ambiguous in most cases and that convincing evidence for instances where “the good subject” explained the results was lacking.
The current analysis approach based on individual participants’ perception also means that our results cannot stem from weak demand characteristics or cognitive reasoning effects occurring in individual subjects that are then aggregated into a “false” group-level effect.
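For readers less familiar with the information criteria mentioned above, here is a minimal sketch of how AIC and BIC are computed per participant from a fitted model's maximized log-likelihood. The log-likelihoods, parameter counts, and trial count below are invented placeholders, not values from the study, and the protected exceedance probability analysis is not reproduced here.

```python
import math

def aic(log_likelihood, n_params):
    """Akaike information criterion: lower values indicate a better trade-off."""
    return 2.0 * n_params - 2.0 * log_likelihood

def bic(log_likelihood, n_params, n_trials):
    """Bayesian information criterion: penalizes parameters more as data grow."""
    return n_params * math.log(n_trials) - 2.0 * log_likelihood

# Hypothetical fits for ONE participant (placeholder numbers for illustration only).
N_TRIALS = 252
fits = {
    "causal_inference": {"log_likelihood": -110.0, "n_params": 5},
    "fixed_criterion": {"log_likelihood": -125.0, "n_params": 5},
}

scores = {
    name: {
        "AIC": aic(fit["log_likelihood"], fit["n_params"]),
        "BIC": bic(fit["log_likelihood"], fit["n_params"], N_TRIALS),
    }
    for name, fit in fits.items()
}

# The model with the lowest criterion value is preferred for this participant.
best_by_aic = min(scores, key=lambda name: scores[name]["AIC"])
best_by_bic = min(scores, key=lambda name: scores[name]["BIC"])
print(scores, best_by_aic, best_by_bic)
```

Because both criteria are computed separately for each participant, a handful of unusually well-fitting individuals cannot by themselves produce a consistent preference across the sample.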

So, in sum, we do not see how expectations, demand characteristics, and high-level domain-general reasoning can explain the current study’s main results or constitute a plausible alternative interpretation for the conclusion that body ownership in the rubber hand illusion is governed by Bayesian causal inference of sensory evidence. Although cognitive bias might lead to “global” changes in decision criteria, as we will discuss further below, such possible effects cannot, in our view, explain the specific shapes of the psychometric curves related to the subtle asynchrony manipulation or the changes in these curves occurring when sensory uncertainty is manipulated.

The fact that reasoning at a “high cognitive level” (e.g., medical and judicial decisions) can be described as a near-Bayesian general inferential process does not exclude the existence of specific perceptual modules. Similar probabilistic computational decision principles may govern cognition and perception (e.g., Shams and Beierholm, 2022). However, this does not mean that if a perceptual decision task follows Bayesian probabilistic principles, it must be based on high-level cognition. The idea that automatic perceptual decisions related to the multisensory binding problem are implemented in the brain’s perceptual systems and operate according to Bayesian causal inference principles is rather well established in the literature on multisensory perception (Aller and Noppeney, 2019; Körding et al., 2007; Rohe et al., 2019; Rohe and Noppeney, 2016, 2015; Shams and Beierholm, 2010). In the current study, we extend this principle that is relevant for illusory and non-illusory audio-visual perceptual effects to the case of a multisensory bodily illusion.

Lush’s studies

The reviewer states that “if asynchrony is manipulated we know people view this as something that is meant to change their feeling of ownership” and cites Lush 2020 (Collabra). We disagree with this statement because we think it is too strong and too generalizing, and it is not clear how Lush’s findings relate to the current study’s results since Lush 2020 did not test the rubber hand illusion. In fact, we still know quite little about how conceptual knowledge about the rubber hand illusion influences subjective ratings of the illusion in actual experiments when naïve participants are instructed to report on their illusion feeling.

Lush (2020) has several limitations (Slater and Ehrsson, 2022), which we need to point out because they are relevant for the current discussion. First, Lush 2020 never tested the participants on the actual rubber hand illusion, so we do not know how the participants’ knowledge and expectations might have influenced their subjective ratings of illusion strength in an actual rubber hand illusion experiment. Second, the information and instructions provided to the participants in Lush 2020 are different from a typical rubber hand illusion experiment. In Lush 2020 the participants, who were psychology undergraduates, studied extensive written and video material about the rubber hand procedures (on their own laptops), including detailed information about the synchronous and asynchronous stimulation conditions, videos of the hidden real hand receiving the different types of tactile stimulation, and explicit information that the purpose of the rubber hand illusion was to “generate changes in experience”. The participants' task was to try to guess what people experience in the rubber hand illusion when shown the questionnaire statements that are used to quantify the illusion. In typical rubber hand illusion experiments, naïve participants are given minimal information about the procedures, and they are just instructed to report what they experience. Thus, these differences in task instruction (metacognitive evaluation versus rating a perceptual sensation) and the differences in the information about the rubber hand illusion may have created demand characteristics and expectations that are specific to Lush’s study and not representative of rubber hand illusion experiments in general. In other words, regardless of what the psychology undergraduates may or may not have thought about how the illusion works, this may not have substantially influenced their ratings of the illusion in a real rubber hand illusion experiment.
Third, when the participants in Lush 2020 are given a rubber hand illusion questionnaire and asked to fill it out according to their expectancies, they report affirmative mean illusion ratings in both the synchronous and the asynchronous conditions. Notably, this is qualitatively different from real rubber hand illusion experiments, where the illusion is typically clearly rejected in the asynchronous condition (negative scores in the order of mean -1 to -2; e.g., Kalckert and Ehrsson, 2014; Reader et al., 2021), suggesting that the participants’ guesses about the RHI were vague when it came to the specific effect of the asynchrony manipulation.

Interestingly, in Lush et al. (2020), the participants' expectations about what they would experience in the synchronous and asynchronous conditions were registered before the rubber hand illusion (together with their trait suggestibility), and then the rubber hand illusion was tested and quantified with questionnaires (and proprioceptive drift). Importantly, these expectations only had a very small effect on the questionnaire ratings from the actual rubber hand illusion experiment, only affecting the synchronous condition’s ratings a little and apparently not at all affecting the asynchronous condition (Slater and Ehrsson, 2022). Critically, the contribution was so small that it could effectively be ignored compared to the contribution of the visuotactile synchrony-asynchrony manipulation and trait suggestibility (Slater and Ehrsson, 2022). Furthermore, the contribution of visuotactile synchrony-asynchrony was two-to-three times more important than expectations and trait suggestibility combined. Thus, potential expectancies are unlikely to explain the current study’s main behavioural results. In addition to this, Lush et al., 2020, as well as recent reanalyses of the same dataset (Ehrsson et al., 2022; Slater and Ehrsson, 2022), clearly show that the differences in illusion ratings between the synchronous and asynchronous conditions are unrelated to trait suggestibility. Thus, possible differences in trait suggestibility cannot explain the current study's main findings since these are based on asynchrony manipulation and differences between conditions, and such difference measures do not correlate with trait suggestibility (Lush et al., 2020; Ehrsson et al., 2022; Slater and Ehrsson, 2022). We think it was relevant to clarify this point here because suggestibility is a key concept in Lush’s articles.

To us, Lush and colleagues’ work is interesting because it informs us about individual differences in how trait suggestibility and cognitive expectancies may influence subjective illusion reports. There is no incompatibility between the argument that the rubber hand illusion is a bodily illusion driven to a large extent by multisensory correlations and the argument that subjective rubber hand illusion reports can be modulated by top-down cognitive processes (Slater and Ehrsson, 2022) and show individual differences that are modulated by cognition. In the current study, we focus on the former multisensory perceptual aspects of the illusion at the level of individual subjects. We did not test Lush and colleagues’ hypotheses about demand characteristics, although the current computational modeling and psychophysics approach could be used for this purpose in future studies.

Cognitive bias

To be clear, we are not arguing that participants’ cognitive expectations cannot influence rubber hand illusion reports at all, or that the current dataset is completely free from cognitive biases, or that there could be no changes in decision criteria between some of the conditions in some of the participants. Our main argument is that such effects can probably not explain our main computational modeling results. It is possible that some participants adopt a more conservative decision criterion than other individuals in the detection task, while others use a more liberal criterion. Such differences in decision criteria could stem from many different postperceptual processes, including individual differences in trait suggestibility, but also from differences in perceptual bias that can capture key perceptual aspects of perceptual illusions (Morgan et al., 1990). The effect of biases (cognitive or perceptual) could be accounted for by changes in the prior in our models. As such, a cognitive bias effect related to demand characteristics and expectations would most likely manifest itself as a global influence on all or some of the conditions (Lush et al., 2020; Slater and Ehrsson, 2022), and would not explain the specific patterns of trial-to-trial results we observed that fit with the causal inference model’s predictions.

Furthermore, a hypothetical change in decision criterion related to perceived synchrony (e.g., 0 ms and +/- 150 ms trials) or asynchrony (e.g., +/- 300 ms and +/- 500 ms) would not lead to the specific changes predicted by the causal inference model. Actually, such possible effects would correspond to a “fixed criteria” strategy rather than a Bayesian causal inference one. But the current study argues against such a fixed criteria strategy in our data because we formally compared the fit of the Bayesian causal inference model to our Fixed-Criterion (FC) model, and the causal inference model outperformed the FC model. In addition, and as already said, we have used a randomized trial design where all asynchrony conditions and the noise conditions are presented in random trial order. Such a design is considered to reduce the risk of adaptation and changes in decision criteria compared to designs where different conditions are presented in separate runs, and this was something we took into account when designing the study.

In conclusion, and after considering all the reviewer’s concerns, we still think that our conclusion that the rubber hand illusion is governed by Bayesian causal inference based on sensory evidence and sensory uncertainty is well-supported by the results. Our findings support this conclusion, and our results and conclusions are in line with our hypothesis and theoretical framework of the rubber hand illusion as a multisensory bodily illusion, as well as the broader empirical and theoretical literature on causal inference in multisensory perception. We think that demand characteristics and a domain-general cognitive strategy, as suggested by reviewer 1, are an unlikely explanation for the current study’s main modeling findings, but we have made changes in the revised version of the manuscript to explicitly discuss this issue.

Specific comments:

(i) The model can be described as Bayesian, but how different is it from a signal detection theory model, with adjustable vs fixed criteria, and a criteria offset for the RHI and asynchrony judgment task? In other words, rather than the generative model being an explicit model for the system, instead different levels of asynchrony simply produce certain levels of evidence for a time difference, as in an SDT model. Then set up criteria to judge whether there was or was not a time difference. Adjust criteria according to prior probabilities, as is often done in SDT. That's it. Is this just a verbal rephrasing?

The reviewer points toward an interesting discussion on the differences and potential overlap between two different computational frameworks and correctly points out that SDT and BCI sometimes lead to the same predictions at the behavioral level. However, we would argue that the scope of these two frameworks diverges and that the BCI framework is more relevant for the current study’s aims.

The SDT allows the estimation of decisional thresholds given one or several sensory inputs. From this standpoint, SDT can describe the thresholds used by the participants but does not explain how they are established. Under this approach, one could speculate that thresholds are quantitatively adjusted in two different tasks (e.g., under two different priors), which would require complexifying the initial SDT assumptions. However, it would be unrealistic to assume that the participants learn to adjust their SDT threshold from trial-to-trial, taking into account the level of sensory uncertainty.

On the contrary, the BCI framework is designed to be a more comprehensive, process-based approach: this framework explains how the perceptual thresholds are computed. This is not just “verbal rephrasing” but a fundamentally different approach to examine hypotheses about underlying computational principles. As a result, our BCI model efficiently captures the observed behavioral effect of visual noise on body ownership perception, including the variations in rubber hand illusion detection on a trial-to-trial basis.

Both approaches could be used in future psychophysics studies on bodily illusions, but with different purposes. For example, we are currently working on an SDT rubber hand illusion study based on a 2-AFC hand ownership task that we have developed in our lab (Chancel et al., 2021; Chancel and Ehrsson, 2020). Among this study's many interesting observations, it is relevant to mention here that hand ownership sensitivity (d’) is significantly above zero for stimulation asynchronies of 50 ms, 100 ms and 200 ms, i.e., small asynchronies in a similar range as used in the current experiment. Note also the significant ownership sensitivity for delays of 50 ms, which are too brief to be consciously perceived and therefore should not produce cognitive expectancies (Lanfranco et al., 2022 in preparation). Since hand ownership sensitivity (d’) is a bias-free estimate of the participants’ rubber hand illusion discrimination behavior, this finding is in line with the current investigation’s Bayesian modeling results and interpretation.
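The sense in which d’ is bias-free can be illustrated with a small sketch. The letter does not state the exact formula used in the 2-AFC study, so the code below uses the textbook equal-variance yes/no definitions as a generic stand-in; the function names and the example rates are hypothetical.

```python
from statistics import NormalDist

_STANDARD_NORMAL = NormalDist()  # mean 0, standard deviation 1

def d_prime(hit_rate, false_alarm_rate):
    """Sensitivity under equal-variance SDT: z(hit rate) - z(false alarm rate)."""
    z = _STANDARD_NORMAL.inv_cdf
    return z(hit_rate) - z(false_alarm_rate)

def criterion(hit_rate, false_alarm_rate):
    """Response bias c: positive values mean a conservative observer."""
    z = _STANDARD_NORMAL.inv_cdf
    return -0.5 * (z(hit_rate) + z(false_alarm_rate))

# Three hypothetical observers with identical sensitivity but different bias.
TRUE_SENSITIVITY = 1.5
for bias in (-0.5, 0.0, 0.5):
    hits = _STANDARD_NORMAL.cdf(TRUE_SENSITIVITY / 2.0 - bias)
    false_alarms = _STANDARD_NORMAL.cdf(-TRUE_SENSITIVITY / 2.0 - bias)
    print(f"bias={bias:+.1f}  d'={d_prime(hits, false_alarms):.3f}  c={criterion(hits, false_alarms):+.3f}")
```

In this sketch, shifting the decision criterion changes the hit and false-alarm rates together but leaves d’ unchanged, which is why a d’ above zero at small asynchronies cannot be attributed to a change in response bias alone.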

One thing on the framing of the model: Surely a constant offset over several taps is just as good evidence for a common cause no matter whether the offset is 0 or something else? But if the prior probability is specifically that common cause is the same hand is involved (which requires an offset close to 0), surely that prior probability is essentially zero? So how should the assumption of C1 be properly framed?

The reviewer is correct that the temporal correlation between the visual and tactile stimulation in our experiment could promote multisensory integration due to the constant offset over several taps regardless of the visuotactile asynchrony (Parise and Ernst, 2016). Yet, the visuotactile delay also matters for multisensory perception (as discussed in Parise and Ernst, 2016), and that is the parameter that we manipulated in the current study keeping all other factors constant (except for the noise manipulation). Thus, both the correlations and the asynchrony matter, and the greater the asynchrony, the less information in favor of the rubber hand as being one’s own despite the visuotactile correlation.

Importantly, the inference of a common cause in models such as the one we are using takes into account the discrepancy between the sensory signals; thus, the magnitude of the offset matters. This is especially so because, in our model, a common cause for vision and touch (C = 1) means that the same hand is involved as the source of the visual and tactile inputs. And indeed, it requires the offset to be close to 0; that is why we assume that the asynchrony s is always zero when C = 1 (see appendix 1 for a detailed presentation, page 36 lines 1056 to 1059). A similar phrasing of the different causal scenarios was already proposed by Samad et al. (2015). In the future, more complex models could be developed that incorporate both temporal correlations and visuotactile delays, but this is beyond the aim of the current study.
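
The role of the offset magnitude can be sketched numerically. The snippet below is a simplified illustration rather than the fitted model from appendix 1: it keeps the stated assumption that s = 0 when C = 1, and additionally assumes (for illustration only) that under separate causes the asynchrony is drawn from a zero-mean Gaussian with standard deviation `sigma_s`, and that the measured asynchrony `x` is corrupted by Gaussian sensory noise with standard deviation `sigma`. All names and numerical values are hypothetical.

```python
from statistics import NormalDist


def posterior_common_cause(x, sigma, sigma_s, p_same):
    """Posterior probability of a common cause, p(C=1 | x).

    x       : measured visuotactile asynchrony (ms)
    sigma   : sensory noise SD (ms), hypothetical value
    sigma_s : SD of the asynchrony under separate causes (assumed Gaussian)
    p_same  : prior probability of a common cause
    """
    # C = 1: the true asynchrony is exactly 0, so x ~ N(0, sigma^2).
    like_c1 = NormalDist(0.0, sigma).pdf(x)
    # C = 2 (assumption): s ~ N(0, sigma_s^2), so x ~ N(0, sigma^2 + sigma_s^2).
    like_c2 = NormalDist(0.0, (sigma ** 2 + sigma_s ** 2) ** 0.5).pdf(x)
    # Bayes' rule over the two causal scenarios.
    return p_same * like_c1 / (p_same * like_c1 + (1.0 - p_same) * like_c2)


# Hypothetical parameter values, chosen only to show the qualitative pattern.
for asynchrony in (0, 100, 200, 500):
    p = posterior_common_cause(asynchrony, sigma=100.0, sigma_s=300.0, p_same=0.8)
    print(f"asynchrony {asynchrony:>3} ms -> p(C=1 | x) = {p:.3f}")
```

Under these assumptions the posterior probability of a common cause falls as the measured offset grows, which is the sense in which the magnitude of the offset matters even when the offset is constant across taps.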

(ii) Lines 896-897: "…temporally correlated visuotactile signals are a key driving factor behind the emergence of the rubber hand illusion Chancel and Ehrsson, 2020, …"

Cite findings that need some twisting to fit in with this view, e.g. the finding that imagining a hand is one's own creates SCRs to its being cut; and perhaps more easy to deal with but I think rather telling, no visual input of a hand is needed (Guterstam et al., 2013) and laser lights instead of brushes work just about as well (Durgin et al., 2007) as does stroking the air (Guterstam et al., 2016), making magnetic sensations akin to the magnetic hands suggestion in hypnosis. It seems the simplest explanation is that participants respond to what they perceive as what is meant to be relevant in the study manipulations. Suitable responses are constructed, based on genuine experience or otherwise, in accordance with the needs of the context. The very way the question is framed determines the answer according to principles of general inference (e.g. Lush and Seth, 2022, Nat Coms; Corneille and Lush, https://psyarxiv.com/jqyvx/).

The statement that “temporally correlated visuotactile signals are an important factor driving the emergence of the rubber hand illusion” is supported by a very large previous literature, as well as the current study’s findings. Note that we are not saying this is the only factor that drives the illusion (e.g., spatial congruence factors also contribute), only that it is an important factor.

We cited Chancel and Ehrsson (2020) because it is a highly relevant rubber hand illusion study given the similarities in paradigm and the manipulation of fine-grained temporal asynchronies of visual and tactile signals. Chancel and Ehrsson (2020) used seven levels of visuotactile asynchrony shorter than or equal to 200 ms, which means that the different visuotactile asynchronies were not clearly perceived by the participants, and yet the collected data showed a significant relationship between the degree of visuotactile asynchrony and the rubber hand illusion as quantified in a discrimination task. Moreover, the illusory hand ownership discriminations were influenced by a manipulation of the distance between the participants’ hand and the rubber hand (5 cm change in the lateral axis), in line with the spatial congruence principle of multisensory integration, even though the participants did not notice this distance manipulation (which occurred between runs and out of sight of the participants, as confirmed in post-experimental interviews). The participants were thus very unlikely to form high-level cognitive expectations about the specific hypothesis related to this orthogonal and small spatial manipulation. Our statement that temporally correlated visuotactile signals are a key driving factor behind the rubber hand illusion comes from these observations, but as we said, also from a very large previous literature (e.g., Blanke et al., 2015; Botvinick and Cohen, 1998; Ehrsson, 2020; Ehrsson et al., 2004; Kilteni et al., 2015; Slater and Ehrsson, 2022; Tsakiris, 2010; Tsakiris and Haggard, 2005).

The statement under discussion is also supported by our own data. To illustrate this further, we ran a new post hoc analysis. A mixed-effect logistic regression with participant as a random effect confirms a significant effect of asynchrony (p < 2e-16), as expected (see Author response image 1). A clear effect of asynchrony is seen in every participant, which is in line with our claim that this is a key factor that drives the emergence of the rubber hand illusion.

Mixed-effect logistic regression with participant as random effect.

Dots represent individual responses, the curves are the regression fits, and the shaded areas are the 95% confidence intervals.

We could very well have cited Guterstam et al., (2013) here because it is a well-controlled study with many experiments that support the conclusion that temporally correlated visuotactile signals play a critical role in bodily illusions such as the rubber hand illusion. In this study, Arvid Guterstam presented evidence from 10 separate experiments conducted on different groups of naive participants; 234 subjects in total (one explorative pilot experiment and nine experiments that would nowadays be called hypothesis testing experiments). Nine of the experiments included synchronous and asynchronous conditions, and all findings support an important role for synchronous visuotactile correlations in driving this version of the rubber hand illusion. Several additional control conditions were included in this study, such as various spatial manipulations related to the spatial rule of multisensory integration and a control condition involving a block of wood that eliminates the illusion due to shape incongruence and top-down factors. The outcome measures were questionnaire ratings, increases in SCR triggered by physical threats towards the illusory hand, changes in hand position sense towards the illusory hand (proprioceptive drift), as well as one functional magnetic resonance imaging experiment. Critically the synchronous illusion condition leads to greater illusion questionnaire ratings, greater threat-evoked SCR, greater proprioceptive drift, and greater BOLD signals in key areas related to multisensory integration of bodily signals (such as the posterior parietal cortex, premotor cortex, and cerebellum) than the asynchronous condition and the other control conditions. Thus, the findings from Guterstam et al., (2013) support the sentence under discussion above and are in line with the current study’s findings and conclusions.

Guterstam et al., (2016) examine a somewhat different perceptual phenomenon of a perceived causal connection (similar to a magnetic force field) between visual and tactile events close to one’s own hand in peripersonal space. Again, this study is well controlled and contains many experiments, controls and measures, and the study finds that synchronous visuotactile stimulation and a limited spatial extent of peripersonal space around the hand are critical factors for this visuotactile illusion effect to arise. This study’s findings are in line with the rubber hand illusion literature and the literature on multisensory integration in peripersonal space. The “similarity” to “magnetic hands suggestions” that the reviewer mentions makes no sense to us. Such hypnotic suggestions do not obey temporal and spatial rules of multisensory integration and do not depend on correlated visuotactile signals. Also, the procedures and instructions are very different.

We cannot say much about the Durgin et al. (2007) study because we have not tried to replicate it. However, in an ongoing study, we have used a control condition where participants just look at a rubber hand while we shine a laser light on it without any tactile or other somatosensory stimulation delivered to the hidden real hand. We find low questionnaire ratings in this condition, significantly lower than typically observed in rubber hand illusion conditions with synchronous visuotactile stimulation and more similar to the control condition when participants just look at a rubber hand without any stimulation. Synchronous visuotactile stimulation leads to a significantly stronger rubber hand illusion than this latter condition when participants are just looking at the rubber hand without any visuotactile stimulation (e.g., Guterstam et al., 2019). We think more studies are needed before we can draw any strong conclusions from Durgin et al. (2007).

It is well known in psychological research that SCR is a nonspecific measure, and that different perceptual, emotional, and cognitive processes can influence SCR. Thus, just as in psychophysiological research in general, it is very important to have adequate control conditions and adopt a hypothesis-driven approach when using the SCR in bodily illusion research. SCR responses triggered by physical threats are ecologically valid because they probe basic emotional defense reactions triggered by bodily threats (Ehrsson et al., 2007; Graziano and Cooke, 2006). In our bodily illusion studies with threat-evoked SCR, we always use control conditions (e.g., Gentile et al., 2013; Guterstam et al., 2015) and only focus on illusion-condition-specific increases in SCR triggered by the threat stimulus compared to when identical threats are presented in the asynchronous and other control conditions; in addition, we sometimes use control stimuli like non-threatening objects (e.g., Guterstam et al., 2015; Petkova and Ehrsson, 2008). Also bear in mind that most naïve participants do not understand how SCR works. Thus, in our view it is very unlikely that naïve participants can voluntarily control their evoked SCR responses in a condition-specific manner to simulate physiological responses in experimental designs like the ones described above. Also note that physical threats toward the rubber hand elicit specific fMRI responses in areas related to pain anticipation and fear, such as the anterior insular cortex (Ehrsson et al., 2007; Gentile et al., 2013) and amygdala (Guterstam et al., 2015), and the stronger the illusion in the synchronous condition compared to the asynchronous (and other controls), the stronger these threat-evoked BOLD responses. This suggests that the threat-evoked SCR reflects centrally mediated emotional defense reactions that are triggered by perceived threats towards one’s own body.

Note that in the recent study by Lush and colleagues (Lush et al., 2021) no SCR recordings were conducted, so the claims about possible links between cognitive expectancies and changes in SCR remain speculative. This study also suffers from the same limitations as the questionnaire study discussed above (Lush 2020) in that psychology students were asked to guess which of the two conditions – the synchronous conditions or the asynchronous conditions – they thought should produce the strongest SCR after reading and learning about the rubber hand illusion. But as said, SCR was not registered, and the rubber hand illusion was not tested. Thus, it is unclear how metacognitive guesses about the rubber hand illusion relate to condition-specific differences in threat-evoked SCR during an actual rubber hand illusion experiment in genuinely naïve participants. To the best of our knowledge, the potential influence of cognitive expectations on SCR has not yet been tested in a controlled bodily illusion experiment.

The reviewer brought up the topic of indirect measures of the rubber hand illusion here, so we would like to make a couple of further clarifications. In rubber hand illusion studies, it is common to supplement the results from questionnaires and rating scales with more objective tests such as proprioceptive drift, the cross-modal congruence task, threat-evoked SCR, fMRI, and electrophysiology (EEG/ECoG) (see Slater and Ehrsson 2022 for a recent review). The results of these studies support the hypothesis that the rubber hand illusion is a multisensory body illusion and underscore the importance of visual and somatosensory signals. Take fMRI, for example. Numerous studies have found differences between the synchronous and asynchronous conditions in specific premotor and posterior parietal areas that are known to be involved in the integration of visual, tactile, and proprioceptive bodily signals (Brozzoli et al., 2012; Ehrsson et al., 2004; Gentile et al., 2013; Limanowski and Blankenburg, 2016). Moreover, the stronger the illusion-condition-specific differences in BOLD signals in these areas, the stronger the illusion as rated in questionnaires (illusion ratings synchronous minus asynchronous).

Extremely relevant to the present study, in a recent imaging study, which is currently under revision in a leading neuroscience journal, we used fMRI to scan 30 participants as they performed the current rubber hand illusion detection task (based on the same stepwise asynchrony manipulation) and fitted the responses to the current BCI model (Chancel et al., see attached abstract for a poster at the OHBM conference in Glasgow – June 2022). As expected, BOLD activity in the premotor and posterior parietal cortices was related to illusion detection at the level of individual participants and trials, and activity in the posterior parietal cortex reflected the Bayesian causal inference model’s predicted probability of illusion emergence based on each participant’s behavioral response-profile. These findings corroborate the current behavioral study’s findings and conclusions and suggest that the rubber hand illusion detection decisions involve activity in multisensory areas related to integration versus segregation of bodily multisensory information.

That mental imagery of one’s hand being cut may modulate SCR is unsurprising. Mental imagery can influence emotional processes and lead to changes in SCR recordings. Without knowing which study you refer to, and what control conditions were used, it is difficult for us to say much more. For example, Hägni et al. (2008) did not elicit a bodily illusion but compared passive viewing of arms playing a ball game with a mental imagery condition, so the changes in SCR reported in this study could relate to different cognitive factors.

Last but not least, we disagree with the reviewer’s statement that “the simplest explanation (for the rubber hand illusion, our addition) is that participants respond to what they perceive as what is meant to be relevant in the study manipulation”. Given all the arguments we presented above and in our first response, we fail to see how this “simple” interpretation can explain our key findings. Another simple explanation, which accounts for them well, is that participants simply report what they feel when they experience the illusion.