A neural network model of differentiation and integration of competing memories

eLife assessment

This paper presents important computational modeling work that provides a mechanistic account for how memory representations become integrated or differentiated (i.e., having distinct neural representations despite being similar in content). The authors provide convincing evidence that simple unsupervised learning in a neural network model, which critically weakens connections of units that are moderately activated by multiple memories, can account for three empirical findings of differentiation in the literature. The paper also provides insightful discussion on the factors contributing to differentiation as opposed to integration, and makes new predictions for future empirical work.

https://doi.org/10.7554/eLife.88608.3.sa0

Important: Findings that have theoretical or practical implications beyond a single subfield

Convincing: Appropriate and validated methodology in line with current state-of-the-art

During the peer-review process the editor and reviewers write an eLife Assessment that summarises the significance of the findings reported in the article (on a scale ranging from landmark to useful) and the strength of the evidence (on a scale ranging from exceptional to inadequate).

Abstract

What determines when neural representations of memories move together (integrate) or apart (differentiate)? Classic supervised learning models posit that, when two stimuli predict similar outcomes, their representations should integrate. However, these models have recently been challenged by studies showing that pairing two stimuli with a shared associate can sometimes cause differentiation, depending on the parameters of the study and the brain region being examined. Here, we provide a purely unsupervised neural network model that can explain these and other related findings. The model can exhibit integration or differentiation depending on the amount of activity allowed to spread to competitors — inactive memories are not modified, connections to moderately active competitors are weakened (leading to differentiation), and connections to highly active competitors are strengthened (leading to integration). The model also makes several novel predictions — most importantly, that when differentiation occurs as a result of this unsupervised learning mechanism, it will be rapid and asymmetric, and it will give rise to anticorrelated representations in the region of the brain that is the source of the differentiation. Overall, these modeling results provide a computational explanation for a diverse set of seemingly contradictory empirical findings in the memory literature, as well as new insights into the dynamics at play during learning.

Introduction

As we learn, our neural representations change: The representations of some memories move together (i.e. they integrate), allowing us to generalize, whereas the representations of other memories move apart (i.e. they differentiate), allowing us to discriminate. In this way, our memory system performs a delicate balancing act to create the complicated and vast array of knowledge we hold. But ultimately, learning itself is a very simple process: strengthening or weakening individual neural connections. How can a simple learning rule on the level of individual neural connections account for both differentiation and integration of entire memories, and what factors lead to each outcome?

Classic supervised learning models (Rumelhart et al., 1986; Gluck and Myers, 1993) provide one potential solution. These theories posit that the brain adjusts representations to predict outcomes in the world: When two stimuli predict similar outcomes, their representations become more similar; when they predict different outcomes, their representations become more distinct. Numerous fMRI studies have obtained evidence supporting these theories, by utilizing representational similarity analysis to track how the patterns evoked by similar stimuli change over time (e.g. Schapiro et al., 2013; Schapiro et al., 2016; Tompary and Davachi, 2017).

However, other studies have found that linking stimuli to shared associates can lead to differentiation rather than integration. For instance, in one fMRI study, differentiation was observed in the hippocampus when participants were tasked with predicting the same face in response to two similar scenes (i.e. two barns), so much so that the two scenes became less neurally similar to each other than they were to unrelated stimuli (Favila et al., 2016; for related findings, see Schlichting et al., 2015; Molitor et al., 2021; for reviews, see Brunec et al., 2020; Duncan and Schlichting, 2018; Ritvo et al., 2019).

Findings of this sort present a challenge to supervised learning models, which predict that the connection weights underlying these memories should be adjusted to make them more (not less) similar to each other. This kind of ‘similarity reversal’, whereby stimuli that have more features in common (or share a common associate) show less hippocampal pattern similarity, has now been observed in a wide range of studies (e.g. Favila et al., 2016; Schlichting et al., 2015; Molitor et al., 2021; Chanales et al., 2017; Dimsdale-Zucker et al., 2018; Wanjia et al., 2021; Zeithamova et al., 2018; Jiang et al., 2020; Fernandez et al., 2023; Wammes et al., 2022).

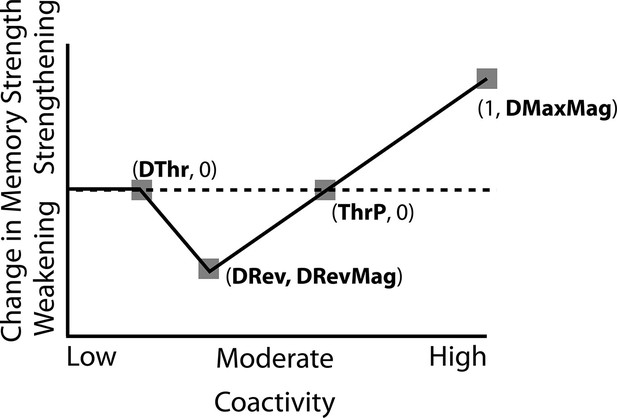

Nonmonotonic plasticity hypothesis

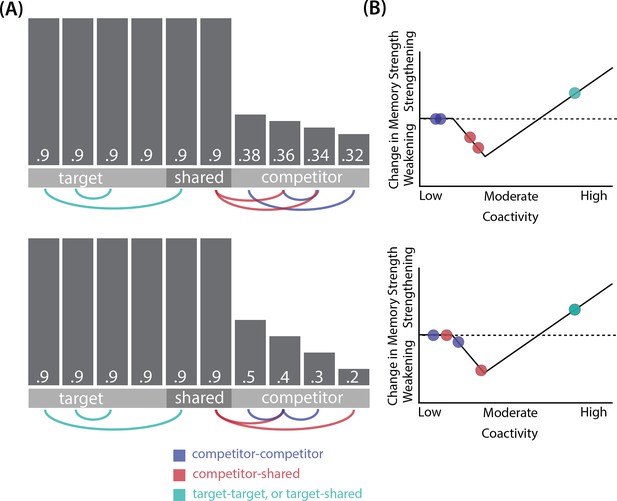

How can we make sense of these findings? Supervised learning algorithms cannot explain the aforementioned results on their own, so they need to be supplemented by other learning principles. We previously argued (Ritvo et al., 2019) that learning algorithms positing a U-shaped relationship between neural activity and synaptic weight change — where low levels of activity at retrieval lead to no change, moderate levels of activity lead to synaptic weakening, and high levels of activity lead to synaptic strengthening — may be able to account for these results; the Bienenstock-Cooper-Munro (BCM) learning rule (Bienenstock et al., 1982; Cooper, 2004) is the most well-known learning algorithm with this property, but other algorithms with this property have also been proposed (Norman et al., 2006; Diederich and Opper, 1987). We refer to the U-shaped learning function posited by this type of algorithm as the nonmonotonic plasticity hypothesis (NMPH; Detre et al., 2013; Newman and Norman, 2010).

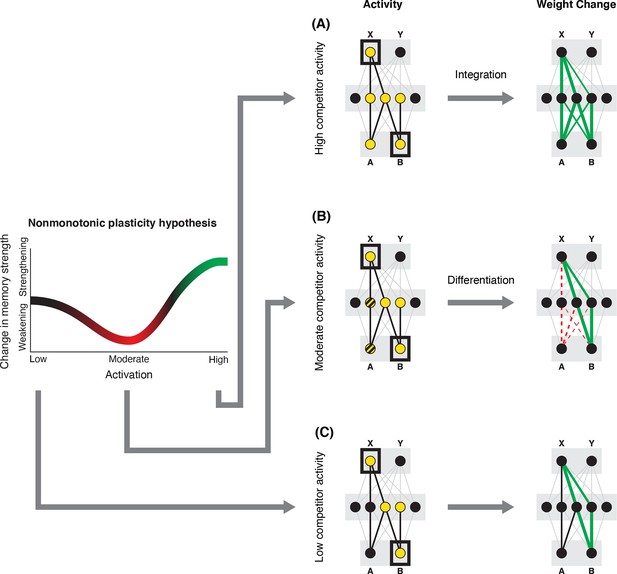

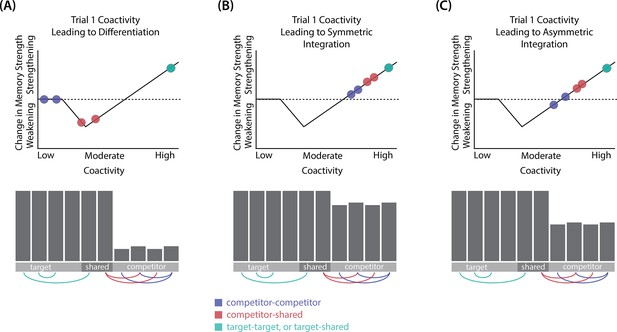

In addition to explaining how individual memories get stronger and weaker, the NMPH also explains how memory representations change with respect to each other as a function of competition during retrieval (Hulbert and Norman, 2015; Norman et al., 2006). If the activity of a competing memory is low while retrieving a target memory (Figure 1C), the NMPH predicts no representational change. If competitor activity is moderate (Figure 1B), the connections to the shared units will be weakened, leading to differentiation. If competitor activity is high (Figure 1A), the connections to the shared units will be strengthened, leading to integration. Consequently, the NMPH may provide a unified explanation for the divergent studies discussed above: Depending on the amount of excitation allowed to spread to the competitor (which may be affected by task demands, neural inhibition levels, and the similarity of stimuli, among other things), learning may result in no change, differentiation, or integration (for further discussion of the empirical justification for the NMPH, see the Learning subsection in the Methods).

Nonmonotonic plasticity hypothesis: A has been linked to X, and B has some initial hidden-layer overlap with A.

In this network, activity is allowed to spread bidirectionally. When B is presented along with X (corresponding to a BX study trial), activity can spread downward from X to the hidden-layer units associated with A, and also — from there — to the input-layer representation of A. (A) If activity spreads strongly to the input and hidden representations of A, integration of A and B occurs due to strengthening of connections between all of the strongly activated features (green connections indicate strengthened weights; AB integration can be seen by noting the increase in the number of hidden units receiving projections from both A and B). (B) If activity spreads only moderately to the input and hidden representations of A, differentiation of A and B occurs due to weakening of connections between the moderately activated features of A and the strongly activated features of B (green and red connections indicate weights that are strengthened and weakened, respectively; AB differentiation can be seen by noting the decrease in the number of hidden units receiving strong connections from both A and B — in particular, the middle hidden unit no longer receives a strong connection from A). (C) If activity does not spread to the features of A, then neither integration nor differentiation occurs. Note that the figure illustrates the consequences of differences in competitor activation for learning, without explaining why these differences would arise. For discussion of circumstances that could lead to varying levels of competitor activation, see the simulations described in the text.

© 2019, Elsevier Science & Technology Journals. Figure 1 was reprinted from Figure 2 of Ritvo et al., 2019 with permission. It is not covered by the CC-BY 4.0 license and further reproduction of this panel would need permission from the copyright holder.

Research goal

In this paper, we present a neural network model that instantiates the aforementioned NMPH learning principles, with the goal of assessing how well these principles can account for extant data on when differentiation and integration occur. In Ritvo et al., 2019, we provided a sketch of how certain findings could potentially be explained in terms of the NMPH. However, intuitions about how a complex system should behave are not always accurate in practice, and verbally stated theories can contain ambiguities or internal contradictions that are only exposed when building a working model. Building a model also allows us to generate more detailed predictions (i.e. we can use the model to see what follows from a core set of principles). Relatedly, there are likely boundary conditions on how a model behaves (i.e. it will show a pattern of results in some conditions but not others). Here, we use the model to characterize these boundary conditions, which (in turn) can be translated into new, testable predictions.

We use the model to simulate three experiments: (1) Chanales et al., 2021, which looked at how the amount of stimulus similarity affected the distortion of color memories; (2) Favila et al., 2016, which looked at representational change for items that were paired with the same or different associates; and (3) Schlichting et al., 2015, which looked at how the learning curriculum (whether pairs were presented in a blocked or interleaved fashion) modulated representational change in different brain regions. We chose these three experiments because each reported that similar stimuli (neurally) differentiate or (behaviorally) are remembered as being less similar than they actually are, and because they allow us to explore three different ways in which the amount of competitor activity can be modulated.

The model can account for the results of these studies, and it provides several novel insights. Most importantly, the model shows that differentiation must happen quickly (or it will not happen at all), and that representational change effects are often asymmetric (i.e. one item’s representation changes but the other stays the same).

The following section provides an overview of general properties of the model. Later sections describe how we implemented the model separately for the three studies.

Basic network properties

We set out to build the simplest possible model that would allow us to explore the role of the NMPH in driving representational change. The model was constructed such that it only used unsupervised, U-shaped learning and not supervised learning. Importantly, we do not think that the unsupervised, U-shaped learning function is a replacement for error-driven learning; instead, we view it as a supplementary tool the brain uses to reduce interference of competitors (Ritvo et al., 2019). Nonetheless, we intentionally omitted supervised learning in order to explore whether unsupervised, U-shaped learning on its own would be sufficient to account for extant findings on differentiation and integration. Achieving a better understanding of unsupervised learning is an important goal for computational neuroscience, given that learning agents have vastly more opportunities to learn in an unsupervised fashion than from direct supervision (for additional discussion of this point, see, e.g., Zhuang et al., 2021).

Because we were specifically interested in the way competition affects learning, we decided to focus on the key moment after the memories have been formed, when they first come into competition with each other. Consequently, we pre-wired the initial connections into the network for each stimulus, rather than allowing the connections to self-organize through learning (see Methods for details). Doing so meant we could control the exact level of competition between pairmates. We then used the model to simulate different studies, with the goal of assessing how different manipulations that affect competitor activity modulate representational change. In the interest of keeping the simulations simple, we modeled a single task from each study rather than modeling all of them comprehensively. Our goal was to qualitatively fit key patterns of results from each of the aforementioned studies. We fit the parameters of the model by hand as they are highly interdependent (see the Methods section for more details).

Model architecture

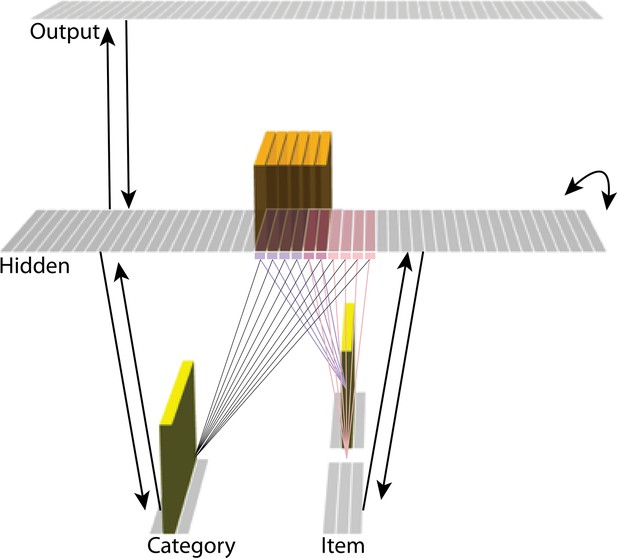

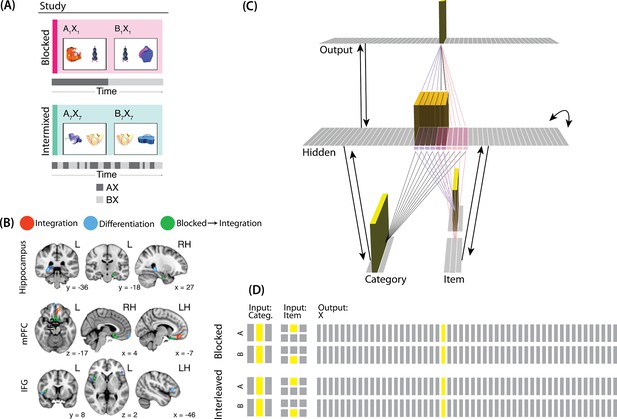

The model was built using the Emergent simulation framework (Aisa et al., 2008). All versions of the model have the same basic architecture (Figure 2; see the following sections for how the model was adapted for each version, and the Methods section for the details of all parameters).

Basic network architecture: The characteristics of the model common to all versions we tested, with hidden- and input-layer activity for pairmate A.

Black arrows indicate projections common to all versions of the model. All projections were fully connected. Pre-wired, stronger connections between the input A unit (top row of item layer) and hidden A units (purple) are shown, and pre-wired, stronger connections between the input B unit (bottom row of item layer) and hidden B units (pink) are shown. The category unit is pre-wired to connect strongly to all hidden A and B units. Hidden A units have strong connections to other hidden A units (not shown); the same is true for hidden B units. Pre-wired, stronger connections also exist between hidden and output layers (not shown). The arrangement of these hidden-to-output connections varies for each version of the model.

We wanted to include the minimal set of layers needed to account for the data. Importantly, the model is meant to be as generic as possible, so none of the layers are meant to correspond to a particular brain region (we discuss possible anatomical correspondences in the Discussion section). With those points in mind, we built the model to include four layers: category, item, hidden, and output. The category and item layers are input layers that represent the sensory or semantic features of the presented stimuli; generally speaking, we use the category layer to represent features that are shared across multiple stimuli, and we use the item layer to represent features that are unique to particular stimuli. The hidden layer contains the model’s internal representation of these stimuli, and the output layer represents either additional sensory features of the input stimulus, or else other stimuli that are associated with the input stimulus. In all models, the category and item layers are bidirectionally connected to the hidden layer; the hidden layer is bidirectionally connected to the output layer; and the hidden layer also has recurrent connections back to itself. All of these connections are modifiable through learning. Note that the hidden layer has modifiable connections to all layers in the network (including itself), which makes it ideally positioned to bind together the features of individual stimuli.

Each version of the model learns two pairmates, which are stimuli (binary vectors) represented by units in the input layers. The presentation of a stimulus involves activating a single unit in each of the input layers (i.e. the category and item layers). Pairmates share the same unit in the category layer, but differ in their item-layer unit.

We labeled the item-layer units such that the unit in the top row was associated with pairmate A and the unit in the bottom row was associated with pairmate B. Since either pairmate could be shown first, we refer to them as pairmate 1 or 2 when the order is relevant: Pairmate 1 is whichever pairmate is shown on the first trial and pairmate 2 is the item shown second.

All projections as described above have weak, randomly-sampled weight values, but we additionally pre-built some structured knowledge into the network. The item-layer input units have pre-wired, stronger connections to a selection of six pre-assigned ‘pairmate A’ hidden units and six pre-assigned ‘pairmate B’ units. The A and B hidden representations overlap to some degree (for instance, with two units shared, as shown in Figure 2). We decided to arrange the hidden layer along a single dimension so that the amount of overlap could be easily visualized and interpreted.

Hidden units representing each individual item start out strongly interconnected (that is, the six pairmate A units are linked together by maximally strong recurrent connections, as are the six pairmate B units). We also pre-wired some stronger connections between these hidden A and B units and the output units, but the setup of the hidden-to-output pre-wired connections depends on the modeled experiment.
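The pre-wiring described above can be sketched in a few lines. This is a minimal illustration and not the Emergent implementation: the function name `prewire`, the hidden-layer size of 30, the weight values (`strong=1.0`, `weak_max=0.1`), and the choice to leave self-connections weak are all assumptions made for the example.

```python
import random

def prewire(n_hidden, a_units, b_units, strong=1.0, weak_max=0.1, seed=0):
    """Return (item_to_hidden, hidden_to_hidden) weights as nested lists.

    All values here are hypothetical; the paper's actual parameters are
    given in its Methods section.
    """
    rng = random.Random(seed)

    def weak():
        # Weak, randomly sampled background weight.
        return rng.uniform(0.0, weak_max)

    # Row 0 is the item-layer unit for pairmate A, row 1 the unit for B.
    item_to_hidden = [[weak() for _ in range(n_hidden)] for _ in range(2)]
    for row, units in enumerate((a_units, b_units)):
        for h in units:
            item_to_hidden[row][h] = strong

    # Recurrent hidden weights: weak background, strong links among the
    # hidden units that belong to the same pairmate.
    hidden_to_hidden = [[weak() for _ in range(n_hidden)] for _ in range(n_hidden)]
    for units in (a_units, b_units):
        for i in units:
            for j in units:
                if i != j:  # self-connections stay weak (an assumption)
                    hidden_to_hidden[i][j] = strong
    return item_to_hidden, hidden_to_hidden

a_units = list(range(10, 16))  # six hidden units for pairmate A
b_units = list(range(14, 20))  # six for pairmate B; units 14 and 15 are shared
item_w, rec_w = prewire(n_hidden=30, a_units=a_units, b_units=b_units)
```

In this sketch, the two shared units (14 and 15) receive strong input from both item units, which is what lets activity spread from one pairmate to the other.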

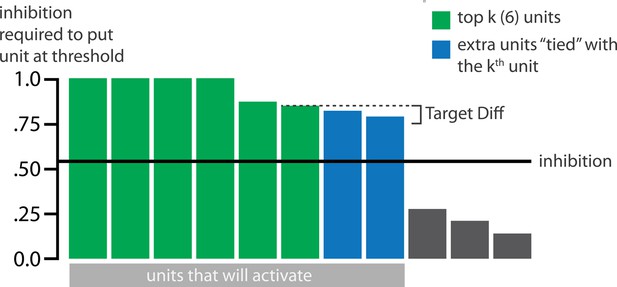

Inhibitory dynamics

Within a layer, inhibitory competition between units was enforced through an adapted version of the k-winners-take-all (kWTA) algorithm (O’Reilly and Munakata, 2000; see Methods) which limits the amount of activity in a layer to at most k units. The kWTA algorithm provides a useful way of capturing the ‘set-point’ quality of inhibitory neurons without requiring the inclusion of these neurons directly.
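To make the idea of a k-unit activity limit concrete, the sketch below shows a simplified, hard version of kWTA. It is not the adapted algorithm used in the model (which is described in the Methods); the function name `kwta` and the rule of silencing every unit outside the top k are assumptions for illustration only.

```python
def kwta(net_inputs, k):
    """Simplified hard k-winners-take-all (illustrative assumption).

    Keeps the k most strongly excited units and sets the rest to zero, so
    at most k units remain active in the layer.
    """
    if k <= 0:
        return [0.0] * len(net_inputs)
    # Rank unit indices from most to least excited.
    ranked = sorted(range(len(net_inputs)), key=lambda i: net_inputs[i], reverse=True)
    winners = set(ranked[:k])
    # Units with non-positive input stay off even if they rank in the top k.
    return [x if (i in winners and x > 0) else 0.0 for i, x in enumerate(net_inputs)]

layer_activity = kwta([0.9, 0.1, 0.5, 0.7], k=2)
```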

The main method we use to allow activity to spread to the competitor is through inhibitory oscillations. Prior work has argued that inhibitory oscillations could play a key role in this kind of competition-dependent learning (Norman et al., 2006; Norman et al., 2007; Singh et al., 2022). Depending on how much excitation a competing memory is receiving, lowering the level of inhibition can allow competing memories that are inactive at baseline levels of inhibition to become moderately active (causing their connections to the target memory to be weakened) or even strongly active (causing their connections to the target memory to be strengthened). Our model implements oscillations through a sinusoidal function which lowers and raises inhibition over the course of the trial, allowing competitors to ‘pop up’ when inhibition is lower.
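The effect of a sinusoidal inhibitory oscillation can be illustrated with a toy calculation. The sketch below assumes that a unit's activity is simply its excitation minus the current inhibition (floored at zero), that inhibition completes one sine cycle per trial, and that the baseline and amplitude take the hypothetical values shown; none of these specifics come from the paper.

```python
import math

def inhibition_at(step, n_steps, baseline=0.5, amplitude=0.3):
    """Inhibition level at one time step of a trial (one sine cycle per trial)."""
    phase = 2.0 * math.pi * step / n_steps
    return baseline + amplitude * math.sin(phase)

def activity_over_trial(excitation, n_steps=20, baseline=0.5, amplitude=0.3):
    """Toy activity trace: excitation minus inhibition, floored at zero."""
    return [
        max(0.0, excitation - inhibition_at(s, n_steps, baseline, amplitude))
        for s in range(n_steps)
    ]

target = activity_over_trial(excitation=0.9)      # strongly driven memory
competitor = activity_over_trial(excitation=0.4)  # moderately excited competitor
unrelated = activity_over_trial(excitation=0.1)   # weakly excited memory
```

Under these assumptions the target stays active throughout the trial, the competitor is silent at baseline inhibition but becomes moderately active when inhibition dips in the second half of the trial, and the weakly excited memory never activates.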

Learning

Connection strengths in the model between pairs of connected units were adjusted at the end of each trial (i.e. after each stimulus presentation) as a U-shaped function of the coactivity of the two units, defined as the product of their activations on that trial. The parameters of the U-shaped learning function relating coactivity to change in connection strength (i.e. weakening / strengthening) were specified differently for each projection where learning occurs (bidirectionally between the input and hidden layers, from the hidden layer to itself, and from the hidden layer to the output layer). Once the U-shaped learning function for each projection in each version of the model was specified, we did not change it for any of the various conditions. Details of how we computed coactivity and how we specified the U-shaped function can be found in the Methods section.
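A minimal sketch of this kind of update is given below. The piecewise-linear shape, the thresholds (`low`, `dip`, `high`), the learning rate, and the clipping of weights to the range 0 to 1 are hypothetical choices made for illustration; the actual function and its per-projection parameters are specified in the Methods.

```python
def u_shaped_change(coactivity, low=0.2, dip=0.45, high=0.7):
    """Hypothetical piecewise-linear U-shaped function of coactivity.

    Returns 0 below `low`, falls to -1 at `dip`, returns to 0 at `high`,
    and rises to +1 as coactivity approaches 1.
    """
    if coactivity <= low:
        return 0.0
    if coactivity <= dip:
        return -(coactivity - low) / (dip - low)
    if coactivity <= high:
        return -(high - coactivity) / (high - dip)
    return (coactivity - high) / (1.0 - high)

def update_projection(weights, sender_acts, receiver_acts, lrate=0.1):
    """Return updated weights[i][j] for the connection from sender i to receiver j."""
    new_weights = []
    for i, s in enumerate(sender_acts):
        row = []
        for j, r in enumerate(receiver_acts):
            coactivity = s * r  # product of the two units' activations
            w = weights[i][j] + lrate * u_shaped_change(coactivity)
            row.append(min(1.0, max(0.0, w)))  # clip to [0, 1] (an assumption)
        new_weights.append(row)
    return new_weights

# One sender at full activity, three receivers at low, moderate, and high activity.
updated = update_projection([[0.5, 0.5, 0.5]], [1.0], [0.1, 0.45, 1.0])
```

In this example, the connection to the weakly active receiver is unchanged, the connection to the moderately active receiver is weakened, and the connection to the strongly active receiver is strengthened.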

Competition

Constructing the network in this way allows us to precisely control the amount of competition between pairmates. There are several ways besides the amplitude of oscillations to alter the amount of excitation spreading to the competitor. For instance, competitor activity could be modulated by altering the pre-wired weights to force the hidden-layer representations for A and B to share more units. Each version of the model (for each experiment) relies on a different method to modulate the amount of competitor activity.

Model of Chanales et al., 2021: repulsion and attraction of color memories

Key experimental findings

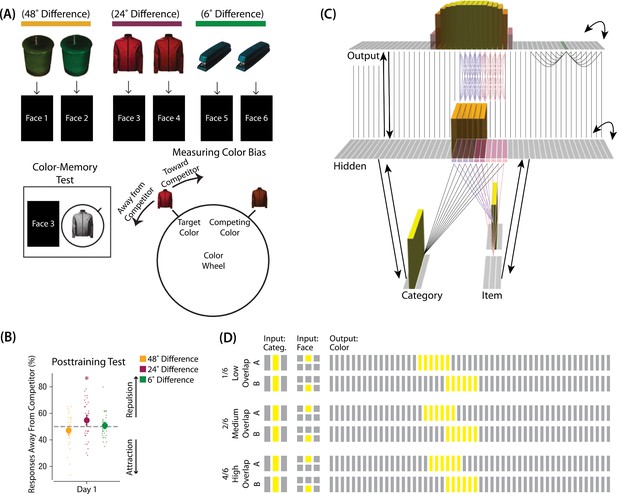

The first experiment we modeled was Chanales et al., 2021. The study was inspired by recent neuroimaging studies showing ‘similarity reversals’, wherein stimuli that have more features in common (or share a common associate) show less hippocampal pattern similarity (Favila et al., 2016; Schlichting et al., 2015; Molitor et al., 2021; Chanales et al., 2017; Dimsdale-Zucker et al., 2018; Wanjia et al., 2021; Zeithamova et al., 2018; Jiang et al., 2020; Wammes et al., 2022). Chanales et al., 2021 tested whether a similar ‘repulsion’ effect is observed with respect to how the specific features of competing events are retrieved. In their experiments, participants learned associations between objects and faces (Figure 3A). Specifically, participants studied pairs of objects that were identical except for their color value; each of these object pairmates was associated with a unique face. Participants’ memory was tested in several ways; in one of these tests — the color recall task — the face was shown as a cue alongside the colorless object, and participants were instructed to report the color of the object on a continuous color wheel.

Modeling Chanales et al., 2021.

(A) Participants in Chanales et al., 2021 learned to associate objects and faces (faces not shown here due to bioRxiv rules). The objects consisted of pairs that were identical except for their color value, and the difference between pairmate color values was systematically manipulated to adjust competition. Color memory was tested in a task where the face and colorless object were given as a cue, and participants had to use a continuous color wheel to report the color of the object. Color reports could be biased toward (+) or away from (-) the competitor. (B) When color similarity was low (48°), color reports were accurate. When color similarity was raised to a moderate level (24°), repulsion occurred, such that color reports were biased systematically away from the competitor. When color similarity was raised further (6°), the repulsion effect was eliminated. (C) To model this study, we used the network structure described in Basic Network Properties, with the following modifications: This model additionally has a non-modifiable recurrent projection in the output layer, to represent the continuous nature of the color space: Each output unit was pre-wired with fixed, maximally strong weights connecting it to the seven units on either side of it (one such set of connections is shown to the output unit colored in green); background output-to-output connections (outside of these seven neighboring units) were set up to be fixed, weak, and random. The hidden layer additionally was initialized to have maximally strong (but learnable) one-to-one connections with units in the output layer, thereby ensuring that the color topography of the output layer was reflected in the hidden layer. Each of the six hidden A units was connected in an all-to-all fashion to the six pre-assigned A units in the output layer via maximally strong, pre-wired weights (purple lines). The same arrangement was made for the hidden B units (pink lines). Other connections between the output and hidden layers were initialized to lower values. In the figure, activity is shown after pairmate A is presented; the recurrent output-to-output connections let activity spread to units on either side. (D) We included six conditions in this model, corresponding to different numbers of shared units in the hidden and output layers. Three conditions are shown here. The conditions are labeled by the number of hidden/output units shared by A and B. Thus, one unit is shared by A and B in 1/6, two units are shared by A and B in 2/6, and so on. Increased overlap in the hidden and output layers is meant to reflect higher levels of color similarity in the experiment. We included overlap types from 0/6 to 5/6.

© 2021, Sage Publications. Figure 3A was adapted from Figures 1 and 3 of Chanales et al., 2021 with permission, and Figure 3B was adapted from Figure 3 of Chanales et al., 2021 with permission. These panels are not covered by the CC-BY 4.0 license and further reproduction of these panels would need permission from the copyright holder.

Chanales et al., 2021 found that, for low levels of pairmate color similarity (i.e. 72° and 48° color difference), participants were able to recall the colors accurately when cued with a face and an object. However, when color similarity was increased (i.e. 24° color difference), the color reports were biased away from the pairmate, leading to a repulsion effect. For instance, if the pairmates consisted of a red jacket and a red-but-slightly-orange jacket, repulsion would mean that the slightly-orange jacket would be recalled as less red and more yellow than it actually was. When the color similarity was increased even further (i.e. 6° color difference), the repulsion effects were eliminated (Figure 3B). For a related result showing repulsion with continuously varying face features (gender, age) instead of color, see Drascher and Kuhl, 2022.

Potential NMPH explanation

Typical error-driven learning would not predict this outcome, because it would adjust weights to align the guess more closely with the true outcome, leading to no repulsion effects. In contrast, the NMPH potentially explains the results of this study well; as the authors note, “the relationship between similarity and the repulsion effect followed an inverted-U-shape function, suggesting a sweet spot at which repulsion occurs” (Chanales et al., 2021).

The amount of color similarity provides a way of modulating the amount of excitation that can flow to the competitor’s neural representation. When color similarity is lower (i.e. 72° and 48°), the competing pairmate is less likely to come to mind. Consequently, the competitor’s activity will be on the low/left side of the U-shaped function (Figure 1C). No weakening or strengthening will occur for the competitor, and both pairmate representations remain intact.

However, when color similarity is higher (i.e. 24°), the additional color overlap means the memories compete more. When the red jacket is shown, the competing red-but-slightly-orange jacket may come to mind moderately, so it falls in the dip of the U-shaped function. If this occurs, the NMPH predicts that the two pairmates will show differentiation, resulting in repulsion in color space.

When color similarity was highest (i.e. 6° color difference), the repulsion effect was eliminated. The NMPH could explain this result in terms of the competitor getting so much excitation from the high similarity that it falls on the high/right side of the U-shaped function, in the direction of integration (or ‘attraction’ for the behavioral reports of color). Chanales et al., 2021 did not observe an attraction effect, but this can be explained in terms of the similarity of the pairmates in this condition (at 6° of color separation, there is a limit on how much more similar the color reports could become, given the precision of manual responses).

Model set-up

Model architecture

To model this task (Figure 3C), we used the two input layers (i.e. category and item) to represent the colorless object and face associates, respectively. To represent a particular stimulus, we activated a single unit in each of the input layers; pairmates share the same unit in the object layer (i.e. ‘jacket’), but differ in the unit for the face layer (i.e. ‘face-for-jacket-A’ and ‘face-for-jacket-B’). The output layer represents the color-selective units. Units that are closer to each other can be thought of as representing colors that are more similar to each other.

Knowledge built into the network

As described in Basic Network Properties, each of the two input face units is pre-wired to connect strongly to the six corresponding hidden units (either the six hidden A units, or the six hidden B units). In this version of our model, we added several extra pre-wired connections, specifically, recurrent output-to-output connections (although we did not include learning for this projection). Neighboring units were pre-wired to have stronger connections, to instantiate the idea that color is represented in a continuous way in the brain (Hanazawa et al., 2000; Komatsu et al., 1992).

The six hidden A units were connected to the corresponding six units in the output layer in an all-to-all fashion via maximally strong weights (such that each A hidden unit was connected to each A output unit); an analogous arrangement was made for the units representing pairmate B. Additionally, each non-pairmate unit in the hidden layer was pre-wired to have a maximally strong connection with the unit directly above it in the output layer. Arranging the units in this way (so the hidden layer matches the topography of the output layer) makes it easier to interpret the types of distortion that occur in the hidden layer.

Manipulation of competitor activity

In this experiment, competition is manipulated through the level of color similarity in each condition. We operationalized this by adjusting the level of overlap between the hidden (and output) color A and B units. One advantage of modeling is that, because there is no constraint on experiment length, we were able to sample a wider range of overlap types than the actual study, which was limited to three conditions per experiment. Instead, we used six overlap conditions in the model. For all conditions, the two pairmates were each assigned six units in the hidden layer. However, for the different overlap conditions, we pre-wired the weights from the input layers to the hidden layer so that the two pairmates differed in the number of hidden-layer units that were shared (note that this overlap manipulation was also reflected in the output layer, because of the pre-wired connections between the hidden and output layers described above).

We labeled the six conditions based on the number of overlapping units between the pairmates (which varied) and the number of total units per pairmate (which was always six): 0/6 overlap means that the two pairmates are side-by-side but share zero units; 1/6 overlap means they share one unit out of six each; 2/6 overlap means they share two units out of six each, and so on.
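The labeling scheme can be made concrete with a short sketch that lists which units each pairmate occupies in a given overlap condition. The function name `pairmate_units` and the choice to start pairmate A at unit 0 are assumptions for illustration; only the six-units-per-pairmate layout and the 0/6 to 5/6 conditions come from the text.

```python
def pairmate_units(n_shared, units_per_item=6, start=0):
    """Return (a_units, b_units) for an overlap condition such as 2/6.

    Pairmate A occupies `units_per_item` adjacent units; pairmate B is shifted
    so that its first `n_shared` units coincide with A's last `n_shared` units.
    """
    if not 0 <= n_shared < units_per_item:
        raise ValueError("n_shared must be between 0 and units_per_item - 1")
    a_units = list(range(start, start + units_per_item))
    b_start = start + units_per_item - n_shared
    b_units = list(range(b_start, b_start + units_per_item))
    return a_units, b_units

# Build all six conditions, keyed by labels such as "2/6".
conditions = {f"{n}/6": pairmate_units(n) for n in range(6)}
```

For example, `conditions["0/6"]` places the two pairmates side by side with no shared units, and `conditions["2/6"]` gives A units 0 to 5 and B units 4 to 9, so units 4 and 5 are shared.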

Task

The task simulated in the model is a simplified version of the paradigm used in Chanales et al., 2021. Specifically, we focused on the color recall task, where the colorless object was shown alongside the face cue, and the participant had to report the object’s color on a color wheel. To model this, the external input is clamped to the network (object and face units), and activity is allowed to flow through the hidden layer to the color layer so the network can make a color ‘guess’. We ran a test epoch after each training epoch so we could track the representations of pairmates A and B over time.

As described in Basic Network Properties, inhibitory oscillations allow units that are inactive at baseline levels of inhibition to ‘pop up’ toward the end of the trial. This is important for allowing potential competitor units to activate. There is no ‘correct’ color shown to the network, and all learning is based purely on an unsupervised U-shaped learning rule that factors in the coactivity of presynaptic and postsynaptic units (see Methods for parameter details).
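The shape of a U-shaped learning rule can be sketched as a piecewise-linear function of coactivity. The breakpoints and magnitudes below are placeholders chosen only for illustration (the actual parameter values are given in the Methods), and treating coactivity as the product of presynaptic and postsynaptic activity is an assumption of this sketch.

```python
import numpy as np

# All values below are illustrative placeholders, not the model's parameters.
LOW_THRESH = 0.2      # below this coactivity: no weight change
DIP_CENTER = 0.4      # coactivity at which weakening is maximal
CROSSOVER = 0.6       # above this coactivity: strengthening
MAX_WEAKEN = -1.0     # weight change at the bottom of the dip
MAX_STRENGTHEN = 1.0  # weight change at maximal coactivity


def coactivity(pre, post):
    """Assumption: coactivity is the product of the two unit activities."""
    return pre * post


def u_shaped_dw(coact):
    """Piecewise-linear weight change as a function of coactivity.

    Low coactivity: no change. Moderate: weakening. High: strengthening.
    """
    xs = [0.0, LOW_THRESH, DIP_CENTER, CROSSOVER, 1.0]
    ys = [0.0, 0.0, MAX_WEAKEN, 0.0, MAX_STRENGTHEN]
    return np.interp(coact, xs, ys)


for coact in (0.1, 0.4, 0.9):
    print(f"coactivity={coact:.1f} -> weight change={u_shaped_dw(coact):+.2f}")
```

Under these placeholder values, an inactive competitor is left alone, a moderately active one has its connections weakened, and a strongly active one has them strengthened.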

Results

Effects of color similarity on color recall and neural representations

Chanales et al., 2021 showed that, as color similarity was raised, a repulsion effect occurred where the colors of pairmates were remembered as being less similar to each other than they were in reality. When color similarity was raised even further, this repulsion effect went away. In our model, we expected to find a similar U-shaped pattern as hidden-layer overlap increased.

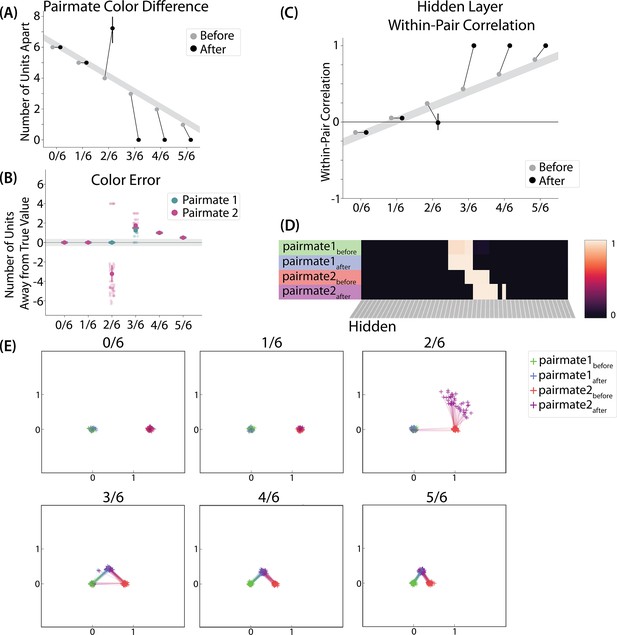

To measure repulsion, we operationalized the color memory ‘report’ as the center-of-mass of activity in the color output layer at test. Because the topography of the output layer is meaningful for this version of the model (with nearby units representing similar colors), we could measure color attraction and repulsion by the change in the distance between the centers-of-mass (Figure 4A): If A and B undergo attraction after learning, the distance between the color centers-of-mass should decrease. If A and B undergo repulsion, the distance should increase.
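The center-of-mass measure can be written down in a few lines. This is a hedged sketch that assumes unit positions are simply the unit indices along the output layer; the function names and the 24-unit layer in the example are illustrative, not taken from the simulation code.

```python
import numpy as np


def center_of_mass(activity):
    """Activity-weighted mean position (in unit indices) along the output layer."""
    activity = np.asarray(activity, dtype=float)
    total = activity.sum()
    if total == 0:
        raise ValueError("no active units")
    positions = np.arange(activity.size)
    return float((positions * activity).sum() / total)


def distance_change(a_before, b_before, a_after, b_after):
    """Change in the A-B center-of-mass distance across learning.

    Positive values indicate repulsion; negative values indicate attraction.
    """
    before = abs(center_of_mass(a_before) - center_of_mass(b_before))
    after = abs(center_of_mass(a_after) - center_of_mass(b_after))
    return after - before


# Toy example (assumed 24-unit output layer): B shifts two units away from A.
n_out = 24
a = np.zeros(n_out); a[10:16] = 1.0
b = np.zeros(n_out); b[14:20] = 1.0
b_shifted = np.zeros(n_out); b_shifted[16:22] = 1.0
print(distance_change(a, b, a, b_shifted))  # positive value: repulsion
```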

Model of Chanales et al., 2021 results.

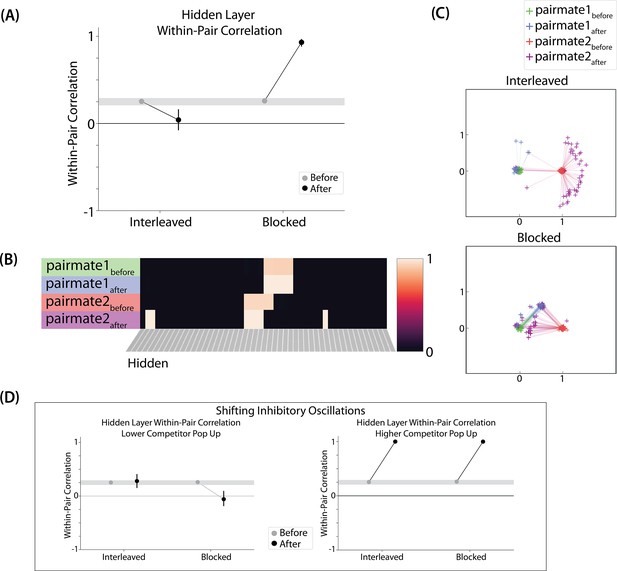

(A) The distance (number of units apart) between the centers-of-mass of output activity for A and B is used to measure repulsion vs. attraction. The gray bar indicates what the color difference would be after learning if no change happens from before (gray dots) to after (black dots) learning; above the gray bar indicates repulsion and below indicates attraction. For lower levels of overlap (0/6 and 1/6), color distance remains unchanged. For a medium level of overlap (2/6), repulsion occurs, shown by the increase in the number of units between A and B. For higher levels of overlap (3/6, 4/6, and 5/6), attraction occurs, shown by the decrease in the number of units between A and B. (B) Color error (output-layer distance between the ‘guess’ and ‘correct’ centers-of-mass) is shown for each pairmate and condition (negative values indicate repulsion). When repulsion occurs (2/6), the change is driven by a distortion of pairmate 2, whereas pairmate 1 is unaffected. (C) Pairmate similarity is measured by the correlation of the hidden-layer patterns before and after learning. Here, above the gray line indicates integration and below indicates repulsion. The within-pair correlation decreases (differentiation) when competitor overlap is moderate (2/6). Within-pair correlation increases (integration) when competitor overlap is higher (3/6, 4/6, and 5/6). (D) Four hidden-layer activity patterns from a sample run in the 2/6 condition are shown: pairmate 1before, pairmate 1after, pairmate 2before, and pairmate 2after. The subscripts refer to the state of the memory before/after the learning that occurs in the color recall task; pairmate 1 designates the first of the pairmates to be presented during color recall. Brighter colors indicate the unit is more active. In this run, pairmate 1 stays in place and pairmate 2 distorts away from pairmate 1. 
(E) Multidimensional scaling (MDS) plots for each condition are shown, to illustrate the pattern of representational change in the hidden layer. The same four patterns as in D are plotted for each run. MDS plots were rotated, shifted, and scaled such that pairmate 1before is located at (0,0), pairmate 2before is located directly to the right of pairmate 1before, and the distance between pairmate 1before and pairmate 2before is proportional to the baseline distance between the pairmates. A jitter was applied to all points. Asymmetry in distortion can be seen in 2/6 by the movement of pairmate 2after away from pairmate 1. In conditions that integrate, most runs lead to symmetric distortion, although some runs in the 3/6 condition lead to asymmetric integration, where pairmate 2 moves toward pairmate 1before. For panels A, B, and C, error bars indicate the 95% confidence interval around the mean (computed based on 50 model runs).

We found no difference in the color center-of-mass before and after training in the 0/6 and 1/6 conditions. As similarity increased to the 2/6 condition, the distance between the centers-of-mass increased after learning, indicating repulsion. When similarity increased further to the 3/6, 4/6, and 5/6 conditions, the distance between the centers-of-mass decreased with learning, indicating attraction. The overall pattern of results here mirrors what was found in the Chanales et al., 2021 study: Low levels of color similarity were associated with no change in color perception, moderate levels of color similarity were associated with repulsion, and the repulsion effect went away when color similarity increased further.

The only salient difference between the simulation results and the experiment results is that our repulsion effects cross over into attraction for the highest levels of similarity, whereas Chanales et al., 2021 did not observe attraction effects. It is possible that Chanales et al., 2021 would have observed an attraction effect if they had sampled the color similarity space more densely (i.e. somewhere between 24°, where they observed repulsion, and 6°, where they observed neither attraction nor repulsion). As a practical matter, it may have been difficult for Chanales et al., 2021 to observe attraction in the 6° condition given that color similarity was so high to begin with (i.e. there was no more room for the objects to ‘attract’).

We also measured representational change in the model’s hidden layer, by computing the Pearson correlation of the patterns of activity evoked by A and B in the hidden layer from before to after learning in the color recall test. If no representational change occurred, then this within-pair correlation should not change over time. In contrast, differentiation (or integration) would result in a decrease (or increase) in within-pair correlation over time.
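As a minimal sketch of this measure, the within-pair correlation and its change across learning can be computed with `numpy.corrcoef`. The binary patterns and the 50-unit layer in the example are assumptions made for illustration; in the model the hidden-layer activities are graded.

```python
import numpy as np


def within_pair_correlation(pattern_a, pattern_b):
    """Pearson correlation between the hidden-layer patterns evoked by A and B."""
    return float(np.corrcoef(pattern_a, pattern_b)[0, 1])


def representational_change(a_before, b_before, a_after, b_after):
    """After-minus-before within-pair correlation.

    Negative values indicate differentiation; positive values indicate integration.
    """
    before = within_pair_correlation(a_before, b_before)
    after = within_pair_correlation(a_after, b_after)
    return after - before


# Toy example (assumed 50-unit hidden layer, binary activity).
n_hidden = 50
a = np.zeros(n_hidden); a[10:16] = 1.0
b = np.zeros(n_hidden); b[14:20] = 1.0              # shares two units with A
b_after = np.zeros(n_hidden); b_after[16:22] = 1.0  # no longer shares units with A
print(representational_change(a, b, a, b_after))    # negative: differentiation
```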

We found that representational change tracked the repulsion/attraction results (Figure 4C): Lower overlap (i.e. 0/6 and 1/6), which did not lead to any change in color recall, also did not lead to change in the hidden layer; moderate overlap (i.e. 2/6), which showed repulsion in color recall, also showed a decrease in within-pair correlation (differentiation); and higher overlap (3/6, 4/6, and 5/6), which showed attraction in color recall, also showed an increase in within-pair correlation (integration). The association between behavioral repulsion/attraction and neural differentiation/integration that we observed in the model aligns well with results from Zhao et al., 2021. They used a similar paradigm to Chanales et al., 2021 and found that the level of distortion of color memories was predicted by the amount of neural differentiation for those pairmates in parietal cortex (Zhao et al., 2021).

Crucially, we can inspect the model to see why it gives rise to the pattern of results outlined above. In the low-overlap conditions (0/6, 1/6), the competitor did not activate enough during target recall to trigger any competition-dependent learning (see Video 1). When color similarity increased in the 2/6 condition, the competitor pop-up during target recall was high enough for co-activity between shared units and unique competitor units to fall into the dip of the U-shaped function, severing the connections between these units. On the next trial, when the competitor was presented, the unique parts of the competitor activated in the hidden layer but — because of the severing that occurred on the previous trial — the formerly shared units did not. Because the activity ‘set point’ for the hidden layer (determined by the kWTA algorithm) involves having 6 units active, and the unique parts of the competitor only take up 4 of these 6 units, this leaves room for activity to spread to additional units. Given the topographic projections in the output layer, the model is biased to ‘pick up’ units that are adjacent in color space to the currently active units; because activity cannot flow easily from the competitor back to the target (as a result of the aforementioned severing of connections), it flows instead away from the target, activating two additional units, which are then incorporated into the competitor representation. This sequence of events (first a severing of the shared units, then a shift away from the target) completes the process of neural differentiation, and is what leads to the behavioral repulsion effect in color recall (because the center-of-mass of the color representation has now shifted away from the target) — the full sequence of events is illustrated in Video 2. 
Lastly, when similarity increased further (to 3/6 units or above), competitor pop-up increased: the co-activity between the shared units and the unique competitor units now falls on the right side of the U-shaped function, strengthening the connections between these units (instead of severing them). This strengthening leads to neural integration and behavioral attraction in color space, as shown in Video 3.
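The role of the activity set point in the 2/6 condition can be illustrated with a deliberately simplified "hard" k-winners-take-all rule that keeps only the k most strongly driven units active. This is an assumption-laden sketch: the model's kWTA algorithm and inhibitory oscillations are described in the Methods and are not reproduced here, and the net-input values below are invented for illustration.

```python
import numpy as np

K_ACTIVE = 6  # from the text: the hidden-layer set point is six active units


def hard_kwta(net_input, k=K_ACTIVE):
    """Keep the k most strongly driven units active and silence the rest.

    Simplification: a hard top-k selection, not the model's inhibitory dynamics.
    """
    net_input = np.asarray(net_input, dtype=float)
    winners = np.argsort(net_input)[::-1][:k]
    active = np.zeros(net_input.size, dtype=bool)
    active[winners] = True
    return active


# Toy net input when the competitor is presented after the severing step.
net = np.zeros(20)
net[12:16] = 1.0   # four unique competitor units: strongly driven
net[10:12] = 0.1   # two formerly shared units: connections severed, weak drive
net[16:18] = 0.3   # two neighbors on the far side: some spreading activity
print(np.flatnonzero(hard_kwta(net)).tolist())
```

With these made-up inputs, the four unique units fill only four of the six available slots, and the remaining two slots go to the neighboring units on the side away from the target rather than to the formerly shared units.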

Model of Chanales et al., 2021 1/6 (low overlap) condition.

This video illustrates how the competitor does not pop up given low levels of hidden-layer overlap, so no representational change occurs.

Model of Chanales et al., 2021 2/6 (medium overlap) condition.

This video illustrates how the competitor pops up moderately and differentiates given medium levels of hidden-layer overlap.

Model of Chanales et al., 2021 3/6 (high overlap) condition.

This video illustrates how the competitor pops up strongly and integrates given high levels of hidden-layer overlap.

In summary, this version of our model qualitatively replicates the key pattern of results in Chanales et al., 2021, whereby increasing color similarity led to a repulsion effect in color recall, which went away as similarity increased further. The reasons why the model gives rise to these effects align well with the Potential NMPH Explanation provided earlier: Higher color similarity increases competitor activity, which first leads to differentiation of the underlying representations, and then — as competitor activity increases further — to integration of these representations.

Asymmetry of representational change

A striking feature of the model is that the repulsion effect in the 2/6 condition is asymmetric: One pairmate anchors in place and the other pairmate shifts its color representation. Figure 4B illustrates this asymmetry, by tracking how the center-of-mass for both pairmates changes over time in the output layer. Specifically, for each pairmate, we calculated the difference between the center-of-mass at the end of learning compared to the initial center-of-mass. A distortion away from the competitor is coded as negative, and toward the competitor is positive. When repulsion occurred in the 2/6 condition, the item shown first (pairmate 1) anchored in place (i.e., the final color report was unchanged and accurate), whereas the item shown second (pairmate 2) moved away from its competitor.
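The sign convention for the per-pairmate distortion can be sketched as follows. The helper name `signed_shift` and the example center-of-mass values are illustrative assumptions.

```python
def signed_shift(com_before, com_after, competitor_com):
    """Shift of a pairmate's center-of-mass, signed relative to its competitor.

    Negative: the pairmate moved away from the competitor (repulsion).
    Positive: the pairmate moved toward the competitor (attraction).
    """
    shift = com_after - com_before
    # +1 if the competitor lies at a higher position, -1 if it lies lower.
    direction = 1.0 if competitor_com >= com_before else -1.0
    return shift * direction


# Toy values: pairmate 1 stays at 12.5; pairmate 2 moves from 16.5 to 18.5.
print(signed_shift(12.5, 12.5, competitor_com=16.5))  # pairmate 1: no shift
print(signed_shift(16.5, 18.5, competitor_com=12.5))  # pairmate 2: negative
```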

This asymmetry was also observed in the hidden layer (Figure 4D and E). In Figure 4E, we used multidimensional scaling (MDS) to visualize how the hidden-layer representations changed over time. MDS represents patterns of hidden-layer activity as points in a 2D plot, such that the distance between each pair of points corresponds to the Euclidean distance between their hidden-layer patterns. We made an MDS plot for four patterns per run: the initial hidden-layer pattern for the item shown first (pairmate 1before), the initial hidden-layer pattern for the item shown second (pairmate 2before), the final hidden-layer pattern for the item shown first (pairmate 1after), and the final hidden-layer pattern for the item shown second (pairmate 2after). Since the 0/6 and 1/6 conditions did not give rise to representational change, the MDS plot unsurprisingly shows that the before and after patterns of both pairmates remain unchanged. For the 2/6 condition (which shows differentiation), the pairmate 1before item remains clustered around pairmate 1after. However, pairmate 2after distorts, becoming relatively more dissimilar to pairmate 1, indicating that the differentiation is driven by pairmate 2 distorting away from its competitor. This asymmetry in differentiation arises for reasons described in the previous section — when pairmate 2 pops up as a competitor during recall of pairmate 1, the unique units of pairmate 2 are severed from the units that it (formerly) shared with pairmate 1, allowing it to acquire new units elsewhere given the inhibitory set point. After pairmate 2 has shifted away from pairmate 1, they are no longer in competition, so there is no need for pairmate 1 to adjust its representation and it stays in its original location in representational space (see Video 2).
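One way to produce this kind of plot is sketched below using classical (eigendecomposition-based) MDS followed by a shift and rotation that places the first pattern at the origin and the second directly to its right. Whether the published figure used classical MDS or another variant is not stated in this section, so the algorithm choice is an assumption, and the scaling and jitter steps mentioned in the figure legend are omitted.

```python
import numpy as np


def classical_mds(patterns, n_dims=2):
    """Embed the rows of `patterns` so that Euclidean distances are approximated."""
    patterns = np.asarray(patterns, dtype=float)
    diffs = patterns[:, None, :] - patterns[None, :, :]
    sq_dist = (diffs ** 2).sum(axis=-1)
    n = sq_dist.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ sq_dist @ centering      # double-centered Gram matrix
    eigvals, eigvecs = np.linalg.eigh(gram)            # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:n_dims]         # keep the largest n_dims
    scale = np.sqrt(np.clip(eigvals[order], 0.0, None))
    return eigvecs[:, order] * scale


def align(coords, origin_idx=0, right_idx=1):
    """Shift and rotate 2D coordinates so that point `origin_idx` sits at (0, 0)
    and point `right_idx` lies on the positive x-axis."""
    shifted = coords - coords[origin_idx]
    dx, dy = shifted[right_idx]
    angle = np.arctan2(dy, dx)
    c, s = np.cos(-angle), np.sin(-angle)
    rotation = np.array([[c, -s], [s, c]])             # rotation by -angle
    return shifted @ rotation.T


# Toy run: pairmate 1 is unchanged, pairmate 2 moves away from it.
def block(start, n_units=20, width=6):
    pattern = np.zeros(n_units)
    pattern[start:start + width] = 1.0
    return pattern

patterns = np.stack([block(4), block(8), block(4), block(10)])  # 1before, 2before, 1after, 2after
coords = align(classical_mds(patterns))
print(np.round(coords, 3))
```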

In our model, the asymmetry in differentiation manifested as a clear order effect: pairmate 1 anchors in place and pairmate 2 shifts away from pairmate 1. It is important to remember, however, that this effect is contingent on pairmate 2 popping up as a competitor when pairmate 1 is first shown as a target. If the dynamics had played out differently, different results might have been obtained. For example, if pairmate 2 does not pop up as a competitor when pairmate 1 is first presented, and instead pairmate 1 pops up as a competitor when pairmate 2 is first presented, we would expect the opposite pattern of results (i.e. pairmate 2 will anchor in place and pairmate 1 will shift away from pairmate 2). We return to these points, and their implications for empirically testing the model’s predictions about asymmetry, in the Discussion section.

Two kinds of integration

We also observed that integration could take two different forms, symmetric and asymmetric (Figure 4B and E). In this version of the model, symmetric integration is more common. This can be seen in the MDS plots for conditions 3/6, 4/6, and 5/6: Both pairmate 1after and pairmate 2after move toward each other. This is because both pairmates end up connecting to all the units that were previously connected to either pairmate individually. Essentially, the hidden units for pairmate 1 and pairmate 2 are ‘tied’ in terms of strength of excitation, so they are all allowed to be active at once (see Activity and inhibitory dynamics in the Methods). However, in the 3/6 condition, some runs show asymmetric integration where pairmate 2 distorts toward pairmate 1 (Figure 4E).

Whether symmetric or asymmetric integration occurs depends on the relative strengths of connections between pairs of unique competitor units (competitor-competitor connections) compared to connections between unique competitor units and shared units (competitor-shared connections) after the first trial (Figure 5; note that the figure focuses on connections between hidden units, but the principle also applies to connections that span across layers). Generally, coactivity between unique competitor units (competitor-competitor coactivity) is less than coactivity between unique competitor units and shared units (competitor-shared coactivity), which is less than coactivity between unique target units and shared units (target-shared coactivity). In the 2/6 condition (Figure 5A), competitor-competitor coactivities fall on the left side of the U-shaped function, and remain unchanged. In the 4/6 and 5/6 conditions, because competitor activity is so high, competitor-competitor coactivities fall on the right side of the U-shaped function (Figure 5B). It follows, then, that there is some overlap amount where competitor-competitor coactivities fall in the dip of the U-shaped function (Figure 5C). This is what happens in some runs in the 3/6 condition: On trial 1, some competitor-competitor connections (i.e. internal connections within pairmate 2) are severed, while, at the same time, competitor-shared connections are strengthened. On the next trial, when the model is asked to recall pairmate 2, activity flows out of the pairmate 2 representation into the shared units, which are connected to the representations of both pairmate 1 and pairmate 2. Because the representation of pairmate 2 has been weakened by the severing of its internal connections, while the representation of pairmate 1 is still strong, the representation of pairmate 1 outcompetes the representation of pairmate 2 (i.e. pairmate 1 receives substantially more excitation via recurrent connections than pairmate 2) and the neural pattern in the hidden layer ‘flips over’ to match the original pairmate 1 representation. This hidden-layer pattern is then associated with the pairmate 2 input, resulting in asymmetric integration: from this point forward, both pairmate 2 and pairmate 1 evoke the original pairmate 1 representation (see Video 4).

Schematic of coactivities on Trial 1.

Generally, coactivity between pairs of units that are unique to the competitor (competitor-competitor coactivity) is less than coactivity between unique competitor units and shared units (competitor-shared coactivity), which is less than target-target, target-shared, or shared-shared coactivity. The heights of the vertical bars in the bottom row indicate activity levels for particular hidden units. The top row plots coactivity values for a subset of the pairings between units (those highlighted by the arcs in the bottom row), illustrating where they fall on the U-shaped learning function. (A) Schematic of the typical arrangement of coactivity in the hidden-hidden connections and item-hidden connections in the 2/6 condition. The competitor units do not activate strongly. As a result, the competitor-shared connections are severed because they fall in the dip of the U-shaped function; this, in turn, leads to differentiation. (B) Typical arrangement of coactivity in the higher overlap conditions, 4/6 and 5/6. Here, the competitor units are highly active. Consequently, all connection types fall on the right side of the U-shaped function, leading to integration. Specifically, all units connect to each other more strongly, leading units previously associated with either pairmate A or B to join together. (C) As competitor pop-up increases, moving from the situation depicted in panel A to panel B, intermediate levels of competitor activity can result in competitor-competitor coactivity levels falling into the dip of the U-shaped function. If enough competitor-competitor connections weaken, while competitor-shared connections strengthen, this imbalance can lead to an asymmetric form of integration where pairmate 2 moves toward pairmate 1 (see text for details). This happens in some runs of the 3/6 overlap condition.

Two different types of integration.

This video illustrates how integration can either be symmetric or asymmetric depending on the amount of competitor pop-up.

Thus, two kinds of integration can occur — one where both pairmates pick up all units that initially belonged to either pairmate (symmetric), and one where pairmate 2 moves toward pairmate 1 (asymmetric). Generally, as competitor activity is raised, competitor-competitor coactivity is raised, and it is more likely the integration will become symmetric.

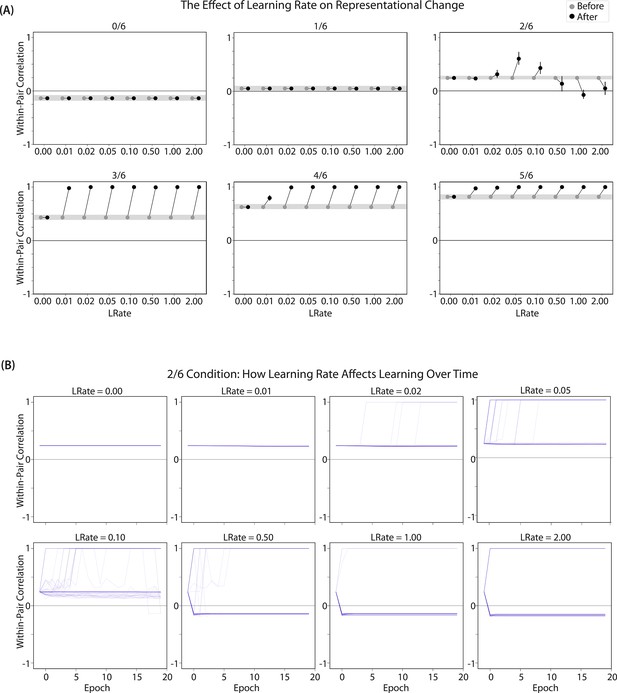

Differentiation

We found that differentiation requires a high learning rate. In our model, the change in connection weights on each trial is multiplied by a learning rate (LRate), which is usually set to 1. Lowering the LRate value, consequently, leads to smaller learning increments. When we cycle through LRate values for all projections other than the output-to-output connection (where LRate is zero), we find that differentiation fails to occur in the 2/6 condition if LRate is too low (Figure 6A): If the connections between competitor (pairmate 2) and shared units are not fully severed on Trial 1, the (formerly) shared units may still receive enough excitation to strongly activate when the model is asked to recall pairmate 2 on Trial 2. This can lead to two possible outcomes: Sometimes the activity pattern in the hidden layer ends up matching the original pairmate 2 representation (i.e. the shared units are co-active with the unique pairmate 2 units), resulting in a re-forming of the original representation. In other cases, asymmetric integration occurs: If connections between pairmate 2 units and shared units have weakened somewhat, while the connections between shared units and pairmate 1 units are still strong, then spreading activity from the (re-activated) shared units on Trial 2 can lead to the original pairmate 1 hidden-layer representation outcompeting the original pairmate 2 hidden-layer representation, in which case the pairmate 2 inputs will become associated with the original pairmate 1 hidden-layer representation. See Video 5 for illustrations of both possible outcomes. A useful analogy may be escape velocity from astrophysics. Spaceships need to be going a certain speed to escape the pull of gravity. Similarly, the competitor representation needs to get a certain distance away from its pairmate in one trial, or else it will get pulled back in.
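The "escape velocity" intuition can be illustrated with a toy calculation. Everything numerical here is an assumption made for illustration: weights are normalized to 1, a full-strength weakening step at LRate = 1 is taken to remove the whole weight, and `REACTIVATION_WEIGHT` is a hypothetical residual weight above which the formerly shared units would still be reactivated on Trial 2. None of these values come from the model.

```python
W_MAX = 1.0                # assumption: pre-wired competitor-shared weight
DIP_CHANGE = -1.0          # assumption: weight change in the dip when LRate = 1
REACTIVATION_WEIGHT = 0.4  # assumption: residual weight that still reactivates shared units


def weight_after_trial1(lrate):
    """Competitor-shared weight after one weakening step, clipped to [0, W_MAX]."""
    return min(W_MAX, max(0.0, W_MAX + lrate * DIP_CHANGE))


def outcome(lrate):
    """Toy classification of what happens when the competitor is next presented."""
    residual = weight_after_trial1(lrate)
    if residual > REACTIVATION_WEIGHT:
        return "pulled back"      # shared units reactivate; no differentiation
    return "differentiates"       # shared units stay silent; competitor shifts away


for lrate in (0.2, 1.0):
    print(f"LRate={lrate}: residual weight={weight_after_trial1(lrate):.2f}, {outcome(lrate)}")
```

The point of the sketch is only the threshold-like behavior: a single learning step either weakens the connection enough for the competitor to escape, or it does not and the original pattern can re-form.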

Learning rate and representational change.

(A) The learning rate (LRate) parameter was adjusted and the within-pair correlation in the hidden layer was calculated for each overlap condition. In each plot, the gray horizontal bar indicates baseline similarity (prior to NMPH learning); values above the gray bar indicate integration and values below the gray bar indicate differentiation. Error bars indicate the 95% confidence interval around the mean (computed based on 50 model runs). The default LRate for simulations in this paper is 1. In the low overlap conditions (0/6 and 1/6), adjusting the LRate has no impact on representational change. In the 2/6 condition, differentiation does not occur if the LRate is lowered. In the high overlap conditions (3/6, 4/6, and 5/6), integration occurs regardless of the LRate (assuming it is set above zero). (B) For each LRate value tested, the within-pair correlation over time in the 2/6 condition is shown, where each purple line is a separate run (darker purple lines indicate many lines superimposed on top of each other). When LRate is set to 0.50 or higher, some model runs show abrupt, strong differentiation, resulting in negative within-pair correlation values; these negative values indicate that the hidden representation of one pairmate specifically excludes units that belong to the other pairmate.

Effects of lowering learning rate.

This video illustrates how differentiation can fail to occur when the learning rate is too low.

A corollary of the fact that differentiation requires a high learning rate is that, when it does happen in the model, it happens abruptly. After competitor-shared connections are weakened, this can have two possible effects. If the learning rate is high enough to sever the competitor-shared connections, differentiation will be evident the next time the competitor is presented. If the amount of weakening is insufficient, the formerly shared units will be reactivated and no differentiation will occur. The abruptness of differentiation can be seen in Figure 6B, which shows learning across trials for individual model runs (with different random seeds) as a function of LRate: When the learning rate is high enough to cause differentiation, it always happens between the first and second epochs of training. The prediction that differentiation should be abrupt is also supported by empirical studies. For instance, Wanjia et al., 2021 showed that behavioral expressions of successful learning are coupled with a temporally abrupt, stimulus-specific decorrelation of CA3/dentate gyrus activity patterns for highly similar memories.

In contrast to these results showing that differentiation requires a large LRate, the integration effects observed in the higher-overlap conditions do not depend on LRate. Once two items are close enough to each other in representational space to strongly coactivate, a positive feedback loop ensues: Any learning that occurs (no matter how small) will pull the competitor closer to the target, making them even more likely to strongly coactivate (and thus further integrate) in the future.

Pairs of items that differentiate show anticorrelated representations

Figure 6B also highlights that, for learning rates where robust differentiation effects occur in aggregate (i.e. there is a reduction in mean pattern similarity, averaging across model runs), these aggregate effects involve a bimodal distribution across model runs: For some model runs, learning processes give rise to anticorrelated representations, and for other model runs the model shows integration; this variance across model runs is attributable to random differences in the initial weight configuration of the model and/or in the order of item presentations across training epochs. The aggregate differentiation effect is therefore a function of the proportion of model runs showing differentiation (here, anticorrelation) and the proportion of model runs showing integration. The fact that differentiation shows up as anticorrelation in the model’s hidden layer relates to the learning effects discussed earlier: Unique competitor units are sheared away from (formerly) shared units, so the competitor ends up not having any overlap with the target representation (i.e. the level of overlap is less than you would expect due to chance, which mathematically translates into anticorrelation). We return to this point and discuss how to test for anticorrelation in the Discussion section.
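The link between zero overlap and anticorrelation can be checked with a short calculation. For two binary patterns that each have k active units out of N, with m units in common, the Pearson correlation is r = (mN - k^2) / (k(N - k)), which is zero when m equals the chance overlap k^2/N and negative when m is smaller. The sketch below verifies this numerically; the 50-unit layer width is an assumption, and binary activity is a simplification of the model's graded activity.

```python
import numpy as np


def binary_pattern_correlation(n_units, k_active, n_shared):
    """Closed-form Pearson r for two binary patterns with k_active ones each
    and n_shared active units in common."""
    return (n_shared * n_units - k_active ** 2) / (k_active * (n_units - k_active))


def empirical_correlation(n_units, k_active, n_shared):
    """Same quantity computed directly from explicit patterns."""
    a = np.zeros(n_units)
    b = np.zeros(n_units)
    a[:k_active] = 1.0
    start_b = k_active - n_shared
    b[start_b:start_b + k_active] = 1.0
    return float(np.corrcoef(a, b)[0, 1])


N_UNITS = 50   # assumption: the layer width is not stated in this section
K_ACTIVE = 6   # from the text: six units per pairmate

print("chance overlap (units):", K_ACTIVE ** 2 / N_UNITS)
for m in range(K_ACTIVE):
    r = binary_pattern_correlation(N_UNITS, K_ACTIVE, m)
    print(f"shared={m}: r={r:+.3f}")
```

Because the chance overlap for six active units is below one unit under this assumed layer width, sharing zero units already places the pair below chance, which is why full separation shows up as a negative correlation.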

Take-home lessons

Our model of Chanales et al., 2021 shows that the NMPH can explain the results observed in the study, namely that moderate color similarity can lead to repulsion and that, if color similarity is increased beyond that point, the repulsion is eliminated. Furthermore, our model shows how the behavioral changes in this paradigm are linked to differentiation and integration of the underlying neural representations. The simulations also enrich our NMPH account of these phenomena in several ways, beyond the verbal account provided in Ritvo et al., 2019. In particular, the simulations expose some important boundary conditions for when representational change can occur according to the NMPH (e.g. that differentiation depends on a large learning rate, but integration does not), and the simulations provide a more nuanced account of exactly how representations change (e.g. that differentiation driven by the NMPH is always asymmetric, whereas integration is sometimes asymmetric and sometimes symmetric; and that, when differentiation occurs on a particular model run, it tends to give rise to anticorrelated representations in the model’s hidden layer).

There are several aspects of Chanales et al., 2021 left unaddressed by our model. For instance, they interleaved the color recall task (simulated here) with an associative memory test (not simulated here) where a colored object appeared as a cue and participants had to select the associated face. Our goal was to show how the simplest form of their paradigm could lead to the distortion effects that were observed; future simulations can assess whether these other experiment details affect the predictions of the model.

Model of Favila et al., 2016: similar and different predictive associations

Key experimental findings

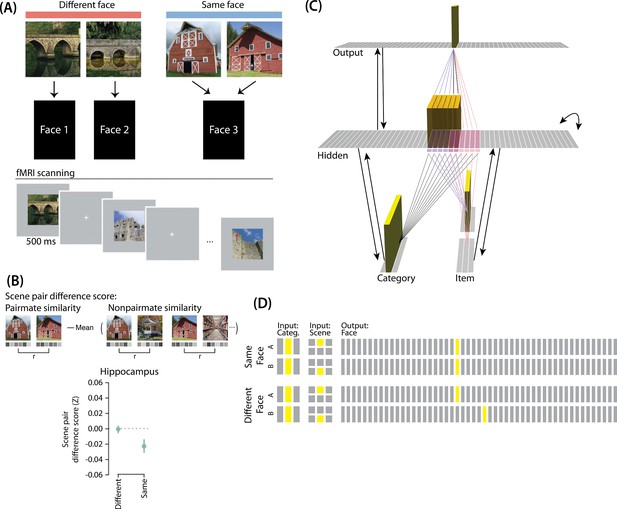

Favila et al., 2016 provided neural evidence for differentiation following competition, using a shared associate to induce competition between pairmates. In this study (Figure 7A), participants were instructed to learn scene-face associations. Later, during the repeated face-test task, participants were shown a scene and asked to pick the correct face from a bank of faces. Scenes were made up of highly similar pairs (e.g. two bridges, two barns). Sometimes, two paired scenes predicted the same face, sometimes different faces, and sometimes no face at all (in the latter case, the paired scenes appeared in the study task and not the face-test task). Participants were never explicitly told that some scene pairs shared a common face associate.

Modeling Favila et al., 2016.

(A) Participants learned to associate individual scenes with faces (faces not shown here due to bioRxiv rules). Each scene had a pairmate (another, similar image from the same scene category, e.g., another barn), and categories were not re-used across pairs (e.g. if the stimulus set included a pair of barns, then none of the other scenes would be barns). Pairmates could be associated with the same face, different faces, or no face at all (not shown). Participants were scanned while looking at each individual scene in order to get a measure of neural representations for each scene. This panel was adapted from Figure 1 of Favila et al., 2016. (B) Neural similarity was measured by correlating scene-evoked patterns of fMRI activity. A scene pair difference score was calculated by subtracting non-pairmate similarity from pairmate similarity; this measure shows the relative representational distance of pairmates. Results for the different-face and same-face condition in the hippocampus are shown here: Linking scenes to the same face led to a negative scene pair difference score, indicating that scenes became less similar to each other than they were to non-pairmates (differentiation). This panel was adapted from Figure 2 of Favila et al., 2016. (C) To model this study, we used the same basic structure that was described in Basic Network Properties. In this model, the category layer represents the type of scene (e.g. barn, bridge, etc.), and the item layer represents an individual scene. The output layer represents the face associate. Activity shown is for pairmate A in the same-face condition. Category-to-hidden, item-to-hidden and hidden-to-hidden connections are pre-wired similarly to the 2/6 condition of our model of Chanales et al., 2021 (see Figure 2). The hidden A and B units have random, low-strength connections to all output units, but are additionally pre-wired to connect strongly to either one or two units in the output layer. 
In the different-face condition, hidden A and B units are pre-wired to connect to two different face units, but in the same-face condition, they are pre-wired to connect to the same face unit. (D) This model has two conditions: same face and different face. The only difference between conditions is whether the hidden A and B units connect to the same or different face unit in the output layer.

When the two scenes predicted different faces, the hippocampal representations for each scene were relatively orthogonalized — hippocampal representations of the two pairmate scenes were just as similar to each other as to non-pairmate scenes. However, when the two scenes predicted the same face, differentiation resulted, such that the hippocampal representations of the two pairmate scenes were less similar to each other than to non-pairmate scenes (Figure 7B).
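The scene pair difference score can be sketched as follows. This is a simplified illustration, assuming one activity pattern per scene and a plain average over non-pairmate correlations; details of the original fMRI analysis (for example, how patterns were estimated or whether correlations were transformed before averaging) are not reproduced, and the function name is hypothetical.

```python
import numpy as np


def scene_pair_difference(patterns, i, j):
    """Pairmate similarity minus mean non-pairmate similarity.

    `patterns` has one row per scene; rows i and j are the pairmates.
    Negative scores mean the pairmates are less similar to each other
    than they are to the other scenes (differentiation).
    """
    corr = np.corrcoef(patterns)
    others = [k for k in range(len(patterns)) if k not in (i, j)]
    non_pairmate = np.mean([corr[i, k] for k in others] +
                           [corr[j, k] for k in others])
    return float(corr[i, j] - non_pairmate)


# Toy data: six scenes with random patterns over 40 features.
rng = np.random.default_rng(0)
patterns = rng.normal(size=(6, 40))
print(scene_pair_difference(patterns, 0, 1))
```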

Potential NMPH explanation

As noted earlier, these results contradict supervised learning models, which predict that pairing two stimuli with the same associate would lead to integration, not differentiation. The NMPH, however, can potentially explain these results: Linking the pairmates to a shared face associate provides an additional pathway for activity to spread from the target to the competitor (i.e. activity can spread from scene A, to the shared face, to scene B). If competitor activity falls on the left side of the U-shaped function in the different-face condition, then the extra spreading activity in the same-face condition could push the activity of the competitor into the ‘dip’ of the function, leading to differentiation.

Model set up

Model architecture

Our model of Chanales et al., 2021 can be adapted for Favila et al., 2016. Instead of mapping from an object and face to a color, as in Chanales et al., 2021, the Favila et al., 2016 study involves learning mappings between scenes and faces. Also, the way in which competition is manipulated is different across the studies: In Chanales et al., 2021, competition is manipulated by varying the similarity of the stimuli (specifically, the color difference of the objects), whereas in Favila et al., 2016, competition is manipulated by varying whether pairmate scenes are linked to the same vs. different face.

To adapt our model for Favila et al., 2016, the interpretation of each layer must be altered to fit the new paradigm (Figure 7C). The category layer now represents the category for the scene pairmates (e.g. barn, bridge, etc.). The item layer represents the individual scene (e.g. where A represents barn 1 and B represents barn 2). Just as before, the input for each stimulus is composed of two units (one in each of the two input layers); the category-layer unit is consistent for A and B, but the item-layer unit differs.

The output layer in this model represents the face associate, such that each individual output-layer unit could be thought of as a single face. For this model, the ordering of units in the output layer is not meaningful: two units next to each other in the output layer are no more similar to each other than to any other units.

Knowledge built into the network

As before, we were interested in the moment that competition first happens, so we pre-wired connections as if some initial learning had occurred. We pre-wired the connections between the hidden layer and both input layers (and from the hidden layer to itself) to be similar to the 2/6 condition of our model of Chanales et al., 2021, so there is some baseline amount of overlap between A and B (i.e. reflecting the similar-looking scenes).

To mimic the learning of scene-face associates, all hidden A units are connected to a single unit in the face layer, and all hidden B units are connected to a single unit in the face layer. In the different-face condition, A and B hidden units are connected to different face units, to reflect that the two scenes were predictive of two separate faces. In the same-face condition, A and B hidden units connect to the same face unit, to reflect that A and B predict the same face.
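The pre-wiring scheme for the two conditions can be sketched as follows. This is a simplified illustration, not the simulation code: the layer sizes, the strong weight of 0.9, the upper bound of 0.05 on the weak random weights, and the function names are all assumptions introduced for the example.

```python
import random

def prewire_hidden_to_face(condition, n_faces=5, units_per_item=3,
                           strong=0.9, max_weak=0.05, seed=0):
    """Return weights[item][hidden_unit][face_unit] for items 'A' and 'B'.

    condition: "same_face" or "different_face"
    All sizes and strengths are placeholders, not the paper's values.
    """
    rng = random.Random(seed)
    # Face unit that each pairmate is strongly wired to (indices are arbitrary).
    face_for = {"A": 0, "B": 0 if condition == "same_face" else 1}
    weights = {}
    for item in ("A", "B"):
        rows = []
        for _ in range(units_per_item):
            # random, low-strength connections to every face unit ...
            row = [rng.uniform(0.0, max_weak) for _ in range(n_faces)]
            # ... plus one strong connection to the associated face
            row[face_for[item]] = strong
            rows.append(row)
        weights[item] = rows
    return weights

def strongest_face(rows):
    """Index of the face unit receiving the most total weight."""
    totals = [sum(col) for col in zip(*rows)]
    return totals.index(max(totals))

same = prewire_hidden_to_face("same_face")
diff = prewire_hidden_to_face("different_face")
```

The only thing that differs between `same` and `diff` is which face unit the hidden B units target, mirroring the statement that the two conditions differ solely in whether hidden A and B units connect to the same or to different face units.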

Manipulation of competitor activity

Competitor activity is modulated by the similarity of predictive consequences in this version of the model — that is, whether the hidden units for pairmates A and B are pre-wired to connect strongly to the same unit or different units in the output layer. Stronger connections to the same face unit should provide an extra conduit for excitation to flow to the competitor units.

Task

The task performed by the model was to guess the face associated with each scene. We clamped the external input for the scenes and allowed activity to spread through the hidden layer to the output layer so it could make a guess for the correct face. No correct answer was shown, and no error-driven learning was used. Although the exact parameter values used in this simulation were slightly different from the values used in the previous simulation (see Methods for details), inhibition and oscillations were implemented in the same way as before.

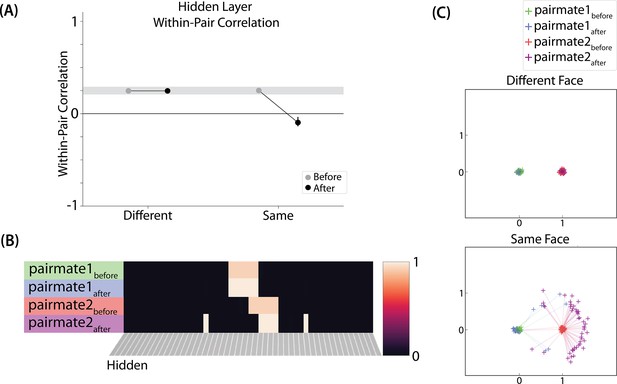

Results

For this study, the key dependent measure was representational change within the hidden layer. Specifically, we sought to capture the hippocampal pattern similarity results reported by Favila et al., 2016.

Differentiation and integration

The different-face condition in this model led to no representational change (see Video 6) whereas the same-face condition led to differentiation (see Video 7), as measured using within-pair correlation (Figure 8A). Differentiation is indicated by the fact that new units are added that did not previously belong to either pairmate. In this version of the model, the topography of the hidden layer is not meaningful other than the units assigned to A and B, so the new units that are added to the representation could be on either side. The reason why the model shows differentiation in the same-face condition (but not in the different-face condition) aligns with the Potential NMPH Explanation provided earlier: The shared face associate in the same-face condition provides a conduit for extra activity to spread to the competitor scene pairmate, leading to moderate activity that triggers differentiation. Note also that the exact levels of differentiation that are observed in the different-face and same-face conditions are parameter dependent; for an alternative set of results showing some differentiation in the different-face condition (but still less than is observed in the same-face condition), see Figure 8—figure supplement 1.
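As a rough illustration of the within-pair correlation measure, the sketch below compares toy hidden-layer patterns before and after learning. The patterns are invented for the example, and they assume that pairmate 2 gives up the units it shared with pairmate 1 while acquiring new ones; they are not taken from any model run.

```python
import math

def within_pair_correlation(a, b):
    """Pearson correlation between two hidden-layer activity patterns."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)

# Ten toy hidden units; 1 = active, 0 = inactive (illustrative values only).
pairmate1_before = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
pairmate2_before = [0, 0, 1, 1, 1, 1, 0, 0, 0, 0]  # shares units 2 and 3

# Asymmetric change: pairmate 1 stays put, pairmate 2 drops the shared
# units and picks up two units that belonged to neither item.
pairmate1_after = list(pairmate1_before)
pairmate2_after = [0, 0, 0, 0, 1, 1, 1, 1, 0, 0]

r_before = within_pair_correlation(pairmate1_before, pairmate2_before)
r_after = within_pair_correlation(pairmate1_after, pairmate2_after)
```

With these toy patterns the correlation drops from a small positive value to a negative one, so the same measure captures both the reduction in within-pair correlation and the anticorrelation seen on individual differentiating runs.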

Model of Favila et al., 2016 different-face condition.

This video illustrates how no representational change occurs in the different-face condition of our simulation of Favila et al., 2016.

Model of Favila et al., 2016 same-face condition.

This video illustrates how differentiation occurs in the same-face condition of our simulation of Favila et al., 2016.

Model of Favila et al., 2016 results.

(A) Within-pair correlation between A and B hidden layer representations before and after learning. Error bars indicate the 95% confidence interval around the mean (computed based on 50 model runs). In the same-face condition, the within-pair correlation is reduced after learning, indicating differentiation. (B) Activity patterns of both pairmates in the hidden layer before and after learning for a sample “same-face” run are shown. Asymmetry in distortion can be seen in how pairmate 1’s representation is unchanged and pairmate 2 picks up additional units that did not previously belong to either item (note that there is no topography in the hidden layer in this simulation, so we would not expect the newly-acquired hidden units to fall on one side or the other of the layer). (C) MDS plots for each condition illustrate representational change in the hidden layer. The differentiation in the same-face condition is asymmetric: The post-learning representation of pairmate 2 generally moves further away from pairmate 1 in representational space, while pairmate 1 generally does not change.

Nature of representational change

The representational change in the hidden layer shows the same kind of asymmetry that occurred in our model of Chanales et al., 2021 (Figure 8B and C): In the same-face condition, pairmate 1 typically anchors in place, whereas pairmate 2 acquires new units that did not previously belong to either pairmate (Figure 8B), resulting in it shifting away from pairmate 1 (Figure 8C). Although this pattern is present on most runs, the MDS plot also shows that some runs fail to differentiate and instead show integration (see the Discussion for an explanation of how conditions that usually lead to differentiation may sometimes lead to integration instead). Note that the neural measure of differentiation used by Favila et al., 2016 does not speak to the question of whether representational change was symmetric or asymmetric in their experiment. To measure the (a)symmetry of representational change, it is necessary to take ‘snapshots’ of the representations both before and after learning (e.g. Schapiro et al., 2012), but the method used by Favila et al., 2016 only looked at post-learning snapshots (comparing the neural similarity of pairmates and non-pairmates). We return in the Discussion to this question of how to test predictions about asymmetric differentiation.

Figure 8—figure supplement 1 also indicates that, as in our simulation of Chanales et al., 2021, individual model runs where differentiation occurs show anticorrelation between the pairmate representations, and gradations in the aggregate level of differentiation that is observed across conditions reflect differences in the proportion of trials showing this anticorrelation effect.

Differentiation requires a high learning rate

As in our model of Chanales et al., 2021, we again found that a high LRate is needed for differentiation. Specifically, lowering LRate below its standard value (e.g., to a value of 0.10) eliminated the differentiation effect in the same-face condition. Changing the learning rate did not impact the different-face condition. In this condition, the pop-up is low enough that all competitor-shared connections on Trial 1 fall on the left side of the U-shaped function, so no weight change occurs, regardless of the learning rate setting.

Take-home lessons

This simulation demonstrates that the NMPH can explain the results of Favila et al., 2016, where learning about stimuli that share a paired associate can lead to differentiation. The model shows how linking items with the same or different associates can modulate competitor activity and, through this, modulate representational change. As in our simulation of Chanales et al., 2021, we found that the NMPH-mediated differentiation was asymmetric, manifested as anticorrelation between pairmate representations on individual model runs, and required a high learning rate, leading to abrupt representational change.

Model of Schlichting et al., 2015: blocked and interleaved learning

Key experimental findings

For our third simulation, we focused on a study by Schlichting et al., 2015. This study examined how the learning curriculum affects representational change in different brain regions. Participants learned to link novel objects with a common associate (i.e. AX and BX). Sometimes these associates were presented in a blocked fashion (all AX before any BX) and sometimes they were presented in an interleaved fashion (Figure 9A).

Modeling Schlichting et al., 2015.

(A) Participants in Schlichting et al., 2015 learned to link novel objects with a common associate (i.e., AX and BX). Sometimes these associates were learned in a blocked design (i.e. all AX before any BX), and sometimes they were learned in an interleaved design. The items were shown side-by-side, and participants were not explicitly told the structure of the shared items. Before and after learning, participants were scanned while observing each item alone, in order to get a measure of the neural representation of each object. This panel was adapted from Figure 1 of Schlichting et al., 2015. (B) Some brain regions (e.g. right posterior hippocampus) showed differentiation for both blocked and interleaved conditions, some regions (e.g. left mPFC) showed integration for both conditions, and other regions (e.g. right anterior hippocampus) showed integration in the blocked condition but differentiation in the interleaved condition. This panel was adapted from Figures 3, 4 and 5 of Schlichting et al., 2015. (C) To model this study, we used the network structure described in Basic Network Properties, with the following modifications: The structure of the network was similar to the same-face condition in our model of Favila et al., 2016 (see Figure 7), except we altered the connection strength from units in the hidden layer to the output unit corresponding to the shared item (item X) to simulate what would happen depending on the learning curriculum. In both conditions, the pre-wired connection linking the item B hidden units to the item X output unit is set to 0.7. In the interleaved condition, the connection linking the item A hidden units to the item X output unit is set to 0.8, to reflect some amount of initial AX learning. In the blocked condition, the connection linking the item A hidden units to the item X output unit is set to a higher value (0.999), to reflect extra AX learning.
(D) Illustration of the input and output patterns, which were the same for the blocked and interleaved conditions (the only difference in how we modeled the conditions was in the initial connection strengths, as described above).

The analysis focused on the hippocampus, the medial prefrontal cortex (mPFC), and the inferior frontal gyrus (IFG). Some brain regions (e.g. right posterior hippocampus) showed differentiation for both blocked and interleaved conditions, some regions (e.g. left mPFC) showed integration for both conditions, and others (e.g. right anterior hippocampus) showed integration in the blocked condition but differentiation in the interleaved condition (Figure 9B).

Potential NMPH explanation

The NMPH can potentially explain how the results differ by brain region, since the overall level of inhibition in a region can limit the competitor’s activity. For instance, regions that tend to show differentiation (like posterior hippocampus) have sparser activity (Barnes et al., 1990). Higher inhibition in these areas could cause the activity of the competitor to fall into the moderate range, leading to differentiation. For regions with lower inhibition, competitor activity may fall into the high range, leading to integration.

The result that some regions (e.g. right anterior hippocampus) show differentiation in the interleaved condition and integration in the blocked condition could also be explained by the NMPH. By the first BX trial, we would expect that the connections between A and X would be much stronger in the blocked condition (after many AX trials) compared to the interleaved condition (after one or a few AX trials). This stronger A-X connection could allow more activity to flow from B through X to the A competitor. Consequently, competitor activity in the blocked condition would fall farther to the right of the U-shaped function compared to the interleaved condition, allowing for integration in the blocked condition but differentiation in the interleaved condition.
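A back-of-the-envelope sketch can make the blocked versus interleaved contrast more tangible. It uses the pre-wired connection strengths listed in the Figure 9 caption, but the way they are combined is purely an assumption: multiplying the two link strengths is a toy proxy for how much activity could travel from B through X to A, not the activation rule of the actual network.

```python
# Pre-wired hidden-to-output strengths taken from the Figure 9C caption.
W_BX = 0.7                                      # item B hidden units -> item X (both conditions)
W_AX = {"interleaved": 0.8, "blocked": 0.999}   # item A hidden units -> item X

def indirect_path_strength(w_bx, w_ax):
    """Toy proxy for how much activity can flow B -> X -> A.

    Multiplying the two link strengths is an illustrative assumption,
    not the activation rule used in the actual network.
    """
    return w_bx * w_ax

# Path strength from B to the A competitor in each curriculum condition.
path = {cond: indirect_path_strength(W_BX, w_ax) for cond, w_ax in W_AX.items()}
```

Under this simplification the indirect path is stronger in the blocked condition than in the interleaved condition, which is the direction needed for competitor activity to fall farther to the right of the U-shaped function after blocked learning. Whether that difference is enough to cross from differentiation into integration depends on the full network dynamics, which this sketch does not capture.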

Model set up

Model architecture