The recurrent temporal restricted Boltzmann machine captures neural assembly dynamics in whole-brain activity

Figures

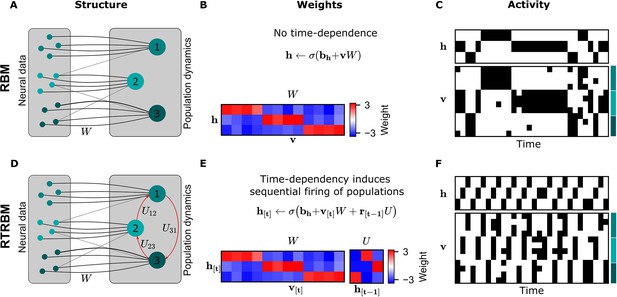

The recurrent temporal RBM (RTRBM) extends the restricted Boltzmann machine (RBM) by additionally accounting for temporal interactions of the neural assemblies.

(A) A schematic depiction of an RBM with visible units (neurons) on the left, and hidden units (neural assemblies) on the right. The visible and hidden units are connected through a set of weights. (B) An example weight matrix in which a subset of visible units is connected to one hidden unit. Details of the equations in panels B and E are given in Materials and methods. (C) Hidden and visible activity traces were generated by sampling from the RBM. Due to its static nature, the RBM samples do not exhibit any sequential activation pattern, but merely show a stochastic exploration of the population activity patterns. (D) Schematic depiction of an RTRBM. The RTRBM formulation matches the static connectivity of the RBM, but extends it with an additional weight matrix that models temporal dependencies between the hidden units. (E) In the present example, assembly 1 excites assembly 2, assembly 2 excites assembly 3, and assembly 3 excites assembly 1, while the remaining connections were set to 0. (F) Hidden and visible activity traces were generated by sampling from the RTRBM. In contrast to the RBM samples, the RTRBM generates samples featuring a sequential firing pattern, which is made possible by the temporal weight matrix.
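The cyclic excitation pattern described for panel E can be illustrated with a toy sampler. The sketch below is a simplified ancestral sampler, not the RTRBM's actual sampling procedure (see Materials and methods for that). The names `U` (hidden-to-hidden temporal weights) and `W` (visible-to-hidden weights), the weight and bias values, and the function `sample_cyclic_assemblies` are all assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sample_cyclic_assemblies(T=200, n_per_assembly=5, seed=0):
    """Toy ancestral sampler (illustrative only, not the RTRBM Gibbs sampler)."""
    rng = np.random.default_rng(seed)
    n_h = 3
    n_v = n_h * n_per_assembly

    # W[i, j]: hypothetical weight between hidden unit i and visible unit j.
    # Each hidden unit connects to its own block of visible units.
    W = np.zeros((n_h, n_v))
    for i in range(n_h):
        W[i, i * n_per_assembly:(i + 1) * n_per_assembly] = 6.0

    # U[i, j]: hypothetical weight from hidden unit j at t-1 to hidden unit i at t.
    # Assembly 1 excites 2, 2 excites 3, 3 excites 1; all other entries are 0.
    U = np.zeros((n_h, n_h))
    U[1, 0] = U[2, 1] = U[0, 2] = 6.0

    # Negative biases (assumed values) keep units mostly silent unless driven.
    b_h = -3.0
    b_v = -3.0

    H = np.zeros((T, n_h), dtype=int)
    V = np.zeros((T, n_v), dtype=int)
    h_prev = np.array([1, 0, 0])  # start with assembly 1 active
    for t in range(T):
        # Hidden state depends only on the previous hidden state in this toy model.
        p_h = sigmoid(U @ h_prev + b_h)
        h = (rng.random(n_h) < p_h).astype(int)
        # Visible units are sampled conditionally on the current hidden state.
        p_v = sigmoid(W.T @ h + b_v)
        V[t] = (rng.random(n_v) < p_v).astype(int)
        H[t] = h
        h_prev = h
    return H, V, U, W
```

Setting `U` to all zeros in this sketch removes the dependence on the previous time step, which mirrors the static behavior described for the RBM samples in panel C.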

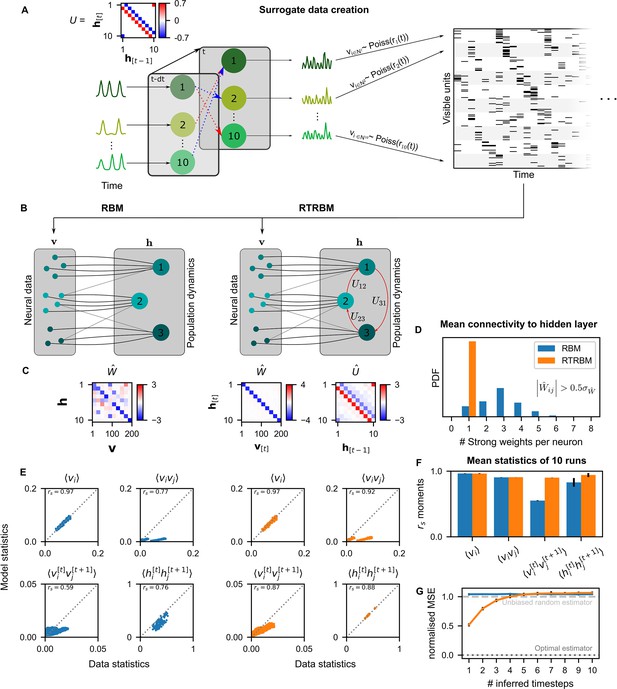

The recurrent temporal RBM (RTRBM) outperforms the restricted Boltzmann machine (RBM) on sequential statistics on simulated data.

(A) Simulated data generation: Hidden units () interact over time to generate firing rate traces which are used to sample a Poisson train. For example, assembly 1 drives assembly 2 and inhibits assembly 10, both at a single time-step delay. (B) Schematic depiction of the RBM and RTRBM trained on the simulated data. (C) For the RBM, the aligned estimated weight matrix contains spurious off-diagonal weights, while the RTRBM identifies the correct diagonal structure (top). For the assembly weights (left), the RTRBM also converges to similar aligned estimated temporal weights (right). (D) The RTRBM attributes only a single strong weight to each visible unit ((, where is the standard deviation of )), consistent with the specification in , while in the RBM multiple significant weights are assigned per visible unit. (E) The RBM and RTRBM perform similarly for concurrent (, ) statistics, but the RTRBM provides more accurate estimates for sequential (, ) statistics. In all panels, the abscissa refers to the data statistics in the test set, while the ordinate shows data sampled from the two models,, respectively. (F) The trained RTRBM and the RBM yield similar concurrent moments, but the RTRBM significantly outperformed the RBM on time-shifted moments (see text for details on statistics). (G) The RTRBM achieved a significantly lower normalized mean squared error (nMSE) than the RBM when predicting up to four time-steps ahead from the current state.
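The distinction between concurrent and time-shifted pairwise statistics can be made concrete with a short sketch. The function `pairwise_moments` below is a hypothetical helper, and the exact statistic definitions used in the paper are those given in the main text, not this code. It assumes activity is stored as an array of shape (time-steps, units).

```python
import numpy as np

def pairwise_moments(X, lag=0):
    """Return M with M[i, j] = mean over t of X[t, i] * X[t + lag, j].

    lag=0 gives concurrent pairwise moments; lag=1 gives moments
    shifted by one time-step. X has shape (T, N).
    """
    X = np.asarray(X, dtype=float)
    T = X.shape[0]
    if not 0 <= lag < T:
        raise ValueError("lag must satisfy 0 <= lag < number of time-steps")
    earlier = X[: T - lag]  # activity at time t
    later = X[lag:]         # activity at time t + lag
    return earlier.T @ later / (T - lag)
```

Applying the same function to data and to model samples, then comparing the resulting matrices entry by entry, gives the kind of scatter comparison described for panel E. A model without temporal weights can match the `lag=0` moments yet still miss the asymmetric structure that appears at `lag=1`.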

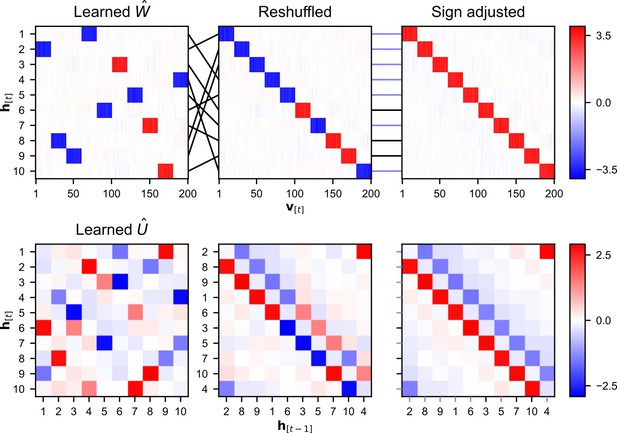

Alignment of weight matrices after learning.

An alignment procedure is used to enable comparison between the estimated temporal weight matrix and the original temporal matrix used to generate the data. Details on the alignment procedure can be found in the section "Alignment of the estimated temporal weight matrix". (A) The link between hidden units and assemblies of visible units can often be clearly identified within the weight matrix, making it possible to define an ordering of the hidden units that is in line with the ordering of the assemblies. This leads to a shuffling of the rows in the matrix. The sign of the mean weight between an assembly and its matched hidden unit is used to identify inverse relations. (B) The reordering of the hidden units leads to a reshuffling of both the rows and the columns of the matrix. The sign of an entry is switched when the two hidden units have opposing signs relative to their matched assemblies of visible units.
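The reordering and sign-switching steps described in this caption can be sketched as follows. This is a minimal illustration and not the authors' implementation: the greedy matching rule, the function name `align_weights`, and the variable names `W_est`, `U_est` and `assembly_of_visible` are assumptions. The sketch also assumes that there are as many assemblies as hidden units and that assemblies are labelled 0 to n_h - 1.

```python
import numpy as np

def align_weights(W_est, U_est, assembly_of_visible):
    """Reorder and sign-align estimated weights (illustrative sketch).

    W_est: (n_h, n_v) visible-to-hidden weights.
    U_est: (n_h, n_h) temporal hidden-to-hidden weights.
    assembly_of_visible: (n_v,) assembly label of each visible unit.
    """
    W_est = np.asarray(W_est, dtype=float)
    U_est = np.asarray(U_est, dtype=float)
    labels = np.asarray(assembly_of_visible)
    n_h = W_est.shape[0]

    # M[i, a]: mean weight between hidden unit i and the visible units of assembly a.
    M = np.stack([W_est[:, labels == a].mean(axis=1) for a in range(n_h)], axis=1)

    # Greedy one-to-one matching on |M| (an assumed rule): repeatedly take the
    # strongest remaining hidden-unit/assembly pair.
    strength = np.abs(M)
    perm = np.empty(n_h, dtype=int)
    for _ in range(n_h):
        i, a = np.unravel_index(np.argmax(strength), strength.shape)
        perm[a] = i
        strength[i, :] = -np.inf
        strength[:, a] = -np.inf

    # Sign of the mean weight between each assembly and its matched hidden unit.
    sign = np.sign(M[perm, np.arange(n_h)])
    sign[sign == 0] = 1.0

    # Rows of W are reordered; rows and columns of U are reordered.
    # An entry of U flips sign when the two matched hidden units have opposing signs.
    W_aligned = sign[:, None] * W_est[perm]
    U_aligned = np.outer(sign, sign) * U_est[np.ix_(perm, perm)]
    return W_aligned, U_aligned, perm, sign
```

The product `sign[i] * sign[j]` is negative exactly when the two hidden units relate to their assemblies with opposite signs, which is the condition under which the caption says an entry is switched.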

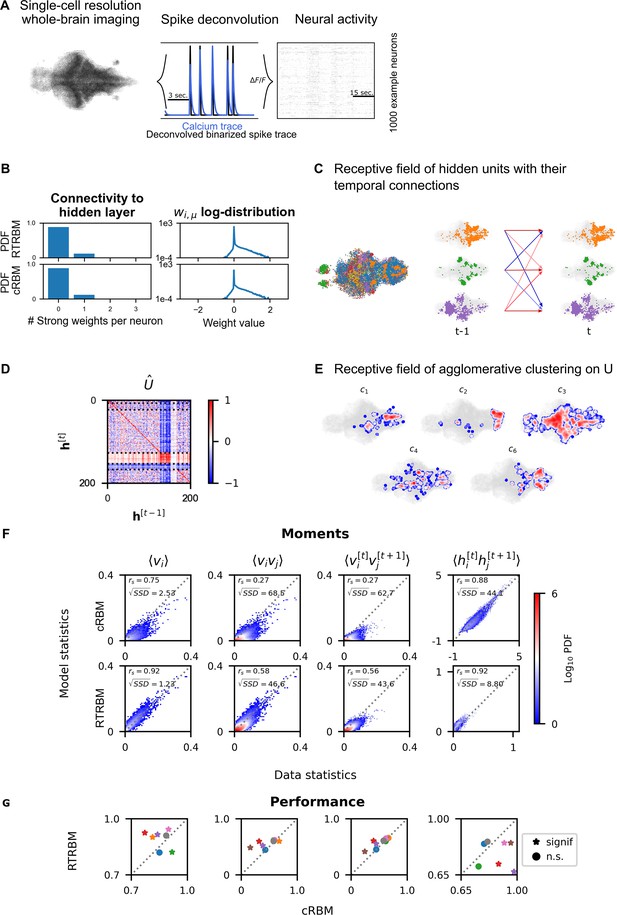

Recurrent temporal RBM (RTRBM) often outperforms the compositional restricted Boltzmann machine (cRBM) on zebrafish data.

(A) Whole-brain neural activity of larval zebrafish was imaged via calcium indicators using light-sheet microscopy at single-neuron resolution (left). Calcium activity (middle, blue) is deconvolved by blind, sparse deconvolution to obtain a binarized spike train (middle, black). The binarized neural activity of 1000 randomly chosen neurons (right). (B) Left: Distribution of all visible-to-hidden weights. Here, a strong weight is determined by proportional thresholding, . Here is set such that 5000 neurons have a strong connection towards the hidden layer. Right: log-weight distribution of the visible-to-hidden connectivity. (C) The RTRBM extracts sample assemblies (color indicates assembly) by selecting neurons based on the previously mentioned threshold. Visible units with stronger connections than this threshold for a given hidden unit are included. Temporal connections (inhibitory: blue, excitatory: red) between assemblies are depicted across time-steps. (D) Temporal connections between the assemblies are sorted by agglomerative clustering (dashed lines separate clusters, colormap is clamped to ). Details on the clustering method can be found in Materials and methods. (E) Corresponding receptive fields of the clusters identified in (D), where the visible units with strong weights are selected similarly to (B). The receptive field of cluster 5 has been left out as it contains only a very small number of neurons with strong weights based on the proportional threshold. (F) Comparative analysis between the cRBM (bottom row) and RTRBM (top row) on inferred model statistics and data statistics (test dataset). The models are compared in terms of Spearman correlation and sum of squared differences. From left to right: the RTRBM significantly outperformed the cRBM on the mean activations (), pairwise neuron-neuron interactions (), time-shifted pairwise neuron-neuron interactions (), and time-shifted pairwise hidden-hidden interactions () for example fish 4.
(G) The methodology in panel F is extended to analyze datasets from eight individual fish, each color representing one individual fish. Spearman correlation and the assessment of significant differences between both models are determined using a bootstrap method (see Materials and methods for details).
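The idea of choosing a threshold so that a fixed number of neurons have a strong connection to the hidden layer (panel B) can be sketched as below. The exact thresholding formula is not reproduced in this caption, so the rule used here (a neuron counts as strongly connected if its largest absolute weight reaches the threshold) is an assumption, as are the function name `proportional_threshold` and its arguments.

```python
import numpy as np

def proportional_threshold(W, n_strong):
    """Pick a threshold so that n_strong visible units have a strong weight.

    W: (n_hidden, n_visible) weight matrix.
    Returns (theta, strong), where strong[i, j] is True if |W[i, j]| >= theta.
    Assumed rule: a visible unit is 'strongly connected' if its largest
    absolute weight to any hidden unit is at least theta.
    """
    absW = np.abs(np.asarray(W, dtype=float))
    per_neuron_max = absW.max(axis=0)
    if not 1 <= n_strong <= per_neuron_max.size:
        raise ValueError("n_strong must be between 1 and the number of visible units")
    # The n_strong-th largest per-neuron maximum becomes the threshold.
    theta = np.sort(per_neuron_max)[-n_strong]
    strong = absW >= theta
    return theta, strong
```

Under this assumed rule, the visible units included in the assembly of hidden unit `i` (as in panel C) would be `np.flatnonzero(strong[i])`. With tied weights, more than `n_strong` units may pass the threshold.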

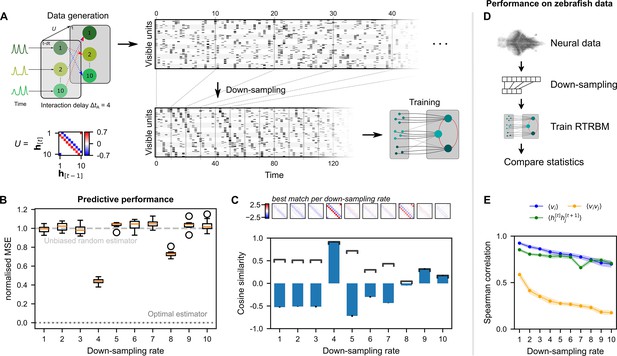

Neural interaction timescale can be identified via recurrent temporal RBM (RTRBM) estimates over multiple timescales.

(A) Training paradigm. Simulated data is generated as in Figure 2, but with temporal interactions between populations at a delay of time-steps. This data is downsampled according to a downsampling rate by taking every -th time-step (shown here is ), and used for training different RTRBMs. (B) Performance of the RTRBM for various downsampling rates measured as the normalized mean squared error (nMSE) in predicting the visible units one time-step ahead ( models per ). Dotted line shows the mean estimate of the lower bound ± SEM () due to inherent variance in the way the data is generated (see Materials and methods). Dashed gray line indicates the theoretical performance of an uninformed, unbiased estimator . (C) Cosine similarity between the interaction matrix and the aligned learned matrices , both z-scored. Bars and error bars show mean and standard deviation, respectively, across the models per . Dark lines show absolute values of the mean cosine similarity. Shown above are the matrices with the largest absolute cosine similarity per downsampling rate. (D) The same procedure as in (A) is performed on neural data to assess the effect of downsampling. (E) Spearman correlation of three important model statistics across different downsampling rates for neural data from example fish 4, similar to Figure 3F. Dots and shaded areas indicate mean and two standard deviations, determined using a bootstrap method (see Materials and methods for details).
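The downsampling step in panel A and the similarity measure in panel C can be sketched in a few lines. This is an illustration under stated assumptions: the helper names `downsample`, `zscore` and `cosine_similarity` are hypothetical, and z-scoring over all matrix entries jointly is an assumed reading of "both z-scored".

```python
import numpy as np

def downsample(X, k):
    """Keep every k-th time-step of X, where X has shape (T, N)."""
    if k < 1:
        raise ValueError("downsampling rate must be a positive integer")
    return np.asarray(X)[::k]

def zscore(A):
    """Z-score all entries of A jointly (assumed normalization)."""
    A = np.asarray(A, dtype=float)
    return (A - A.mean()) / A.std()

def cosine_similarity(A, B):
    """Cosine similarity between two z-scored, flattened matrices."""
    a = zscore(A).ravel()
    b = zscore(B).ravel()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))
```

A similarity near 1 indicates that the learned temporal matrix has the same structure as the generating interaction matrix, while a value near -1 indicates the same structure with inverted sign, which is why the caption also reports absolute values.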