Barcoded bulk QTL mapping reveals highly polygenic and epistatic architecture of complex traits in yeast

Abstract

Mapping the genetic basis of complex traits is critical to uncovering the biological mechanisms that underlie disease and other phenotypes. Genome-wide association studies (GWAS) in humans and quantitative trait locus (QTL) mapping in model organisms can now explain much of the observed heritability in many traits, allowing us to predict phenotype from genotype. However, constraints on power due to statistical confounders in large GWAS and smaller sample sizes in QTL studies still limit our ability to resolve numerous small-effect variants, map them to causal genes, identify pleiotropic effects across multiple traits, and infer non-additive interactions between loci (epistasis). Here, we introduce barcoded bulk quantitative trait locus (BB-QTL) mapping, which allows us to construct, genotype, and phenotype 100,000 offspring of a budding yeast cross, two orders of magnitude larger than the previous state of the art. We use this panel to map the genetic basis of eighteen complex traits, finding that the genetic architecture of these traits involves hundreds of small-effect loci densely spaced throughout the genome, many with widespread pleiotropic effects across multiple traits. Epistasis plays a central role, with thousands of interactions that provide insight into genetic networks. By dramatically increasing sample size, BB-QTL mapping demonstrates the potential of natural variants in high-powered QTL studies to reveal the highly polygenic, pleiotropic, and epistatic architecture of complex traits.

Editor's evaluation

This impressive study not only expands the identification of small-effect QTL, but also reveals epistatic interactions at an unprecedented scale. The approach takes advantage of DNA barcodes to increase the scale of genetic mapping studies in yeast by an order of magnitude over previous studies, yielding a more complete and precise view of the QTL landscape and confirming widespread epistatic interactions between the different QTL.

https://doi.org/10.7554/eLife.73983.sa0

Introduction

In recent years, the sample size and statistical power of genome-wide association studies (GWAS) in humans have expanded dramatically (Bycroft et al., 2018; Eichler et al., 2010; Manolio et al., 2009). Studies investigating the genetic basis of important phenotypes such as height, BMI, and risk for diseases such as schizophrenia now involve sample sizes of hundreds of thousands or even millions of individuals. The corresponding increase in power has shown that these traits are very highly polygenic, with a large fraction of segregating polymorphisms (hundreds of thousands of loci) having a causal effect on phenotype (Yang et al., 2010; International Schizophrenia Consortium et al., 2009). However, the vast majority of these loci have extremely small effects, and we remain unable to explain most of the heritable variation in many of these traits (the ‘missing heritability’ problem; Manolio et al., 2009).

In contrast to GWAS, quantitative trait locus (QTL) mapping studies in model organisms such as budding yeast tend to have much smaller sample sizes of at most a few thousand individuals (Steinmetz et al., 2002; Bloom et al., 2013; Burga et al., 2019; Mackay and Huang, 2018; Bergelson and Roux, 2010). Due to their lower power, most of these studies are only able to identify relatively few loci (typically at most dozens, though see below) with a causal effect on phenotype. Despite this, these few loci explain most or all of the observed phenotypic variation in many of the traits studied (Fay, 2013).

The reasons for this striking discrepancy between GWAS and QTL mapping studies remain unclear. It may be that segregating variation in human populations has different properties than the between-strain polymorphisms analyzed in QTL mapping studies, or the nature of the traits being studied may be different. However, it is also possible that the discrepancy arises for more technical reasons associated with the limitations of GWAS and/or QTL mapping studies. For example, GWAS suffer from statistical confounders due to population structure, and the low median minor allele frequencies in these studies limit power and mapping resolution (Bloom et al., 2013; Sohail et al., 2019; King et al., 2012; Consortium, 2012). These factors make it difficult to distinguish between alternative models of genetic architecture, or to detect specific individual small-effect causal loci. Thus, the highly polygenic architectures apparently observed in GWAS may be at least in part artifacts introduced by these confounding factors. Alternatively, the limited power of existing QTL mapping studies in model organisms such as budding yeast (perhaps combined with the relatively high functional density of these genomes) may cause them to aggregate numerous linked small-effect causal loci into single large-effect ‘composite’ QTL. This would allow these studies to successfully explain most of the observed phenotypic heritability in terms of an apparently small number of causal loci, even if the true architecture were in fact highly polygenic (Fay, 2013).

More recently, numerous studies have worked to advance the power and resolution of QTL mapping studies, and have begun to shed light on the discrepancy with GWAS (Bloom et al., 2013; Bloom et al., 2015; She and Jarosz, 2018; Bloom et al., 2019; Cubillos et al., 2011). One direction has been to use advanced crosses to introduce more recombination breakpoints into mapping panels (She and Jarosz, 2018). This improves fine-mapping resolution and under some circumstances may be able to resolve composite QTL into individual causal loci, but it does not in itself improve power to detect small-effect alleles. Another approach is to use a multiparental cross (Cubillos et al., 2013) or multiple individual crosses (e.g. in a round-robin mating; Bloom et al., 2019). Several recent studies have constructed somewhat larger mapping panels with this type of design (as many as 14,000 segregants; Bloom et al., 2019); these offer the potential to gain more insight into trait architecture by surveying a broader spectrum of natural variation that could potentially contribute to phenotype. However, because multiparental crosses reduce the allele frequency of each variant (and in round-robin schemes each variant is present in only a few matings), these studies also have limited power to detect small-effect alleles. Finally, several recent studies have constructed large panels of diploid genotypes by mating smaller pools of haploid parents (e.g. a 384 × 104 mating leading to 18,126 F6 diploids; Jakobson and Jarosz, 2019). These studies are essential to understand potential dominance effects. However, the ability to identify small-effect alleles scales only with the number of unique haploid parents rather than the number of diploid genotypes, so these studies also lack power for this purpose. Thus, previous studies have been unable to observe the polygenic regime of complex traits or to offer insight into its consequences.

Here, rather than adopting any of these more complex study designs, we sought to increase the power and resolution of QTL mapping in budding yeast simply by dramatically increasing sample size. To do so, we introduce a barcoded bulk QTL (BB-QTL) mapping approach that allows us to construct and measure phenotypes in a panel of 100,000 F1 segregants from a single yeast cross, a sample size almost two orders of magnitude larger than the current state of the art (Figure 1A). We combined several recent technical advances to overcome the challenges of QTL mapping at the scale of 100,000 segregants: (i) unique DNA barcoding of every strain, which allows us to conduct sequencing-based bulk phenotype measurements; (ii) a highly multiplexed sequencing approach that exploits our knowledge of the parental genotypes to accurately infer the genotype of each segregant from low-coverage (<1x) sequence data; (iii) liquid handling robotics and combinatorial pooling to create, array, manipulate, and store this segregant collection in 96/384-well plates; and (iv) a highly conservative cross-validated forward search approach to confidently infer large numbers of small-effect QTL.

Cross design, genotyping, phenotyping, and barcode association.

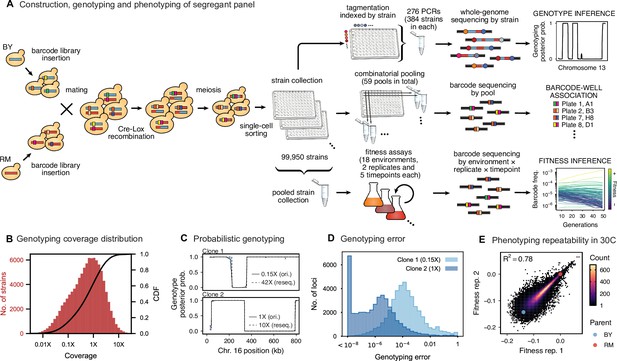

(A) Construction, genotyping, and phenotyping of segregant panel. Founding strains BY (blue) and RM (red) are transformed with diverse barcode libraries (colored rectangles) and mated in bulk. Cre recombination combines barcodes onto the same chromosome. After meiosis, sporulation, and selection for barcode retention, we sort single haploid cells into 96-well plates. Top: whole-genome sequencing of segregants via multiplexed tagmentation. Middle: barcode-well association by combinatorial pooling. Bottom: bulk phenotyping by pooled competition assays and barcode frequency tracking. See Figure 1—figure supplements 1–3, and Materials and methods for details. (B) Histogram and cumulative distribution function (CDF) of genotyping coverage of our panel (Figure 1—source data 1). (C) Inferred probabilistic genotypes for two representative individuals from low coverage (solid) and high coverage (dashed) sequencing, with the genotyping error (difference between low and high coverage probabilistic genotypes) indicated by shaded blue regions (Figure 1—source data 2). (D) Distribution of genotyping error by SNP for the two individuals shown in (C). (E) Reproducibility of phenotype measurements in the 30 °C environment (see Figure 1—figure supplement 4 for other environments). Here, fitness values are inferred on data from each individual replicate assay. For all other analyses, we use fitness values jointly inferred across both replicates (see Appendix 2, Figure 1—source data 3).


Figure 1—source data 1

Genotyping coverage of all strains in our panel.

- https://cdn.elifesciences.org/articles/73983/elife-73983-fig1-data1-v2.txt


Figure 1—source data 2

Inferred genotype for resequenced clones in Chr XVI.

- https://cdn.elifesciences.org/articles/73983/elife-73983-fig1-data2-v2.txt


Figure 1—source data 3

Replicate fitness measurements at 30 °C.

- https://cdn.elifesciences.org/articles/73983/elife-73983-fig1-data3-v2.txt

Using this BB-QTL approach, we mapped the genetic basis of 18 complex phenotypes. Despite the fact that earlier lower-powered QTL mapping studies in yeast have successfully explained most or all of the heritability of similar phenotypes with models involving only a handful of loci, we find that the increased power of our approach reveals that these traits are in fact highly polygenic, with more than a hundred causal loci contributing to almost every phenotype. We also exploit our increased power to investigate widespread patterns of pleiotropy across the eighteen phenotypes, and to analyze the role of epistatic interactions in the genetic architecture of each trait.

Results

Construction of the barcoded segregant panel

To generate our segregant collection, we began by mating a laboratory (BY) and a vineyard (RM) strain (Figure 1A), which differ at 41,594 single-nucleotide polymorphisms (SNPs) and vary in many relevant phenotypes (Bloom et al., 2013). We labeled each parent strain with diverse DNA barcodes (random sequences of 16 nucleotides) to create pools of each parent that are isogenic except for this barcode (12 and 23 pools of ∼1000 unique barcodes in the RM and BY parental pools, respectively). Barcodes are integrated at a neutral locus containing Cre-Lox machinery for combining barcodes, similar to the ‘renewable’ barcoding system we introduced in recent work (Nguyen Ba et al., 2019). We then created 276 sets by mating all combinations of parental pools (12 × 23) to create heterozygous RM/BY diploids, each of which contains one barcode from each parent. After mating, we induced Cre-Lox recombination to assemble the two barcodes onto the same chromosome, creating a 32-basepair double barcode. After sporulating the diploids and selecting for doubly barcoded haploid MATa offspring using a mating-type-specific promoter and selection markers (Tong et al., 2001), we used single-cell sorting to select ∼100,000 random segregants and array them into individual wells of 1,104 96-well plates. Because there are over 1 million possible barcodes per set and only 384 offspring are selected per set, this random sorting is highly unlikely to select duplicates, allowing us to produce a strain collection with one uniquely barcoded genotype in each well that can be manipulated with liquid-handling robotics. Finally, we identified the barcode associated with each segregant by constructing orthogonal pools (e.g. all segregants in a given 96-well plate, all segregants in row A of any 96-well plate, all segregants from a set, etc.) and sequencing the barcode locus in each pool. This combinatorial pooling scheme allows us to infer the barcode associated with each segregant in each individual well, based on the unique set of pools in which a given barcode appears (Erlich et al., 2009).
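The well-decoding logic behind this combinatorial pooling can be sketched as follows. This is a simplified illustration under stated assumptions: it uses only plate, row, and column pools (column pools are our assumption, covered by the "etc." above), ignores set pools and sequencing noise, and treats any barcode seen in zero or several pools of one type as ambiguous. The function names are hypothetical. The sketch also includes a birthday-problem estimate of expected duplicate barcodes per set, assuming 384 segregants drawn uniformly from about 1,000 × 1,000 possible double barcodes.

```python
from collections import defaultdict


def decode_wells(pool_reads):
    """Map each barcode to a (plate, row, column) well.

    pool_reads: dict mapping a pool label such as ("plate", 7), ("row", "A"),
    or ("col", 3) to the set of barcodes sequenced in that pool (hypothetical layout).
    A barcode is decoded only if it appears in exactly one pool of each type.
    """
    seen = defaultdict(lambda: defaultdict(set))
    for (kind, label), barcodes in pool_reads.items():
        for bc in barcodes:
            seen[bc][kind].add(label)
    wells, ambiguous = {}, []
    for bc, by_kind in seen.items():
        if all(len(by_kind.get(k, ())) == 1 for k in ("plate", "row", "col")):
            wells[bc] = tuple(next(iter(by_kind[k])) for k in ("plate", "row", "col"))
        else:
            ambiguous.append(bc)
    return wells, ambiguous


def expected_duplicate_pairs(n_sorted, n_possible):
    # Birthday problem: expected number of colliding pairs among n_sorted
    # draws from n_possible equally likely barcodes.
    return n_sorted * (n_sorted - 1) / 2 / n_possible


pools = {
    ("plate", 1): {"AAGT", "CCTA"},
    ("row", "A"): {"AAGT"},
    ("row", "B"): {"CCTA"},
    ("col", 5): {"AAGT", "CCTA"},
}
wells, ambiguous = decode_wells(pools)
print(wells)
print(round(expected_duplicate_pairs(384, 1_000_000), 3))  # 0.074
```

With roughly 0.07 expected colliding pairs per set under these assumptions, most sets contain no duplicate barcodes, in line with the argument in the text.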

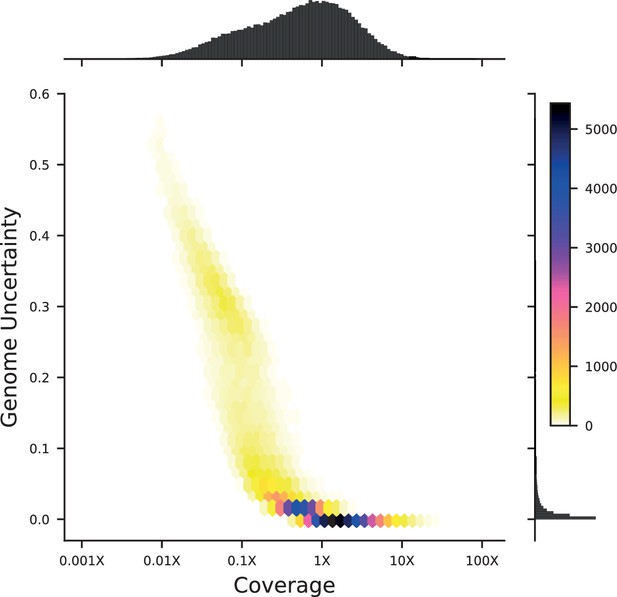

Inferring segregant genotypes

We next conducted whole-genome sequencing of every strain using an automated library preparation pipeline that makes use of custom indexed adapters to conduct tagmentation in 384-well plates, after which samples can be pooled for downstream library preparation (Figure 1A). To limit sequencing costs, we infer segregant genotypes from low-coverage sequencing data (median coverage of 0.6x per segregant; Figure 1B). We can obtain high genotyping accuracy despite such low coverage thanks to our cross design: since we use an F1 rather than an advanced cross, we have a high density of SNPs relative to recombination breakpoints in each individual (>700 SNPs between recombination breakpoints on average). Exploiting this fact in combination with our knowledge of the parental genotypes, we developed a Hidden Markov Model (HMM) to infer the complete segregant genotypes from these data (see Appendix 1). This HMM is similar in spirit to earlier imputation approaches (Arends et al., 2010; Marchini and Howie, 2010; Bilton et al., 2018); it infers genotypes at unobserved loci (and corrects for sequencing errors and index-swapping artifacts) by assuming that each segregant consists of stretches of RM and BY loci separated by relatively sparse recombination events. We note that this model produces probabilistic estimates of genotypes (i.e. the posterior probability that a segregant's genotype is RM or BY at each SNP; Figure 1C), which we account for in our analysis below.
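The imputation idea can be illustrated with a two-state HMM (BY or RM) run along the SNPs of one chromosome using the standard forward-backward algorithm. This is a minimal sketch under stated assumptions, not the model described in Appendix 1: a single per-read error rate `err` lumps sequencing errors and index swapping together, the per-interval breakpoint probabilities `switch_probs` are supplied by the caller (for example, hypothetically derived from a genetic map), and the function name is illustrative.

```python
def genotype_posteriors(obs, switch_probs, err=0.01):
    """Posterior P(RM) at each SNP for one haploid segregant (two-state HMM).

    obs: list of (n_by_reads, n_rm_reads) per SNP, in chromosome order;
         (0, 0) means the SNP was not covered.
    switch_probs: length len(obs) - 1; assumed probability of a breakpoint
         between SNP i and SNP i + 1.
    err: assumed per-read error rate (sequencing error or index swapping).
    """
    n = len(obs)

    def emit(i, state):
        n_by, n_rm = obs[i]
        # state 0 = BY, state 1 = RM; reads supporting the other parent are errors
        match, mismatch = (n_by, n_rm) if state == 0 else (n_rm, n_by)
        return (1 - err) ** match * err ** mismatch

    # Forward pass, normalized at each SNP to avoid numerical underflow.
    fwd, prev = [], [0.5, 0.5]  # F1 prior: each parental allele equally likely
    for i in range(n):
        if i > 0:
            r = switch_probs[i - 1]
            prev = [prev[0] * (1 - r) + prev[1] * r,
                    prev[1] * (1 - r) + prev[0] * r]
        cur = [prev[s] * emit(i, s) for s in (0, 1)]
        z = sum(cur)
        cur = [c / z for c in cur]
        fwd.append(cur)
        prev = cur

    # Backward pass (also normalized), combined with the forward pass.
    post, bwd = [0.0] * n, [1.0, 1.0]
    for i in range(n - 1, -1, -1):
        p = [fwd[i][s] * bwd[s] for s in (0, 1)]
        post[i] = p[1] / (p[0] + p[1])
        if i > 0:
            r = switch_probs[i - 1]
            e = [emit(i, s) * bwd[s] for s in (0, 1)]
            bwd = [(1 - r) * e[0] + r * e[1], r * e[0] + (1 - r) * e[1]]
            z = sum(bwd)
            bwd = [b / z for b in bwd]
    return post


# Reads support BY at the first two SNPs and RM at the last two; SNPs 2-3 are uncovered.
obs = [(1, 0), (1, 0), (0, 0), (0, 0), (0, 1), (0, 1)]
post = genotype_posteriors(obs, switch_probs=[0.01] * 5)
print([round(p, 2) for p in post])
```

In this toy example the uncovered SNPs receive intermediate posteriors (roughly 1/3 and 2/3 RM), because the single breakpoint could fall in any of the three intervals between the last BY read and the first RM read. This is the same kind of uncertainty near breakpoints that the text describes.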

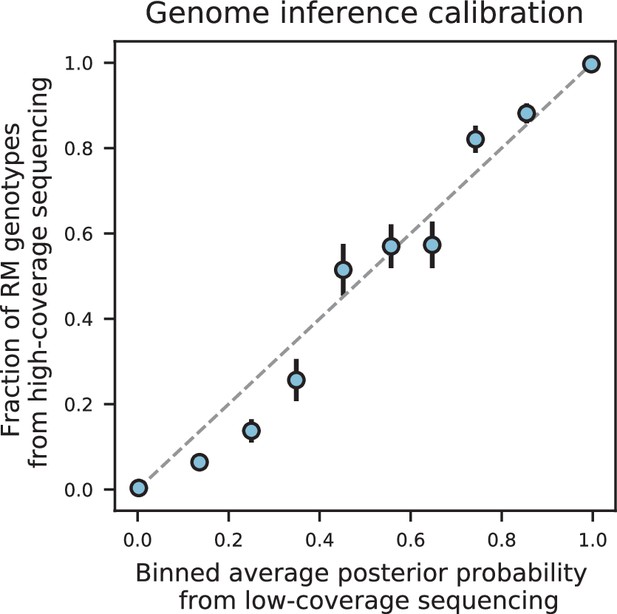

We assessed two key aspects of the performance of this sequencing approach: the confidence with which it infers genotypes, and the accuracy of the genotypes assigned. We find that at 0.1x coverage and above, our HMM approach confidently assigns genotypes at almost all loci (posterior probability of >92% of the inferred genotype at >99% of loci; see Appendix 1—figure 2 and Appendix 1 for a discussion of our validation of these posterior probability estimates). Loci not confidently assigned to either parental genotype largely correspond to SNPs in the immediate vicinity of breakpoints, which cannot be precisely resolved with low-coverage sequencing (we note that these uncertainties do affect mapping resolution, as the precise location of breakpoints is important for this purpose; see Appendix 1-1.4 for an extensive discussion and analysis of this uncertainty). To assess the accuracy of our genotyping, we conducted high-coverage sequencing of a small subset of segregants and compared the results to the inferred genotypes from our low-coverage data. We find that the genotyping is accurate, with detectable error only very near recombination breakpoints (Figure 1C and D). In addition, we find that our posterior probabilities are well calibrated (e.g. 80% of the loci with an RM posterior probability of 0.8 are indeed RM; see Appendix 1). We also note that, as expected, most SNPs are present across our segregant panel at an allele frequency of 0.5 (Figure 1—figure supplement 3), except for a few marker loci that are selected during engineering of the segregants.
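Calibration here means that among loci assigned a posterior P(RM) near 0.8, about 80% turn out to be RM in the high-coverage data. A minimal calibration check can be sketched as below, assuming paired lists of low-coverage posteriors and high-coverage "truth" genotypes; the function name and equal-width binning scheme are illustrative assumptions, not the validation procedure of Appendix 1.

```python
def calibration_table(posteriors, truths, n_bins=5):
    """Bin posterior P(RM) and report the observed fraction of RM genotypes per bin.

    posteriors: posterior P(RM) from low-coverage inference (floats in [0, 1])
    truths: matching 0/1 genotypes (1 = RM) from high-coverage resequencing
    Returns (bin_low, bin_high, mean_posterior, fraction_rm, count) for non-empty bins.
    """
    bins = [[] for _ in range(n_bins)]
    for p, t in zip(posteriors, truths):
        idx = min(int(p * n_bins), n_bins - 1)  # put p == 1.0 in the top bin
        bins[idx].append((p, t))
    table = []
    for k, members in enumerate(bins):
        if not members:
            continue
        mean_p = sum(p for p, _ in members) / len(members)
        frac_rm = sum(t for _, t in members) / len(members)
        table.append((k / n_bins, (k + 1) / n_bins, mean_p, frac_rm, len(members)))
    return table


# Well calibrated: loci called at 0.8 are RM 80% of the time, loci at 0.1 are never RM here.
for row in calibration_table([0.8] * 5 + [0.1] * 2, [1, 1, 1, 1, 0, 0, 0]):
    print(row)
```

For a well-calibrated model, `mean_posterior` and `fraction_rm` should be close in every bin that contains many loci.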

Barcoded bulk phenotype measurements

Earlier QTL mapping studies in budding yeast have typically assayed phenotypes for each segregant in their mapping panels independently, primarily by measuring colony sizes on solid agar plates (Steinmetz et al., 2002; Bloom et al., 2013; Bloom et al., 2015; She and Jarosz, 2018; Bloom et al., 2019; Jakobson and Jarosz, 2019). Although these colony size phenotypes can be defined on a variety of solid media and are relatively high throughput (often conducted in 384-well format), they are not readily scalable to measurements of 100,000 segregants.

Here, we exploit our barcoding system to instead measure phenotypes for all segregants in parallel, in a single bulk pooled assay for each phenotype. The basic idea is straightforward: we combine all segregants into a pool, sequence the barcode locus to measure the relative frequency of each segregant, apply some selection pressure, and then sequence again to measure how relative frequencies change (Smith et al., 2009). These bulk assays are easily scalable and can be applied to any phenotype that can be measured based on changes in relative strain frequencies. Because we only need to sequence the barcode region, we can sequence deeply to obtain high-resolution phenotype measurements at modest cost. In addition, we can correct sequencing errors because the set of true barcodes is known in advance from combinatorial pooling (see above). Importantly, this system allows us to track the frequency changes of each individual in the pool, assigning a phenotype to each specific segregant genotype. This stands in contrast to ‘bulk segregant analysis’ approaches that use whole-genome sequencing of pooled segregant panels to track frequency changes of alleles rather than individual genotypes (Ehrenreich et al., 2010; Michelmore et al., 1991); our approach increases power and allows us to study interaction effects between loci across the genome.
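Because the full set of true barcodes is known in advance from combinatorial pooling, sequencing errors in barcode reads can be corrected by matching each read to a known barcode. A minimal sketch follows. It assumes substitution errors only and a tolerance of one mismatch, both of which are our assumptions since this section does not specify the study's correction procedure. It precomputes every one-mismatch variant of each known barcode and discards variants that are ambiguous between two true barcodes. The function names are hypothetical.

```python
def build_corrector(known, alphabet="ACGT"):
    """Map each known barcode, and each 1-mismatch variant of it, to the true barcode.

    known: set of true barcodes. Variants reachable from two different true
    barcodes are dropped as ambiguous; exact matches always take priority.
    """
    lookup = {bc: bc for bc in known}
    ambiguous = set()
    for bc in known:
        for i, orig in enumerate(bc):
            for base in alphabet:
                if base == orig:
                    continue
                variant = bc[:i] + base + bc[i + 1:]
                if variant in known:
                    continue  # an exact match to another true barcode wins
                if variant in lookup and lookup[variant] != bc:
                    ambiguous.add(variant)
                else:
                    lookup[variant] = bc
    for v in ambiguous:
        lookup.pop(v, None)
    return lookup


def count_barcodes(reads, known):
    """Count error-corrected reads per true barcode; return (counts, n_unassigned)."""
    lookup = build_corrector(known)
    counts = dict.fromkeys(known, 0)
    unassigned = 0
    for read in reads:
        bc = lookup.get(read)
        if bc is None:
            unassigned += 1
        else:
            counts[bc] += 1
    return counts, unassigned


counts, unassigned = count_barcodes(["AAAA", "AAAT", "CCCC", "GGGG"], {"AAAA", "CCCC"})
print(counts, unassigned)
```

Precomputing variants trades memory (three variants per position per barcode) for constant-time lookup of each read, which matters when many millions of reads are mapped against roughly 100,000 known barcodes.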

Using this BB-QTL system, we investigate eighteen complex traits, defined as competitive fitness in a variety of liquid growth conditions (‘environments’), including minimal, natural, and rich media with several carbon sources and a range of chemical and temperature stressors (Table 1). To measure these phenotypes, we pool all strains and track barcode frequencies through 49 generations of competition. We use a maximum likelihood model to jointly infer the relative fitness of each segregant in each assay, a value related to the instantaneous exponential rate of change in frequency of a strain during the course of the assay (Figure 1A, lower-right inset; see Appendix 2). These measurements are consistent between replicates (average R² between replicate assays of 0.77), although we note that the inherent correlation between fitness and barcode read counts means that measurement errors are inversely correlated with fitness (Figure 1E; Figure 1—figure supplement 4). While genetic changes such as de novo mutations and ploidy changes can occur during bulk selection, we estimate their rates to be low enough that they affect only a small fraction of barcode lineages and thus do not significantly bias the inference of QTL effects across the strain collection (see Appendix 2).
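The idea behind these fitness estimates can be illustrated with a much simpler estimator than the maximum likelihood model of Appendix 2: the least-squares slope of each barcode's log frequency against generations. This is a swapped-in, simplified technique for illustration only. The pseudocount, the input format (one dict of barcode read counts per timepoint), and the function name are our assumptions. Because log frequencies are measured relative to the whole pool, all slopes share a common offset (the pool's mean fitness), so only differences between barcodes are meaningful.

```python
import math


def log_slope_fitness(counts_by_time, generations, pseudocount=0.5):
    """Per-barcode fitness as the least-squares slope of log frequency vs. generations.

    counts_by_time: list (one entry per timepoint) of dicts barcode -> read count
    generations: matching list of generation numbers
    Returns dict barcode -> slope (per generation), up to a shared offset.
    """
    barcodes = set().union(*counts_by_time)
    t_mean = sum(generations) / len(generations)
    sxx = sum((t - t_mean) ** 2 for t in generations)
    totals = [sum(c.values()) for c in counts_by_time]
    fitness = {}
    for bc in barcodes:
        # The pseudocount keeps log() finite when a lineage drops out of the reads.
        logf = [math.log((c.get(bc, 0) + pseudocount) / n)
                for c, n in zip(counts_by_time, totals)]
        y_mean = sum(logf) / len(logf)
        sxy = sum((t - t_mean) * (y - y_mean) for t, y in zip(generations, logf))
        fitness[bc] = sxy / sxx
    return fitness


# Barcode A doubles relative to B every 10 generations.
counts = [{"A": 100, "B": 100}, {"A": 200, "B": 100}, {"A": 400, "B": 100}]
est = log_slope_fitness(counts, [0, 10, 20])
print(est["A"] - est["B"])  # close to ln(2) / 10
```

Barcodes that fall to very low read counts have noisier log frequencies, which offers one intuition for why measurement errors are larger for low-fitness strains.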

Phenotyping growth conditions.

Summary of the eighteen competitive fitness phenotypes we analyze in this study. All assays were conducted at 30 °C, except when stated otherwise. YP: 1% yeast extract, 2% peptone. YPD: 1% yeast extract, 2% peptone, 2% glucose. SD: synthetic defined medium, 2% glucose. YNB: yeast nitrogen base, 2% glucose. Numbers of inferred additive QTL and epistatic interactions are also shown.

| Name | Description | Additive QTL | Epistatic QTL |
|---|---|---|---|
| 23 C | YPD, 23 °C | 112 | 185 |
| 25 C | YPD, 25 °C | 134 | 189 |
| 27 C | YPD, 27 °C | 149 | 255 |
| 30 C | YPD, 30 °C | 159 | 247 |
| 33 C | YPD, 33 °C | 147 | 216 |
| 35 C | YPD, 35 °C | 117 | 250 |
| 37 C | YPD, 37 °C | 128 | 265 |
| sds | YPD, 0.005% (w/v) SDS | 175 | 263 |
| raff | YP, 2% (w/v) raffinose | 167 | 221 |
| mann | YP, 2% (w/v) mannose | 169 | 341 |
| cu | YPD, 1 mM copper(II) sulfate | 143 | 225 |
| eth | YPD, 5% (v/v) ethanol | 149 | 247 |
| suloc | YPD, 50 µM suloctidil | 173 | 314 |
| 4NQO | SD, 0.05 µg/ml 4-nitroquinoline 1-oxide | 153 | 394 |
| ynb | YNB, w/o AAs, w/ ammonium sulfate | 145 | 303 |
| mol | molasses, diluted to 20% (w/v) sugars | 111 | 235 |
| gu | YPD, 6 mM guanidinium chloride | 185 | 277 |
| li | YPD, 20 mM lithium acetate | 83 | 42 |

Modified stepwise cross-validated forward search approach to mapping QTL

With genotype and phenotype data for each segregant in hand, we next sought to map the locations and effects of QTL. The typical approach to inferring causal loci would be to use a forward stepwise regression (Bloom et al., 2013; Arends et al., 2010). This method proceeds by first computing a statistic such as a p-value or LOD score for each SNP independently, to test for a statistical association between that SNP and the phenotype. The most significant SNP is identified as a causal locus, and its estimated effect is regressed out of the data. This process is then repeated iteratively to identify additional causal loci, until no remaining locus has a statistic exceeding a predetermined significance threshold. This threshold is defined based on a desired level of genome-wide significance (e.g. based on a null expectation from permutation tests or assumptions about the numbers of true causal loci). However, although this approach is fast and simple and can identify large numbers of QTL, it is not conservative. Variables added in a stepwise approach do not follow the claimed F or χ² distribution, so using p-values or related statistics as a selection criterion is known to produce false positives, especially at large sample sizes or in the presence of strong linkage (Smith, 2018). Because our primary goal is to dissect the extent of polygenicity by resolving small-effect loci and decomposing ‘composite’ QTL, these false positives are particularly problematic, and we therefore cannot use this traditional approach.

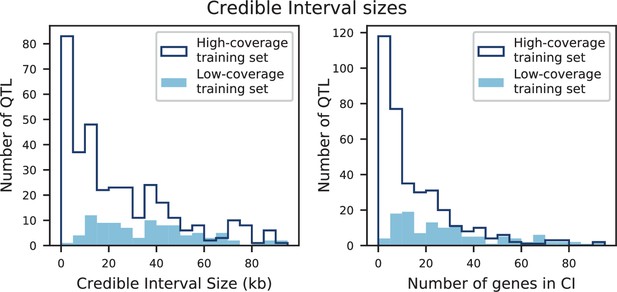

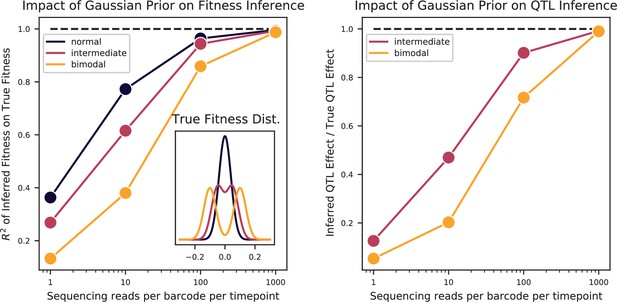

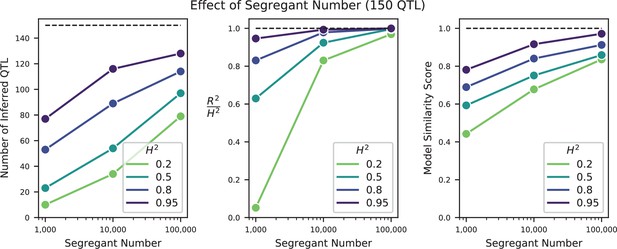

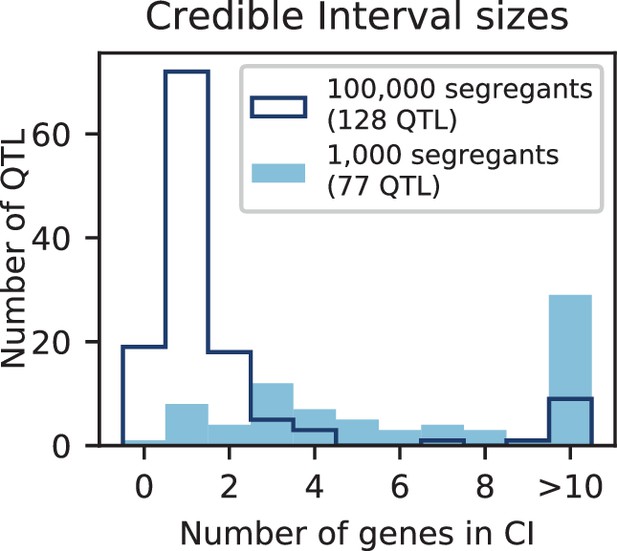

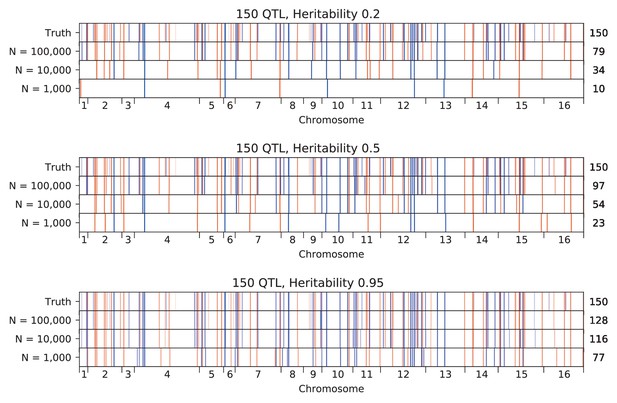

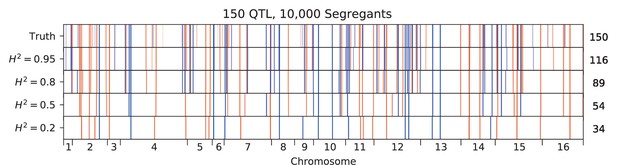

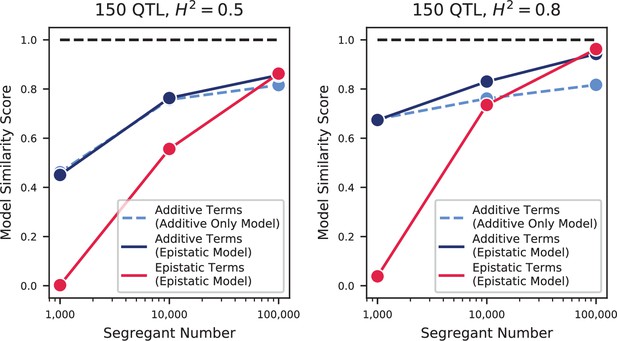

Fortunately, the high statistical power of our study design allows us to address the question of polygenicity using a more conservative method with a lower false discovery rate. To do so, we carried out QTL mapping through a modified stepwise regression approach, with three key differences compared to previous methods (see Appendix 3 for details). First, we use cross-validation rather than statistical significance to terminate the model search procedure, which reduces the false positive rate. Specifically, we divide the data into training and test sets (90% and 10% of segregants, respectively, chosen randomly), and add QTL iteratively in a forward stepwise regression on the training set. We terminate this process when performance on the test set declines, and use this point to define an L0-norm sparsity penalty on the number of QTL. We repeat this process for all possible divisions of the data to identify the average sparsity penalty, and then use this sparsity penalty to infer our final model from all the data (in addition, an outer loop involving a validation set is used to assess the performance of our final model). Second, we jointly re-optimize inferred effect sizes (i.e. the estimated effect on fitness of having the RM versus the BY version of a QTL) and lead SNP positions (i.e. our best estimate of the actual causal SNP for each QTL) at each step, which further reduces the bias introduced by the greedy forward search procedure. Third, we estimate the 95% credible interval around each lead SNP using a Bayesian method rather than LOD-drop methods, as the former is more suitable for polygenic architectures. We describe and validate this modified stepwise regression approach in detail in Appendix 3. Simulations under various QTL architectures show that this approach has a low false positive rate, accurately identifies lead SNPs and credible intervals even with moderate linkage, and generally calls fewer QTL than are present in the true model, missing only QTL of extremely small effect. The behavior of this approach is simple and intuitive: the algorithm greedily adds a QTL to the model only if its expected contribution to the total phenotypic variance exceeds the bias and added variance of the forward search procedure, both of which are greatly reduced at large sample size. Thus, it may fail to identify variants of very small effect, and may fail to break up composite QTL in extremely strong linkage.
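The core of the cross-validated stopping rule can be sketched in pure Python as below. This is a simplified sketch under our own assumptions, not the Appendix 3 implementation. At each step it adds the SNP most correlated with the current residuals and jointly refits all effect sizes, but it does not re-optimize lead SNP positions, compute Bayesian credible intervals, or use an outer validation loop. It also summarizes the "average sparsity penalty" as the rounded mean number of QTL added before held-out error stops improving. All function names and the toy data are hypothetical.

```python
import random


def solve(A, b):
    """Solve the linear system A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(n):
            if r != col:
                f = M[r][col] / M[col][col]
                M[r] = [a - f * c for a, c in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


def fit(G, y, idx, ridge=1e-9):
    """Jointly refit the intercept and the effects of all selected SNP columns (least squares)."""
    X = [[1.0] + [g[j] for j in idx] for g in G]
    k = len(idx) + 1
    XtX = [[sum(x[a] * x[b] for x in X) + (ridge if a == b else 0.0) for b in range(k)]
           for a in range(k)]
    Xty = [sum(x[a] * yi for x, yi in zip(X, y)) for a in range(k)]
    return solve(XtX, Xty)


def predict(G, idx, coef):
    return [coef[0] + sum(c * g[j] for c, j in zip(coef[1:], idx)) for g in G]


def mse(y, yhat):
    return sum((a - b) ** 2 for a, b in zip(y, yhat)) / len(y)


def forward_path(G, y, max_qtl):
    """Greedy forward search: add the SNP most correlated with the residuals, then refit all effects."""
    idx, coef = [], [sum(y) / len(y)]
    for _ in range(max_qtl):
        resid = [yi - yh for yi, yh in zip(y, predict(G, idx, coef))]

        def score(j):
            col = [g[j] for g in G]
            m = sum(col) / len(col)
            var = sum((c - m) ** 2 for c in col)
            cov = sum((c - m) * r for c, r in zip(col, resid))
            return abs(cov) / var ** 0.5 if var > 0 else 0.0

        best = max((j for j in range(len(G[0])) if j not in idx), key=score)
        idx.append(best)
        coef = fit(G, y, idx)
    return idx


def cv_num_qtl(G, y, max_qtl, n_folds=10, seed=0):
    """For each 90/10 split, count QTL added before held-out MSE stops improving; return the rounded mean."""
    order = list(range(len(y)))
    random.Random(seed).shuffle(order)
    chosen = []
    for f in range(n_folds):
        test = set(order[f::n_folds])
        tr = [i for i in order if i not in test]
        te = sorted(test)
        Gtr, ytr = [G[i] for i in tr], [y[i] for i in tr]
        Gte, yte = [G[i] for i in te], [y[i] for i in te]
        path = forward_path(Gtr, ytr, max_qtl)
        best_k = 0
        best_err = mse(yte, [sum(ytr) / len(ytr)] * len(yte))  # intercept-only model
        for k in range(1, max_qtl + 1):
            err = mse(yte, predict(Gte, path[:k], fit(Gtr, ytr, path[:k])))
            if err >= best_err:
                break  # held-out performance declined: stop adding QTL
            best_k, best_err = k, err
        chosen.append(best_k)
    return round(sum(chosen) / n_folds)


# Toy data: 200 haploid segregants, 20 unlinked SNPs, true QTL at SNPs 3 and 11.
rng = random.Random(1)
G = [[rng.randint(0, 1) for _ in range(20)] for _ in range(200)]
y = [1.0 * g[3] - 0.8 * g[11] + rng.gauss(0, 0.3) for g in G]
k = cv_num_qtl(G, y, max_qtl=5)
final_qtl = forward_path(G, y, k)  # final model fitted on all data
print(k, final_qtl)
```

Stopping on held-out error rather than on a p-value threshold means a spurious SNP is kept only if it actually improves prediction on unseen segregants, which is the property the text relies on to keep false positives low.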

Resolving the highly polygenic architecture of complex phenotypes in yeast

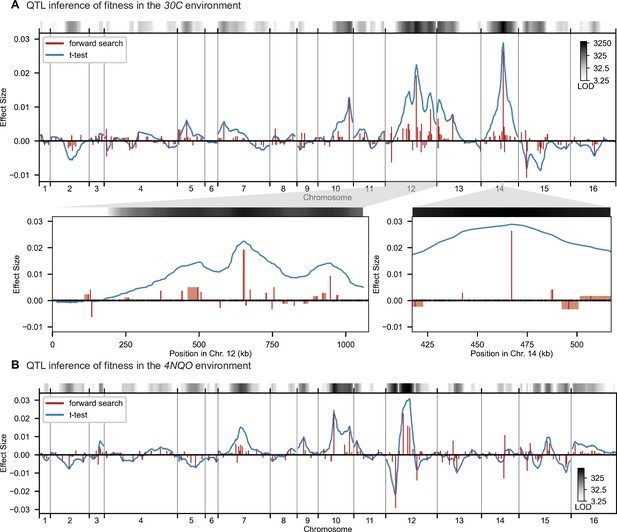

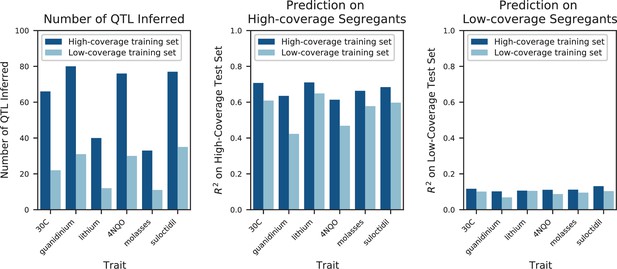

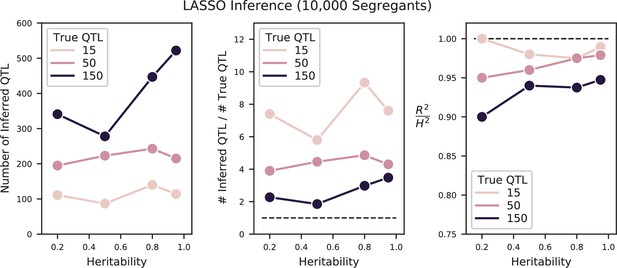

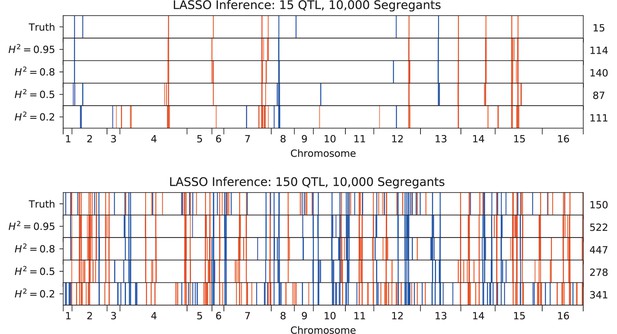

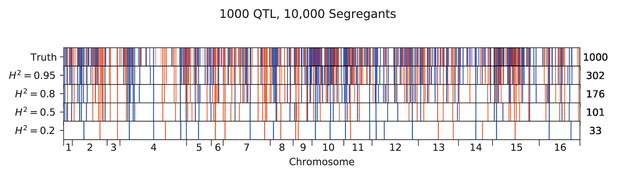

We used our modified stepwise cross-validated forward search to infer the genetic basis of the 18 phenotypes described in Table 1, assuming an additive model. We find that these phenotypes are highly polygenic: we identify well over 100 QTL spread throughout the genome for almost every trait, an order of magnitude more than that found for similar phenotypes in most earlier studies ( of SNPs in our panel; Figures 2 and 3B). This increase can be directly attributed to our large sample size: inference on a downsampled dataset of 1000 individuals detects no more than 30 QTL for any trait (see Appendix 3).

High-resolution QTL mapping.

QTL mapping for (A) YPD at 30 °C and (B) SD with 4-nitroquinoline (4NQO). Inferred QTL are shown as red bars; bar height shows effect size and red shaded regions represent credible intervals. For contrast, effect sizes inferred by a Student’s t-test at each locus are shown in blue. Gray bars at top indicate loci with log-odds (LOD) scores surpassing genome-wide significance in this t-test, with shading level corresponding to log-LOD score. See Figure 2—figure supplements 1–4 for other environments. See Supplementary file 2 for all inferred additive QTL models.

Genetic architecture and pleiotropy.

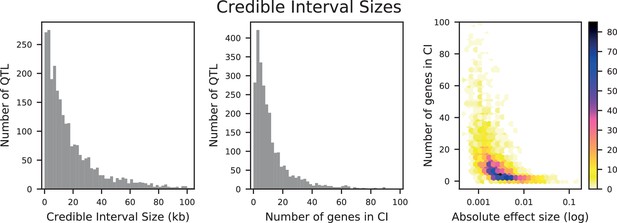

(A) Pairwise Pearson correlations between phenotype measurements, ordered by hierarchical clustering (Figure 3—source data 1). (B) Inferred genetic architecture for each trait. Each inferred QTL is denoted by a red or blue line for a positive or negative effect of the RM allele, respectively; color intensity denotes effect size on a log scale. Notable genes are indicated above. See Figure 3—figure supplement 1 for effect size comparison of the most pleiotropic genes. (C) Smoothed distribution of absolute effect sizes for each trait, normalized by the median effect for each trait. See Figure 3—figure supplement 2 for a breakdown of the distributions by QTL effect sign. (D) Distribution of the number of genes within the 95% credible interval for each QTL (Figure 3—source data 2). (E) Distribution of SNP types. “High posterior” lead SNPs are those with >50% posterior probability. (F) Fractions of synonymous SNPs, nonsynonymous SNPs, and QTL lead SNPs as a function of their frequency in the 1011 Yeast Genomes panel (Figure 3—source data 3). (G) Pairwise model similarity scores (which quantify differences in QTL positions and effect sizes between traits; see Appendix 3) across traits. (H) Pairwise model similarity scores for each temperature trait against all other temperature traits (Figure 3—source data 4). See Figure 3—figure supplement 3 for effect size comparisons between related environments. (I) Cumulative distribution functions (CDFs) of differences in effect size for each locus between each pair of traits (orange). Grey traces represent null expectations (differences between cross-validation sets for the same trait). The least and most similar trait pairs are highlighted in red and purple, respectively, and indicated in the legend.


Figure 3—source data 1

Phenotypic correlation across environments.

- https://cdn.elifesciences.org/articles/73983/elife-73983-fig3-data1-v2.txt


Figure 3—source data 2

Number of genes within confidence intervals of inferred QTL.

- https://cdn.elifesciences.org/articles/73983/elife-73983-fig3-data2-v2.txt


Figure 3—source data 3

Frequency of lead SNPs in 1011 Yeast Genomes panel.

- https://cdn.elifesciences.org/articles/73983/elife-73983-fig3-data3-v2.txt


Figure 3—source data 4

Pairwise model similarity scores across environments.

- https://cdn.elifesciences.org/articles/73983/elife-73983-fig3-data4-v2.txt

The distribution of effect sizes of detected QTL shows a large enrichment of small-effect loci, and has similar shape (though different scale) across all phenotypes (Figure 3C), consistent with an exponential-like distribution above the limit of detection. This distribution suggests that further increases in sample size would reveal a further enrichment of even smaller-effect loci. While our SNP density is high relative to the recombination rate, our sample size is large enough that there are many individuals with a recombination breakpoint between any pair of neighboring SNPs (over 100 such individuals with breakpoints between each SNP on average). This allows us to precisely fine-map many of these QTL to causal genes or even nucleotides. We find that most QTL with substantial effect sizes are mapped to one or two genes, with dozens mapped to single SNPs (Figure 3D). In many cases these genes and precise causal nucleotides are consistent with previous mapping studies (e.g. MKT1, Deutschbauer and Davis, 2005; PHO84, Perlstein et al., 2007; HAP1, Brem et al., 2002); in some cases we resolve for the first time the causal SNP within a previously identified gene (e.g. IRA2, Smith and Kruglyak, 2008; VPS70, Duitama et al., 2014). However, we note that because our SNP panel does not capture all genetic variation, such as transposon insertions or small deletions, some QTL lead positions may tag linked variation rather than being causal themselves.
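The claim that, on average, over 100 individuals carry a breakpoint between any two neighboring SNPs can be checked with simple arithmetic from figures given earlier: 100,000 segregants, 41,594 SNPs, and >700 SNPs between breakpoints. The sketch assumes breakpoints are spread uniformly across SNP intervals. This is only a rough approximation, since SNP density and recombination rate vary along the genome. Because the text gives >700 SNPs per breakpoint, the result is an upper estimate of the average. The function name is hypothetical.

```python
def mean_breakpoints_per_interval(n_segregants, n_snps, snps_per_breakpoint):
    """Average number of segregants with a breakpoint between two adjacent SNPs,
    assuming breakpoints fall uniformly across the n_snps - 1 SNP intervals."""
    breakpoints_per_segregant = n_snps / snps_per_breakpoint  # about 59 here
    total_breakpoints = n_segregants * breakpoints_per_segregant
    return total_breakpoints / (n_snps - 1)


print(round(mean_breakpoints_per_interval(100_000, 41_594, 700)))  # 143
```

The estimate of roughly 140 individuals per interval is consistent with the "over 100" figure in the text. It also shows why sample size, rather than SNP density, limits fine-mapping resolution in an F1 design.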

The SNP density in our panel and the resolution of our approach tightly constrain these regions of linked variation, providing guidance for future studies of specific QTL, but as a whole we find that our collection of lead SNPs displays some characteristic features of causal variants. Across all identified lead SNPs, we observe a significant enrichment of nonsynonymous substitutions, especially when considering lead SNPs with posterior probability above 0.5 (Figure 3E; χ²-test), as expected for causal changes in protein function. Lead SNPs are also more likely to be found within disordered regions of proteins (1.22-fold increase; Fisher’s exact test), even when constrained to nonsynonymous variants (1.28-fold increase beyond the enrichment for nonsynonymous variants in disordered regions; Fisher’s exact test), indicating potential causal roles in regulation (Dyson and Wright, 2005). Lead SNP alleles, especially those with large effect size, are observed at significantly lower minor allele frequencies (MAF) in the 1011 Yeast Genomes collection (Peter et al., 2018) than random SNPs (Figure 3F; Fisher’s exact test considering alleles with effect >1% and rare alleles with MAF <5%), and minor alleles are more likely to be deleterious (permutation test) regardless of which parental allele is rarer. These results are consistent with the view that rare, strongly deleterious alleles subject to negative selection can contribute substantially to complex trait architecture (Bloom et al., 2019; Fournier et al., 2019). However, we were unable to detect strong evidence of directional selection (see Appendix 3-1.7), possibly as a consequence of high polygenicity or weak concordance between our assay environments and selection pressures in the wild.
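The enrichment statistics in this paragraph compare 2×2 tables. One example would be lead SNPs versus all other SNPs, split by whether they fall in a disordered protein region. A minimal pure-Python sketch of a fold enrichment and a two-sided Fisher's exact test is shown below. The table layout and the definition of fold enrichment as a ratio of proportions are our assumptions, and the counts used here are toy values, not data from this study. In practice, one would typically call an established implementation such as SciPy's `scipy.stats.fisher_exact`.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of all tables with the same margins
    that are no more likely than the observed table.
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    probs = {x: comb(col1, x) * comb(n - col1, row1 - x) / comb(n, row1)
             for x in range(lo, hi + 1)}
    p_obs = probs[a]
    # Small relative tolerance so tables tied with the observed one are counted.
    return min(1.0, sum(p for p in probs.values() if p <= p_obs * (1 + 1e-7)))


def fold_enrichment(a, b, c, d):
    """Rate in row 1 relative to row 2: (a / (a + b)) / (c / (c + d))."""
    return (a / (a + b)) / (c / (c + d))


# Toy table: rows = lead SNPs / other SNPs, columns = in disordered region / not.
print(fold_enrichment(3, 1, 1, 3), fisher_exact_two_sided(3, 1, 1, 3))
```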

Patterns of pleiotropy

Our eighteen assay environments range widely in their similarity to each other: some groups of traits exhibit a high degree of phenotype correlation across strains, such as rich medium at a gradient of temperatures, while other groups of traits are almost completely uncorrelated, such as molasses, rich medium with suloctidil, and rich medium with guanidinium (Figure 3A). Because many of these phenotypes are likely to involve overlapping aspects of cellular function, we expect the inferred genetic architectures to exhibit substantial pleiotropy, where individual variants are causal for multiple traits. In addition, in highly polygenic architectures, pleiotropy across highly dissimilar traits is also expected to emerge due to properties of the interconnected cellular network. For example, SNPs in regulatory genes may affect key functional targets (some of them regulatory themselves) that directly influence a given phenotype, as well as other functional targets that may, in turn, influence other phenotypes (Liu et al., 2019a).

Consistent with these expectations, we observe diverse patterns of shared QTL across traits (Figure 3B). To examine these pleiotropic patterns at the gene level, we group QTL across traits whose lead SNP is within the same gene (or, for intergenic lead SNPs, the nearest gene). In total, we identify 449 such pleiotropic genes with lead SNPs affecting multiple traits (see Appendix 3). These genes encompass the majority of QTL across all phenotypes and are highly enriched for regulatory function (Table 2) and for intrinsically disordered regions, which have been implicated in regulation (Fisher’s exact test; Dyson and Wright, 2005). The most pleiotropic genes (Figure 3—figure supplement 1) correspond to known variants frequently associated with quantitative variation in yeast (e.g. MKT1, HAP1, IRA2).

Table 2. Summary of significant GO enrichment terms for pleiotropic genes (Table 2—source data 1).

| GO ID | Term | Corrected p-value | # Genes |
|---|---|---|---|
| GO:0000981 | RNA polymerase II transcription factor activity, sequence-specific DNA binding | 0.000026 | 30 |
| GO:0140110 | Transcription regulator activity | 0.000066 | 42 |
| GO:0000976 | Transcription regulatory region sequence-specific DNA binding | 0.000095 | 29 |
| GO:0044212 | Transcription regulatory region DNA binding | 0.000113 | 29 |
| GO:0003700 | DNA binding transcription factor activity | 0.000148 | 31 |
| GO:0001067 | Regulatory region nucleic acid binding | 0.000161 | 29 |
| GO:0043565 | Sequence-specific DNA binding | 0.000269 | 41 |
| GO:0001227 | Transcriptional repressor activity, RNA polymerase II transcription regulatory region sequence-specific DNA binding | 0.000417 | 9 |
| GO:0003677 | DNA binding | 0.000453 | 65 |
| GO:0043167 | Ion binding | 0.000999 | 143 |
| GO:1990837 | Sequence-specific double-stranded DNA binding | 0.001449 | 32 |
| GO:0000977 | RNA polymerase II regulatory region sequence-specific DNA binding | 0.007709 | 21 |
| GO:0001012 | RNA polymerase II regulatory region DNA binding | 0.007709 | 21 |


Table 2—source data 1

Full results of GO analysis on pleiotropic genes.

- https://cdn.elifesciences.org/articles/73983/elife-73983-table2-data1-v2.txt

The highly polygenic nature of our phenotypes highlights the difficulty in identifying modules of target genes with interpretable functions related to the measured traits (Boyle et al., 2017). However, we can take advantage of our high-powered mapping approach to explore how pleiotropy leads to diverging phenotypes in different environments. Specifically, to obtain a global view of pleiotropy and characterize the shifting patterns of QTL effects across traits, we adopt a method inspired by sequence alignment strategies to match (or to leave unmatched) QTL from one trait with QTL from another trait, in a way that depends on the similarity in their effect sizes and the distance between their lead SNPs (see Appendix 3). From this, for each pair of environments we can find the change in effect size for each QTL, as well as an overall metric of model similarity (essentially a Pearson correlation coefficient between aligned model parameters, where a score of 1 indicates two identical models and a score of 0 indicates that no QTL are shared in both position and effect size). We find that pairwise model similarity scores recapitulate the phenotype correlation structure (Figure 3G), including smoothly varying similarity across the temperature gradient (Figure 3H), indicating that changes in our inferred model coefficients accurately capture patterns of pleiotropy.
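The alignment idea above can be sketched as a Needleman-Wunsch-style dynamic program over two position-sorted QTL lists. This is a hypothetical reconstruction, not the procedure in Appendix 3: the cost function (squared effect difference plus a tiny distance tie-breaker, with a QTL left unmatched costing its squared effect), the 20 kb matching window, the use of cumulative genome-wide positions, and the uncentered correlation (chosen so that two models sharing no QTL score exactly 0) are all illustrative assumptions.

```python
import math
from typing import List, Optional, Tuple

# Hypothetical representation: (cumulative genomic position in bp, effect size).
Qtl = Tuple[int, float]
Pair = Tuple[Optional[Qtl], Optional[Qtl]]


def align_qtl(a: List[Qtl], b: List[Qtl], max_dist: int = 20_000,
              dist_weight: float = 1e-12) -> List[Pair]:
    """Needleman-Wunsch-style global alignment of two position-sorted QTL lists.

    Matching two QTL costs their squared effect difference plus a small
    distance penalty and is only allowed within max_dist bp; leaving a QTL
    unmatched costs its squared effect (as if the other model had effect 0).
    """
    n, m = len(a), len(b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    move = [[""] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(n + 1):
        for j in range(m + 1):
            if i == 0 and j == 0:
                continue
            options = []
            if i > 0:  # a[i-1] left unmatched
                options.append((cost[i - 1][j] + a[i - 1][1] ** 2, "a"))
            if j > 0:  # b[j-1] left unmatched
                options.append((cost[i][j - 1] + b[j - 1][1] ** 2, "b"))
            if i > 0 and j > 0:
                d = abs(a[i - 1][0] - b[j - 1][0])
                if d <= max_dist:
                    c = (a[i - 1][1] - b[j - 1][1]) ** 2 + dist_weight * d
                    options.append((cost[i - 1][j - 1] + c, "m"))
            cost[i][j], move[i][j] = min(options)
    # Trace back from the bottom-right corner to recover the aligned pairs.
    pairs: List[Pair] = []
    i, j = n, m
    while i > 0 or j > 0:
        step = move[i][j]
        if step == "m":
            pairs.append((a[i - 1], b[j - 1]))
            i, j = i - 1, j - 1
        elif step == "a":
            pairs.append((a[i - 1], None))
            i -= 1
        else:
            pairs.append((None, b[j - 1]))
            j -= 1
    return pairs[::-1]


def model_similarity(pairs: List[Pair]) -> float:
    """Uncentered correlation of aligned effects (unmatched QTL count as 0)."""
    x = [p[1] if p is not None else 0.0 for p, _ in pairs]
    y = [q[1] if q is not None else 0.0 for _, q in pairs]
    num = sum(u * v for u, v in zip(x, y))
    den = math.sqrt(sum(u * u for u in x) * sum(v * v for v in y))
    return num / den if den > 0 else 0.0
```

The per-QTL change in effect size between two environments can then be read off each matched pair `(p, q)` as `q[1] - p[1]`.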

For most comparisons between environments, substantial effect size changes are distributed over all QTL, indicating a broad response to the environmental shift (Figure 3I). For example, while growth in Li (rich medium + lithium) is strongly affected by a single locus directly involved in salt tolerance (three tandem repeats of the ENA cluster in S288C, corresponding to 82% of explained variance; Wieland et al., 1995), 63 of the remaining 82 QTL are also detected at 30°C (rich medium only), explaining a further 15% of variance. To some extent, these 63 QTL may represent a ‘module’ of genes with functional relevance for growth in rich medium, but their effect sizes are far less correlated than would be expected from noise alone or for a similar pair of environments (e.g. 30°C and 27°C; Figure 3—figure supplement 3). Across the temperature gradient, although we observe high correlations between similar temperatures overall, these are not driven by specific subsets of genes with simple, interpretable monotonic changes in effect size. Indeed, effect size differences between temperature pairs are typically uncorrelated; thus, QTL that become more beneficial when moving from 30°C to 27°C may become less beneficial when moving from 27°C to 25°C or from 25°C to 23°C (Figure 3—figure supplement 3). Together, these patterns of pleiotropy reveal large numbers of regulatory variants with widespread, important, and yet somewhat unpredictable effects on diverse phenotypes, implicating a highly interconnected cellular network while obscuring potential signatures of specific functional genes or modules.

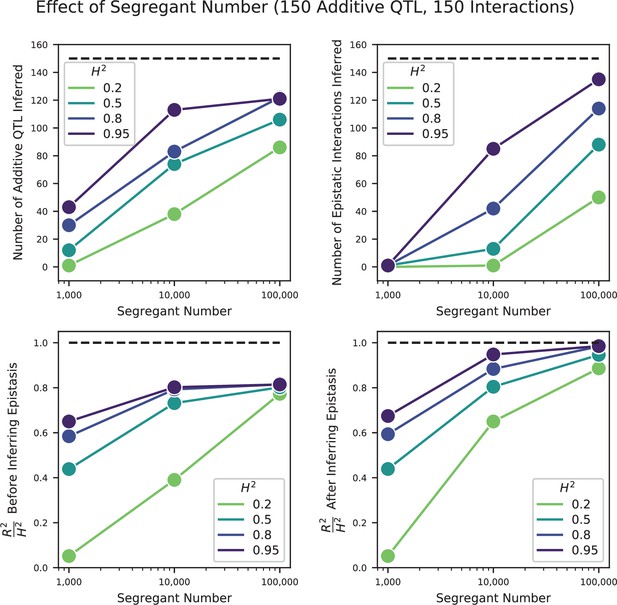

Epistasis

To characterize the structure of this complex cellular network in more detail, numerous studies have used genetic perturbations to measure epistatic interactions between genes, which in turn shed light on functional interactions (Tong et al., 2001; Horlbeck et al., 2018; Butland et al., 2008; Dixon et al., 2008; Costanzo et al., 2016; Costanzo et al., 2010). However, the role of epistasis in GWAS and QTL mapping studies remains controversial; these studies largely focus on variance partitioning to measure the strength of epistasis, as they are underpowered to infer specific interaction terms (Huang et al., 2016). We sought to leverage the large sample size and high allele frequencies of our study to infer epistatic interactions, by extending our inference method to include potential pairwise interactions among the loci previously identified as having an additive effect (see Appendix 3). Our approach builds on the modified stepwise cross-validated search described above: after obtaining the additive model, we perform a similar iterative forward search on pairwise interactions, re-optimizing both additive and pairwise effect sizes at each step and applying a second L0-norm sparsity penalty, similarly chosen by cross-validation, to terminate the model search. We note that restricting our analysis of epistasis to loci identified as having an additive effect does not represent a major limitation. This is because a pair of loci that have a pairwise interaction but no additive effects will tend to be (incorrectly) assigned additive effects in our additive-only model, since the epistatic interaction will typically lead to background-averaged associations between each locus and the phenotype. These spurious additive effects will then tend to be reduced upon addition of the pairwise interaction term.
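The forward search over pairwise terms described above can be sketched as follows. This is a simplified stand-in, not the authors' implementation: genotypes are assumed to be coded -1/+1, all coefficients are refit by ordinary least squares at each step, and a single train/validation split with a "stop when held-out error stops improving" rule replaces the cross-validated L0 penalty used in the study.

```python
import itertools
import numpy as np


def _mse(X_fit, y_fit, X_eval, y_eval):
    """Least-squares fit on one split, mean squared error on another."""
    beta, *_ = np.linalg.lstsq(X_fit, y_fit, rcond=None)
    return float(np.mean((y_eval - X_eval @ beta) ** 2))


def forward_pairwise_search(G, y, qtl, train_frac=0.8, max_terms=50, seed=0):
    """Greedy forward search over pairwise interactions among additive QTL.

    G: (individuals x loci) genotypes coded -1/+1; qtl: indices of loci with
    additive effects. At each step every coefficient (additive and pairwise)
    is refit, the candidate pair that most reduces training error is proposed,
    and the search stops once held-out error no longer improves.
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(y))
    cut = int(train_frac * len(y))
    tr, va = order[:cut], order[cut:]
    X = np.column_stack([np.ones(len(y)), G[:, qtl]])
    best_val = _mse(X[tr], y[tr], X[va], y[va])
    candidates = list(itertools.combinations(qtl, 2))
    selected = []
    while candidates and len(selected) < max_terms:
        train_err = []
        for i, j in candidates:
            Xc = np.column_stack([X, G[:, i] * G[:, j]])
            train_err.append(_mse(Xc[tr], y[tr], Xc[tr], y[tr]))
        k = int(np.argmin(train_err))
        i, j = candidates[k]
        X_new = np.column_stack([X, G[:, i] * G[:, j]])
        val = _mse(X_new[tr], y[tr], X_new[va], y[va])
        if val >= best_val:  # stopping rule standing in for the CV-tuned L0 penalty
            break
        best_val, X = val, X_new
        selected.append(candidates.pop(k))
    return selected
```

Because all additive coefficients are refit alongside each new interaction, any additive effect that was only absorbing a pairwise interaction can shrink once that interaction enters the model.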

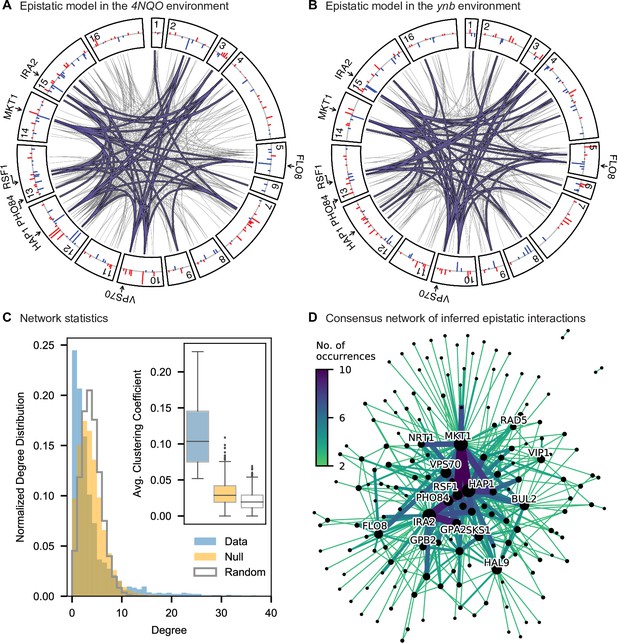

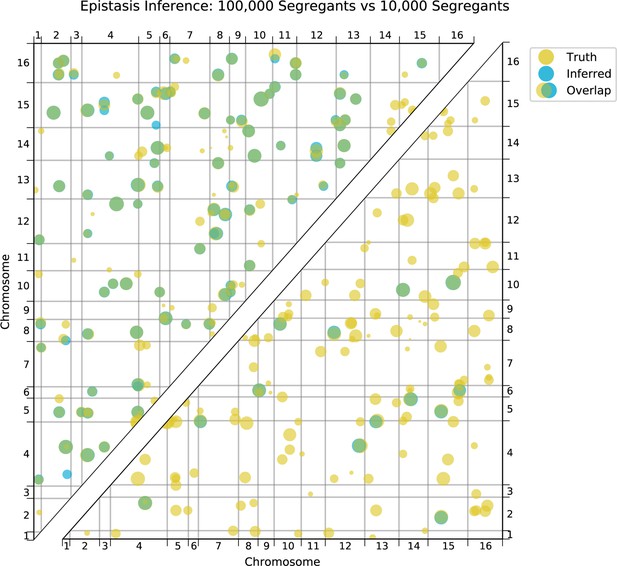

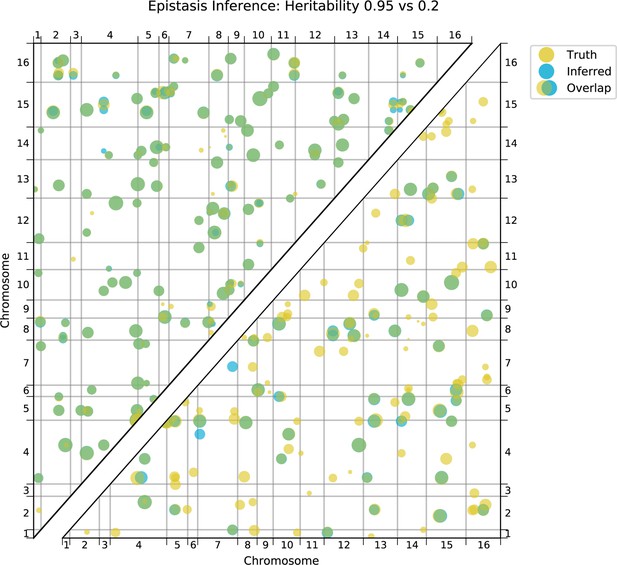

Using this approach, we detect widespread epistasis: hundreds of pairwise interactions for each phenotype (Figure 4A and B, Table 1; detected interactions are broadly consistent with the results of a pairwise regression approach, as shown in Figure 4—figure supplement 4), corresponding to an average of 1.7 epistatic interactions per QTL, substantially more than have been detected in previous mapping studies (Bloom et al., 2015). Most of these epistatic effects are modest, shifting predicted double-mutant fitness values only slightly relative to additive predictions, although a small number strongly exaggerate or completely suppress additive effects (Figure 4—figure supplement 5A). Overall, a slight majority of epistatic interactions are compensatory (Figure 4—figure supplement 5B), and nearly 55% of epistatic interactions have a positive sign (i.e. RM/RM and BY/BY genotypes are more fit than the additive expectation; Figure 4—figure supplement 5C). Finally, our procedure detects interactions for a higher fraction of intra-chromosomal than of inter-chromosomal QTL pairs (2.9% vs 2.3% across all environments).
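To make the sign convention above concrete, the sketch below tabulates additive and additive-plus-pairwise predictions for the four two-locus genotypes. It assumes a symmetric coding with x = +1 for the RM allele and x = -1 for the BY allele (an illustrative assumption; the study's exact coding is described in Appendix 3), and the effect sizes are hypothetical. Under this coding the pairwise term shifts the two parental combinations (RM/RM and BY/BY) by +eps and the two mixed combinations by -eps, so a positive eps means parental-type combinations are fitter than the additive expectation.

```python
from itertools import product

CODE = {"RM": 1, "BY": -1}  # assumed genotype coding, for illustration only


def two_locus_predictions(beta_i: float, beta_j: float, eps: float) -> dict:
    """Additive and additive-plus-pairwise predictions (relative to the panel
    mean) for each two-locus genotype under
    y = beta_i * x_i + beta_j * x_j + eps * x_i * x_j."""
    table = {}
    for g_i, g_j in product(CODE, repeat=2):
        x_i, x_j = CODE[g_i], CODE[g_j]
        additive = beta_i * x_i + beta_j * x_j
        table[(g_i, g_j)] = (additive, additive + eps * x_i * x_j)
    return table


# Hypothetical effect sizes.
for genotype, (add, full) in two_locus_predictions(0.01, 0.02, 0.005).items():
    print(genotype, f"deviation from additive: {full - add:+.3f}")
```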

Pairwise epistasis.

(A, B) Inferred pairwise epistatic interactions between QTL (with additive effects shown in the outer ring) for (A) the 4NQO environment and (B) the ynb environment. Interactions that are also observed for at least one other trait are highlighted in purple. See Figure 4—figure supplements 1–3 for other environments, Figure 4—figure supplement 4 for a simpler pairwise regression method, and Figure 4—figure supplement 5 for a breakdown of epistatic effects and comparison to additive effects. (C) Network statistics across environments (Figure 4—source data 1). The pooled degree distribution for the eighteen phenotype networks is compared with 50 network realizations generated by an Erdős–Rényi random model (white) or an effect-size-correlation-preserving null model (orange; see Appendix 3). Inset: average clustering coefficient for the eighteen phenotypes, compared to 50 realizations of the null and random models. (D) Consensus network of inferred epistatic interactions. Nodes represent genes (with size scaled by degree) and edges represent interactions that were detected in more than one environment (with color and weight scaled by the number of occurrences). Notable genes are labeled. See Figure 4—figure supplement 6 for the same consensus network restricted to either highly correlated or uncorrelated traits.


Figure 4—source data 1

Network statistics of observed and simulated epistatic networks.

- https://cdn.elifesciences.org/articles/73983/elife-73983-fig4-data1-v2.txt

To interpret these epistatic interactions in the context of cellular networks, we can represent our model as a graph for each phenotype, where nodes represent genes with QTL lead SNPs and edges represent epistatic interactions between those QTL (this perspective is distinct from and complementary to Costanzo et al., 2010, where nodes represent gene deletions and edges represent similar patterns of interaction). Notably, in contrast to a random graph, the epistatic graphs across phenotypes show heavy-tailed degree distributions, high clustering coefficients, and short average path lengths (∼three steps between any pair of genes; Figure 4C); these features are characteristic of the small-world networks posited by the ‘omnigenic’ view of genetic architecture (Boyle et al., 2017). These results hold even when accounting for ascertainment bias (i.e. loci with large additive effects have more detected epistatic interactions; see Appendix 3).
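The graph statistics mentioned above can be computed with a few lines of standard-library Python. The sketch below computes the average local clustering coefficient and the mean shortest-path length (by breadth-first search, over connected pairs only) for an observed interaction graph and compares them with Erdős–Rényi G(n, m) graphs that have the same numbers of nodes and edges. It does not reproduce the effect-size-correlation-preserving null model used in the study; the library networkx offers equivalent functions (`average_clustering`, `average_shortest_path_length`, `gnm_random_graph`) if preferred.

```python
import itertools
import random
from collections import deque


def adjacency(nodes, edges):
    """Undirected adjacency sets from a node list and (u, v) edge list."""
    adj = {v: set() for v in nodes}
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    return adj


def average_clustering(adj):
    """Mean local clustering coefficient; nodes of degree < 2 contribute 0."""
    total = 0.0
    for v, nbrs in adj.items():
        k = len(nbrs)
        if k < 2:
            continue
        links = sum(1 for a, b in itertools.combinations(nbrs, 2) if b in adj[a])
        total += 2.0 * links / (k * (k - 1))
    return total / len(adj)


def mean_shortest_path(adj):
    """Mean BFS distance over ordered pairs of distinct, connected nodes."""
    dist_sum, n_pairs = 0, 0
    for src in adj:
        dist = {src: 0}
        queue = deque([src])
        while queue:
            u = queue.popleft()
            for w in adj[u]:
                if w not in dist:
                    dist[w] = dist[u] + 1
                    queue.append(w)
        dist_sum += sum(dist.values())
        n_pairs += len(dist) - 1
    return dist_sum / n_pairs if n_pairs else float("inf")


def erdos_renyi_edges(nodes, n_edges, rng):
    """G(n, m) random graph: n_edges distinct pairs drawn uniformly."""
    return rng.sample(list(itertools.combinations(nodes, 2)), n_edges)


def compare_to_random(nodes, edges, n_random=50, seed=0):
    """Observed (clustering, path length) and their means over random graphs."""
    rng = random.Random(seed)
    obs = adjacency(nodes, edges)
    rand = [adjacency(nodes, erdos_renyi_edges(nodes, len(edges), rng))
            for _ in range(n_random)]
    return ((average_clustering(obs), mean_shortest_path(obs)),
            (sum(map(average_clustering, rand)) / n_random,
             sum(map(mean_shortest_path, rand)) / n_random))
```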

We also find that hundreds of epistatic interactions are detected repeatedly across environments (Figure 4D and Figure 4—figure supplement 6). Overall, epistatic interactions are more likely to be detected in multiple environments than expected by chance, even when considering only uncorrelated environments (simulation-based test; see Appendix 3), as expected if these interactions accurately reflect the underlying regulatory network. Considering interactions found in all environments, we see a small but significant overlap of detected interactions with those reported by previous genome-wide deletion screens (Costanzo et al., 2016; see Appendix 3). Taken together, these results suggest that inference of epistatic interactions in a sufficiently high-powered QTL mapping study provides a consistent and complementary method to reveal both global properties and specific features of underlying functional networks.
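A simulation-based test for excess recurrence of interactions across environments can be sketched as follows. Under this deliberately simple null, each environment keeps its own set of QTL genes and its number of interactions, but its edges are redrawn uniformly at random. Unlike the null described in Appendix 3, this sketch does not account for ascertainment bias (loci with large additive effects having more detected interactions), so it is only an illustration of the general approach.

```python
import itertools
import random
from collections import Counter


def n_recurrent(edge_lists):
    """Number of distinct undirected edges present in at least two networks."""
    counts = Counter()
    for edges in edge_lists:
        counts.update({frozenset(e) for e in edges})
    return sum(1 for c in counts.values() if c >= 2)


def recurrence_test(networks, n_sims=1000, seed=0):
    """Simulation-based test for excess recurrence of edges across networks.

    networks: list of (nodes, edges), one per environment. Under this simple
    null, each network keeps its own node set and edge count, but its edges
    are redrawn uniformly at random among its nodes.
    Returns the observed count and a one-sided empirical p-value.
    """
    rng = random.Random(seed)
    observed = n_recurrent([edges for _, edges in networks])
    pools = [(list(itertools.combinations(sorted(nodes), 2)),
              len({frozenset(e) for e in edges})) for nodes, edges in networks]
    hits = sum(
        n_recurrent([rng.sample(pairs, k) for pairs, k in pools]) >= observed
        for _ in range(n_sims))
    return observed, (hits + 1) / (n_sims + 1)
```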

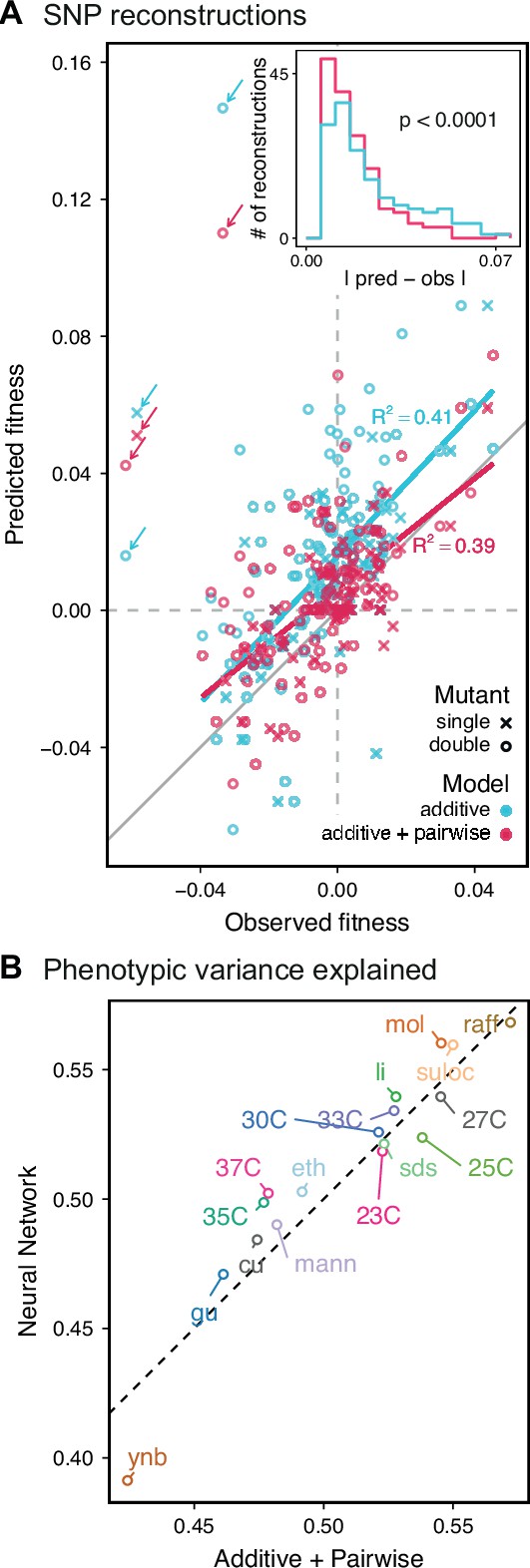

Validating QTL inferences with reconstructions

We next sought to experimentally validate the specific inferred QTL and their effect sizes from our additive and additive-plus-pairwise models. To do so, we reconstructed six individual RM SNPs and nine pairs of RM SNPs on the BY background and measured their competitive fitness in 11 of the original 18 conditions in individual competition assays (note that, for technical reasons, these measurement conditions are not precisely identical to those used in the original bulk fitness assays; see Materials and methods). These mutations were chosen because they represent a mixture of known and unknown causal SNPs in known QTL, were compatible with the technical constraints of our reconstruction methods, and had a predicted effect size larger than the detection limit of our low-throughput competitive fitness assays (approximately 0.5%) in at least one environment. We find that the QTL effects inferred with the additive-only models are correlated with the phenotypes of these reconstructed genotypes, although the predicted effects are systematically larger than the measured phenotypes (Figure 5A, cyan). To some extent, these errors may arise from differences in measurement conditions, from undetected smaller-effect linked loci that bias inferred additive effect sizes, and from the confidence intervals around the lead SNP, which introduce uncertainty about the identity of the precise causal locus, among other factors. However, this limited predictive power is somewhat unsurprising even if our inferred lead SNPs are correct, because the effect sizes inferred from the additive-only model measure the effect of a given locus averaged across the F1 genetic backgrounds in our segregant panel. Thus, if there is significant epistasis, we expect the effect of these loci in the specific strain background chosen for the reconstruction (here, the BY parent) to differ from the background-averaged effect inferred by BB-QTL.
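The argument above can be illustrated with a toy additive-plus-pairwise model. Assuming genotypes are coded x = -1 (BY) and x = +1 (RM), switching locus i from BY to RM changes the phenotype by 2(beta_i + sum_j eps_ij x_j), which depends on the alleles at its interaction partners. In the F1 panel, where partner alleles average close to zero, this reduces to roughly 2 beta_i; in the BY parent, every partner is at -1. The coding and all effect sizes below are hypothetical.

```python
def allele_effect(i, background, beta, eps):
    """Phenotypic change from switching locus i from BY (-1) to RM (+1) in a
    given background, under y = sum_k beta[k]*x_k + sum_{k<l} eps[(k, l)]*x_k*x_l.

    background: dict locus -> -1 (BY) or +1 (RM) for every other locus.
    """
    slope = beta[i]
    for (k, l), e in eps.items():
        if k == i:
            slope += e * background[l]
        elif l == i:
            slope += e * background[k]
    return 2.0 * slope  # the two alleles differ by 2 in the +/-1 coding


beta = {0: 0.01, 1: 0.0}   # hypothetical additive effects
eps = {(0, 1): 0.004}      # hypothetical pairwise effect
by_background = {0: -1, 1: -1}
print(allele_effect(0, by_background, beta, eps))  # effect in the BY parent
print(2.0 * beta[0])  # background-averaged effect if partner loci average 0
```

With these made-up values the effect in the BY background is smaller than the background-averaged effect; with a negative pairwise term it would be larger instead.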

Evaluating model performance.

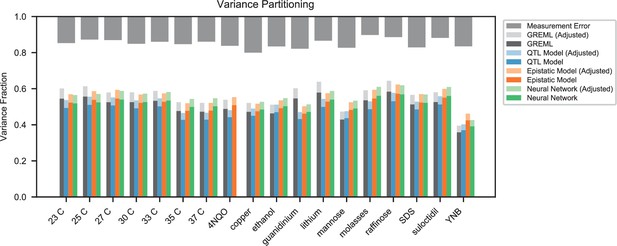

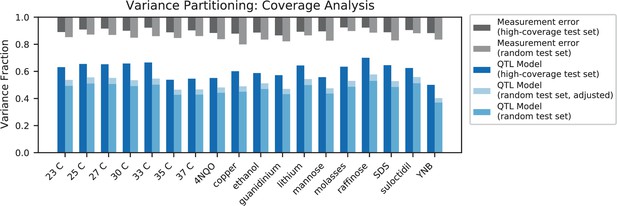

(A) Comparison between the measured fitness of reconstructions of six single mutants (crosses) and nine double mutants (circles) in 11 environments and their fitness in those environments as predicted by our inferred additive-only (cyan) or additive-plus-pairwise-epistasis (magenta) models. The one-to-one line is shown in gray. The values shown correspond to the fitted linear regressions (colored lines) for each type of model, excluding MKT1 mutants measured in the gu environment (outliers indicated by arrows). Inset shows the histogram of the absolute difference between observed and predicted reconstruction fitness under our two models, with the p-value from the permutation test of the difference between these distributions indicated. See Figure 5—figure supplements 1 and 2 for a full breakdown of the data, and Figure 5—source data 1 for measured and predicted fitness values. (B) Comparison between the phenotypic variance explained by the additive-plus-pairwise-epistasis model and that explained by a trained dense neural network of optimized architecture.


Figure 5—source data 1

SNP reconstructions’ fitness measurements and predictions.

- https://cdn.elifesciences.org/articles/73983/elife-73983-fig5-data1-v2.txt

In agreement with this interpretation, we find that the predictions from our additive-plus-pairwise inference agree better with the measured values in our reconstructed mutants (Figure 5A, magenta). Specifically, the correlation between predicted and measured phenotypes is similar to that of the additive-only model, but the systematic overestimates of effect sizes are significantly reduced (Figure 5A, inset; permutation test; see Materials and methods). This suggests a substantial effect of nonlinear terms, although the predictive power of our additive-plus-pairwise model remains modest. As above, this limited predictive power could be a consequence of undetected linked loci or errors in the identification of interacting loci. However, it may also indicate the presence of epistasis of higher order than the pairwise terms we infer. To explore these potential effects of higher-order interactions, we trained a dense neural network to jointly predict 17 of our 18 phenotypes from genotype data (see Appendix 3). The network architecture involves three densely connected layers, allowing it to capture a broad class of nonlinear mappings. Indeed, we find that this neural network explains slightly more phenotypic variance (on average 1% more than the additive-plus-pairwise QTL model; Figure 5B; see Appendix 4), although specific interactions and causal SNPs are harder to interpret in this case. Together, these results suggest that although our ability to pinpoint precise causal loci and their effect sizes is likely limited by a variety of factors, the models with epistasis more closely approach the true genetic architecture despite explaining only marginally more variance than the additive model (Appendix 4—figure 1), as suggested by previous studies (Forsberg et al., 2017).
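A two-sample permutation test of the kind described above can be sketched as follows: pool the absolute prediction errors from both models, repeatedly shuffle the model labels, and count how often the shuffled difference in mean error is at least as large as the observed one. Using the difference in means as the test statistic and a one-sided alternative are assumptions made for illustration; since each reconstruction is predicted by both models, a paired sign-flip permutation would be an equally reasonable choice.

```python
import random
from statistics import mean


def permutation_test_mean_diff(errors_a, errors_b, n_perm=10_000, seed=0):
    """One-sided test that errors_a has a larger mean than errors_b, by
    shuffling the model labels of the pooled absolute errors.

    Returns the observed difference in means and an empirical p-value.
    """
    rng = random.Random(seed)
    observed = mean(errors_a) - mean(errors_b)
    pooled = list(errors_a) + list(errors_b)
    n_a = len(errors_a)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        if mean(pooled[:n_a]) - mean(pooled[n_a:]) >= observed:
            hits += 1
    return observed, (hits + 1) / (n_perm + 1)
```

Here `errors_a` would hold the absolute errors of the additive-only predictions and `errors_b` those of the additive-plus-pairwise predictions for the same reconstructions.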

Discussion

The BB-QTL mapping approach we have introduced in this study increases the scale at which QTL mapping can be performed in budding yeast, primarily by taking advantage of automated liquid handling techniques and barcoded phenotyping. While the initial construction of our segregant panel involved substantial brute force, this has now generated a resource that can be easily shared (particularly in pooled form) and used for similar studies aiming to investigate a variety of related questions in quantitative genetics. In addition, the approaches we have developed here provide a template for the systematic construction of additional mapping panels in future work, which would offer the opportunity to survey the properties of a broader range of natural variation. While our methods are largely specific to budding yeast, conceptually similar high-throughput automated handling systems and barcoding methods may also offer promise in studying quantitative genetics in other model organisms, though substantial effort would be required to develop appropriate techniques in each specific system.

Here, we have used our large segregant panel to investigate the genetic basis of 18 phenotypes, defined as competitive fitness in a variety of different liquid media. The increased power of our study reveals that these traits are all highly polygenic: using a conservative cross-validation method, we find more than 100 QTL at high precision and low false-positive rate for almost every environment in a single F1 cross. Our detected QTL include many of the key genes identified in earlier studies, along with many novel loci. These QTL overall are consistent with statistical features observed in previous studies. For example, we find an enrichment in nonsynonymous variants among inferred causal loci in regulatory genes, and a tendency for rare variants (as defined by their frequency in the 1,011 Yeast Genomes collection; Peter et al., 2018) to have larger effect sizes.

While the QTL we detect do explain most of the narrow-sense heritability across all traits (Appendix 4—figure 1), this does not represent a substantial increase relative to the heritability explained by earlier, smaller studies with far fewer QTL detected (Bloom et al., 2013; Bloom et al., 2015; Bloom et al., 2019). Instead, the increased power of our approach allows us to dissect multiple causal loci within broad regions previously detected as a single ‘composite’ QTL (Figure 2A, zoom-ins), and to detect numerous novel small-effect QTL. Thus, our results suggest that, despite their success in explaining observed phenotypic heritability, these earlier QTL mapping studies in budding yeast fail to accurately resolve the highly polygenic nature of these phenotypes. This, in turn, implies that the apparent discrepancy in the extent of polygenicity inferred by GWAS compared to QTL mapping studies in model organisms arises at least in part as an artifact of the limited sample sizes and power of the latter.

Our finding that increasing power reveals increasingly polygenic architectures of complex traits is broadly consistent with several other recent studies that have improved the scale and power of QTL mapping in yeast in different ways. For example, advanced crosses have helped to resolve composite QTL into multiple causal loci (She and Jarosz, 2018), and multiparental or round-robin crosses have identified numerous additional causal loci by more broadly surveying natural variation in yeast (Bloom et al., 2019; Cubillos et al., 2013). In addition, recent work has used very large panels of diploid genotypes to infer highly polygenic trait architectures, though that study used a much more permissive approach to identifying QTL that may lead to a substantial false positive rate (Jakobson and Jarosz, 2019). Here, we have shown that simply by increasing sample size we can both resolve composite QTL into multiple causal loci and identify numerous additional small-effect loci that previous studies have been underpowered to detect. The distribution of QTL effect sizes we infer is consistent with an exponential distribution above our limit of detection, suggesting that there may be many more causal loci of even smaller effect that could be revealed by further increases in sample size.
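To make the extrapolation argument concrete, the sketch below fits an exponential distribution to absolute effect sizes above a detection threshold and estimates how many loci would lie below it. By the memoryless property, excesses over the threshold of an exponential with scale s are themselves exponential with scale s, so the maximum-likelihood estimate of s is their mean. The exponential form is an assumption (the text only states that the data are consistent with it), and because the exponential density is highest near zero, the extrapolated count is extremely sensitive to both the threshold and the fitted scale; it should be read as an order-of-magnitude illustration only.

```python
import math


def exponential_tail_extrapolation(effects, threshold):
    """Fit an exponential to |effect sizes| at or above a detection threshold
    and extrapolate how many loci would lie below it.

    Returns (fitted scale, extrapolated number of undetected loci).
    """
    above = [abs(x) for x in effects if abs(x) >= threshold]
    if not above:
        raise ValueError("no effects at or above the threshold")
    # MLE of the exponential scale from excesses over the threshold.
    scale = sum(x - threshold for x in above) / len(above)
    if scale <= 0:
        raise ValueError("cannot fit a scale from effects all at the threshold")
    frac_above = math.exp(-threshold / scale)  # P(X >= threshold) under the fit
    n_total = len(above) / frac_above
    return scale, n_total - len(above)
```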

By applying BB-QTL mapping to eighteen different fitness traits, we explored how the effects of individual loci shift across small and large environmental perturbations. Quantifying the structure of these pleiotropic effects is technically challenging, particularly for the many QTL of modest effect that are not resolved to a single SNP or gene. In these cases, it is difficult to determine whether a particular region contains a single truly pleiotropic locus or multiple linked variants that each influence a different trait. While we have used one particular approach to this problem, other choices are also possible, and future work that jointly infers QTL using data from all phenotypes simultaneously could provide a more rigorous method for identifying pleiotropic loci. However, we do find that the structure of the pleiotropy in our inferred models largely recapitulates the observed correlations between phenotypes, suggesting that the causal loci we identify are largely sufficient to explain these patterns. Many of the same genes are implicated across many traits, often with similarly strong effect sizes in distinct environments, and, as we might expect, these highly pleiotropic QTL are enriched for regulatory function. However, dividing QTL into modules that affect subsets of environments, predicting how their effect sizes change across environments (even along our temperature gradient), and identifying core or peripheral genes (as in Boyle et al., 2017) remain difficult. Future work to assay a larger number and wider range of phenotypes could provide more detailed insight into the structure of relationships between traits and how they arise from shared genetic architectures.

We also leveraged the statistical power of our approach to explore the role of epistatic interactions between QTL. Previous studies have addressed this question through the lens of variance partitioning, concluding that epistasis contributes less than additive effects to predicting phenotype (Bloom et al., 2015). However, it is well known that variance partitioning alone cannot determine the relative importance of additive, epistatic, or dominance factors in gene action or genetic architectures (Huang et al., 2016). Here, we instead explore the role of epistasis by inferring specific pairwise interaction terms and analyzing their statistical and functional patterns. We find that epistasis is widespread, with nearly twice as many interaction terms as additive QTL. The resulting interaction graphs show characteristic features of biological networks, including heavy-tailed degree distributions and short average path lengths, and we see a significant overlap with interaction network maps from previous studies despite the different sources of variation (naturally occurring SNPs versus whole-gene deletions). Notably, the set of genes with the most numerous interactions overlaps with the set of highly pleiotropic genes, which are themselves enriched for regulatory function. Together, these findings indicate that we are capturing features of underlying regulatory and functional networks, although we are far from revealing the complete picture. In particular, we expect that we fail to detect many interactions with effect sizes below our detection limit, that the interactions we observe are limited by our choice of phenotypes, and that higher-order interactions may also be widespread.

To validate our QTL inference, we reconstructed a small set of single and double mutations by introducing RM alleles into the BY parental background. We find that our ability to predict the effects of these putatively causal loci remains somewhat limited: the inferred effect sizes in our additive-plus-pairwise-epistasis models have relatively modest power to predict the fitness effects of reconstructed mutations and pairs of mutations. Thus, despite the unprecedented scale and power of our study, we still cannot claim to precisely infer the true genetic architecture of complex traits. This failure presumably arises in part from limitations to our inference, which could lead to inaccuracies in effect sizes or in the precise locations of causal loci. In addition, the presence of higher-order epistatic interactions (or interactions with the mitochondria) would imply that we cannot expect to accurately predict phenotypes for genotypes far outside of our F1 segregant panel, such as single- and double-SNP reconstructions on the BY genetic background. While both of these sources of error could in principle be reduced by further increases in sample size and power, substantial improvements are unlikely to be realized in the near future.

However, despite these limitations, our BB-QTL mapping approach helps bridge the gap between well-controlled laboratory studies and high-powered, large-scale GWAS, revealing that complex trait architecture in model organisms is indeed influenced by large numbers of previously unobserved small-effect variants. We examined in detail how this architecture shifts across a spectrum of related traits, observing that while pleiotropy is common, changes in effects are largely unpredictable, even for similar traits. Further, we characterized specific epistatic interactions across traits, revealing not only their substantial contribution to phenotype but also the underlying network structure, in which a subset of genes occupies central roles. Future work in this and related systems is needed to illuminate the landscape of pleiotropy and epistasis more broadly, which will be crucial not merely for phenotype prediction but for our fundamental understanding of cellular organization and function.

Materials and methods

| Reagent type (species) or resource | Designation | Source or reference | Identifiers | Additional information |
|---|---|---|---|---|
| Strain, strain background (S. cerevisiae) | BY4741 | Baker Brachmann et al., 1998 | – | BY parent background |
| Strain, strain background (S. cerevisiae) | RM11-1a | Brem et al., 2002 | – | RM parent background |
| Strain, strain background (S. cerevisiae) | YAN696 | This paper | – | BY parent |
| Strain, strain background (S. cerevisiae) | YAN695 | This paper | – | RM parent |
| Strain, strain background (S. cerevisiae) | BB-QTL F1 strain library | This paper | – | 100,000 strains of barcoded BYxRM F1 produced, genotyped and characterized in this paper |
| Strain, strain background (E. coli) | BL21(DE3) | NEB | NEB:C2527I | Zymolyase and barcoded Tn5 expression system |
| Recombinant DNA reagent | pAN216a pGAL1-HO pSTE2-HIS3 pSTE3 LEU2 (plasmid) | This paper | – | For MAT-type switching |
| Recombinant DNA reagent | pAN3H5a 1/2URA3 KanP1 ccdB LoxPR (plasmid) | This paper | – | Type one barcoding plasmid, without barcode library |
| Recombinant DNA reagent | pAN3H5a LoxPL ccdB HygP1 2/2URA3 (plasmid) | This paper | – | Type two barcoding plasmid, without barcode library |
| Sequence-based reagent | Custom Tn5 adapter oligos | This paper | Tn5-L and -R | See Materials and methods |
| Sequence-based reagent | Custom sequencing adapter primers | This paper | P5mod and P7mod | See Materials and methods |
| Sequence-based reagent | Custom barcode amplification primers | This paper | P1 and P2 | See Materials and methods |
| Sequence-based reagent | Custom sequencing primers for Illumina | This paper | Custom_read_1 through 4 | See Materials and methods |
| Software, algorithm | Custom code for genotype inference | This paper | – | See code repository |
| Software, algorithm | Custom code for phenotype inference | This paper | – | See code repository |
| Software, algorithm | Custom code for compressed sensing | This paper | – | See code repository |
| Software, algorithm | Custom code for QTL inference | This paper | – | See code repository |

Design and construction of the barcoded cross

A key design choice in any QTL mapping study is what genetic variation will be included (i.e. the choice of parental strains) and how that variation will be arranged (i.e. the cross design). In choosing strains, wild isolates may display more genotypic and phenotypic diversity than lab-adapted strains, but may also have poor efficiency during the mating, sporulation, and transformation steps required for barcoding. In designing the cross, one must balance several competing constraints, prioritizing different factors according to the goals of the particular study. Increasing the number of parental strains increases genetic diversity, but can also increase genotyping costs (see Appendix 1); increasing the number of crosses or segregants improves fine-mapping resolution by increasing the number of recombination events, but also increases strain production time and costs. For a study primarily interested in precise fine-mapping of strong effects, a modest number of segregants from a deep cross may be most appropriate (She and Jarosz, 2018); for a study interested in a broad view of diversity across natural isolates, a modest number of segregants produced from a shallow multi-parent cross may be preferred (Bloom et al., 2019). Here, however, we are primarily interested in achieving maximum statistical power to map large numbers of small-effect loci; for this, we chose to map an extremely large number of segregants from a simple, shallow cross. We begin in this section by describing our choice of strains, cross design, and barcoding strategy.

Strains

The two parental strains used in this study, YAN696 and YAN695, are derived from the BY4741 (Baker Brachmann et al., 1998) (S288C: MATa, his3Δ1, ura3Δ0, leu2Δ0, met17Δ0) and RM11-1a (haploid derived from Robert Mortimer’s Bb32(3): MATa, ura3Δ0, leu2Δ0, ho::KanMX; Brem et al., 2002) backgrounds, respectively, with several modifications required for our barcoding strategy. We chose to work in these backgrounds (hereafter denoted BY and RM) because of their history in QTL mapping studies in yeast (Ehrenreich et al., 2010; Bloom et al., 2013; Brem et al., 2002), which have demonstrated rich phenotypic diversity in many environments.

The ancestral RM11-1a strain is known to undergo incomplete cell separation because of its AMN1(A1103) allele (Yvert et al., 2003). To avoid this, we introduced the A1103T allele by Delitto Perfetto (Storici et al., 2001), yielding YAN497. This strain also carries an HO deletion that is incompatible with HO-targeting plasmids (Voth et al., 2001). We replaced this deletion by introducing the S288C version of HO together with the NAT marker (conferring resistance to nourseothricin; Goldstein and McCusker, 1999), driven by the TEF promoter from Lachancea waltii and terminated with tSynth7 (Curran et al., 2015) (ho::LwpTEF-NAT-tSynth7), creating YAN503. In parallel, a MATα strain was created by switching the mating type of the ancestral RM11-1a using a centromeric plasmid carrying a galactose-inducible functional HO endonuclease (pAN216a_pGAL1-HO_pSTE2-HIS3_pSTE3_LEU2), creating YAN494. In this MATα strain, the HIS3 gene was knocked out by introducing the his3Δ1 allele of BY4741, generating YAN501. This strain was crossed with YAN503 to generate a diploid (YAN501xYAN503), which was sporulated to obtain a spore carrying the desired set of markers (YAN515: MATα, ura3Δ0, leu2Δ0, his3Δ1, AMN1(A1103T), ho::LwpTEF-NAT-tSynth7). A ‘landing pad’ for later barcode insertion was introduced into this strain by swapping the NAT marker with pGAL1-Cre-CaURA3MX, creating YAN616. This landing pad contains the Cre recombinase under the control of the galactose-inducible GAL1 promoter and the URA3 gene from Candida albicans driven by the TEF promoter from Ashbya gossypii. This landing pad can be targeted by HO-targeting plasmids containing barcodes (see Figure 1—figure supplement 1). To allow later selection for diploids, the G418 resistance marker was introduced between the Cre gene and the CaURA3 gene, yielding the final RM11-1a strain YAN695.

The BY4741 strain was first converted to methionine prototrophy by transforming the MET17 gene into the met17Δ0 locus, generating YAN599. The CAN1 gene was subsequently replaced with a MATa mating-type reporter construct (pSTE2-SpHIS5; Tong and Boone, 2006), which expresses the HIS5 gene from Schizosaccharomyces pombe (orthologous to S. cerevisiae HIS3) in MATa cells. A landing pad (CaURA3MX-NAT-pGAL1-Cre) was then introduced into this strain, yielding YAN696; this landing pad differs from the one in YAN695 in the position of pGAL1-Cre and in carrying a NAT marker rather than a G418 marker (see Figure 1—figure supplement 2).
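The construction history above can be hard to follow in prose. As a purely illustrative aid (not part of the original protocol), the sketch below records each named strain with its parent(s) and the modification described in the text, and walks back to the founding backgrounds. The dictionary layout and the `ancestors` helper are hypothetical; the unnamed intermediate between YAN599 and YAN696 is folded into a single step rather than given an invented name.

```python
# Illustrative record of the strain constructions described above (hypothetical
# data layout). Each derived strain maps to (parents, modification).
STRAINS = {
    "YAN497": (["RM11-1a"], "AMN1(A1103T) by Delitto Perfetto"),
    "YAN503": (["YAN497"], "ho::LwpTEF-NAT-tSynth7"),
    "YAN494": (["RM11-1a"], "mating type switched to MATalpha"),
    "YAN501": (["YAN494"], "his3Δ1 allele of BY4741"),
    "YAN515": (["YAN501", "YAN503"], "MATalpha spore of YAN501xYAN503"),
    "YAN616": (["YAN515"], "NAT swapped for pGAL1-Cre-CaURA3MX landing pad"),
    "YAN695": (["YAN616"], "G418 marker between Cre and CaURA3"),
    "YAN599": (["BY4741"], "MET17 restored"),
    # The unnamed intermediate (CAN1 replaced by pSTE2-SpHIS5) is folded in here.
    "YAN696": (["YAN599"], "can1::pSTE2-SpHIS5 + CaURA3MX-NAT-pGAL1-Cre"),
}


def ancestors(strain: str) -> list:
    """Return all ancestors of `strain` (depth-first, each listed once)."""
    seen = []
    stack = list(STRAINS.get(strain, ([], ""))[0])
    while stack:
        parent = stack.pop()
        if parent in seen:
            continue
        seen.append(parent)
        # Founding backgrounds (BY4741, RM11-1a) have no entry and no parents.
        stack.extend(STRAINS.get(parent, ([], ""))[0])
    return seen


print(ancestors("YAN696"))  # ['YAN599', 'BY4741']
```

Because YAN515 has two parents, the traversal reaches RM11-1a along both branches but lists it only once.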

Construction of barcoding plasmids

The central consideration in designing our barcoding strategy was ensuring sufficient diversity that no two of the 100,000 individual cells sorted from a pool share the same barcode. To ensure this, the total number of barcodes in the pool must be several orders of magnitude larger than 10⁵, which is infeasible to achieve by transformation alone, even with maximal transformation efficiency. Our solution relies on combinatorics: each spore receives two barcodes, one from each parent, so that mating two parental pools of ∼10⁴ unique barcodes each produces a pool of ∼10⁸ uniquely barcoded spores. This requires efficient barcoding of the parental strains, efficient recombination of the two barcodes onto the same chromosome in the mated diploids, and efficient selection of double-barcoded haploids after sporulation.

Our barcoding system makes use of two types of barcoding plasmids, which we refer to as Type 1 and Type 2. Both types are based on the HO-targeting plasmid pAN3H5a (Figure 1—figure supplement 1), which contains the LEU2 gene for selection as well as homologous sequences that lead to integration of insert sequences with high efficiency. The Type 1 barcoding plasmid has the configuration pAN3H5a–1/2URA3–KanP1–ccdB–LoxPR, while Type 2 has the configuration pAN3H5a–LoxPL–ccdB–HygP1–2/2URA3. Here the selection marker URA3 is split across the two different plasmids: 1/2URA3 contains the first half of the URA3 coding sequence and an intron 5’ splice donor, while 2/2URA3 contains the 3’ splice acceptor site and the second half of the URA3 coding sequence. Thus we create an artificial intron within URA3, using the 5’ splice donor from RPS17a and the 3’ splice acceptor from RPS6a (Plass, 2020), that will eventually contain both barcodes. KanP1 and HygP1 represent primer sequences. The ccdB gene provides counter-selection for plasmids lacking barcodes (see below). Finally, LoxPR and LoxPL are sites in the Cre-Lox recombination system (Albert et al., 1995) used for recombining the two barcodes in the mated diploids. These sites contain arm mutations on the right and left side, respectively, that render them partially functional before recombination; after recombination, the site located between the barcodes is fully functional, while the one on the opposite chromosome has slightly reduced recombination efficiency (Nguyen Ba et al., 2019).
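To make the split-URA3 logic concrete, here is a toy sketch, assuming each integrated cassette can be reduced to an ordered list of named elements and that Cre-mediated crossover at the lox spacer exchanges everything downstream of the site between homologs. Element names follow the plasmid configurations above, with ccdB replaced by a barcode; `cre_crossover` and `has_intact_ura3` are hypothetical helpers, and surrounding landing-pad and chromosomal sequence is ignored.

```python
# Toy model: integrated cassettes as ordered element lists (ccdB already
# replaced by a barcode). Flanking landing-pad sequence is ignored.
TYPE1 = ["1/2URA3", "KanP1", "BC1", "LoxPR"]   # integrated in BY
TYPE2 = ["LoxPL", "BC2", "HygP1", "2/2URA3"]   # integrated in RM


def lox_index(chrom):
    """Position of the single lox site in a cassette."""
    return next(i for i, element in enumerate(chrom) if element.startswith("LoxP"))


def cre_crossover(chrom_a, chrom_b):
    """Reciprocal exchange at the lox sites of two homologous cassettes.

    The left arm of chrom_a's site joins the right arm of chrom_b's site.
    With LoxPR (right-arm mutant) on chrom_a and LoxPL (left-arm mutant) on
    chrom_b, the site between the barcodes has two wild-type arms, and the
    reciprocal site inherits both arm mutations.
    """
    i, j = lox_index(chrom_a), lox_index(chrom_b)
    joined = chrom_a[:i] + ["LoxP"] + chrom_b[j + 1:]
    reciprocal = chrom_b[:j] + ["LoxP(both arms mutant)"] + chrom_a[i + 1:]
    return joined, reciprocal


def has_intact_ura3(chrom):
    """URA3 (with its artificial intron) is whole only if both halves are
    present on one chromosome, 5' half first."""
    return ("1/2URA3" in chrom and "2/2URA3" in chrom
            and chrom.index("1/2URA3") < chrom.index("2/2URA3"))


joined, reciprocal = cre_crossover(TYPE1, TYPE2)
print(joined)  # ['1/2URA3', 'KanP1', 'BC1', 'LoxP', 'BC2', 'HygP1', '2/2URA3']
print(has_intact_ura3(joined), has_intact_ura3(reciprocal))  # True False
```

In this picture, only the joined product places both barcodes inside the URA3 intron, which is why selection for Ura+ cells also selects for cells carrying both barcodes on one chromosome.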

We next introduced a diverse barcode library into the barcoding plasmids using a Golden Gate reaction (Engler, 2009), exploiting the fact that the ccdB gene (with its internal BsaI site removed) is flanked by two BsaI restriction endonuclease sites. Barcodes were ordered as single-stranded oligos from Integrated DNA Technologies (IDT) with degenerate bases (ordered as ‘hand-mixed’) flanked by priming sequences: P_BC1 = CTAGTT ATTGCT CAGCGG AGGTCT CAtact NNNatN NNNNat NNNNNg cNNNcg ctAGAG ACCGTC ATAGCT GTTTCC TG, P_BC2 = CTAGTT ATTGCT CAGCGG AGGTCT CAtact NNNatN NNNNgc NNNNNa tNNNcg ctAGAG ACCGTC ATAGCT GTTTCC TG. These differ only in the fixed nucleotides interspersed among the 16 degenerate bases (represented by ‘N’), which comprise the barcodes; the barcodes are flanked by two BsaI sites that leave ‘tact’ and ‘cgct’ as overhangs. Conversion of the oligos to double-stranded DNA and preparation of the Escherichia coli library were performed as in Nguyen Ba et al., 2019. Briefly, Golden Gate reactions were carried out to insert P_BC1 and P_BC2 barcodes into Type 1 and Type 2 barcoding plasmids, respectively. Reactions were purified, electroporated into E. coli DH10β cells (Invitrogen), and recovered in about 1 L of molten LB (1% tryptone, 0.5% yeast extract) containing 0.3% SeaPrep agarose (Lonza) and 100 µg/mL ampicillin. The mixture was allowed to gel on ice for an hour and then placed at 37 °C overnight for recovery of the transformants. We routinely observed over 10⁶ transformants from this procedure, and the two barcoded plasmid libraries were recovered by standard maxiprep (Figure 1—figure supplement 2a).
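The oligo layout can be checked programmatically. The following sketch, which assumes only the standard BsaI cut positions (GGTCTC(N1)/(N5), leaving a 4-nt 5′ overhang), locates the two BsaI sites in each oligo given above, extracts the overhangs and the intervening insert, and summarizes the pattern of degenerate bases. The helper names are illustrative.

```python
import re

# Oligos exactly as listed in the text (spaces are only for readability).
P_BC1 = ("CTAGTT ATTGCT CAGCGG AGGTCT CAtact NNNatN NNNNat NNNNNg cNNNcg "
         "ctAGAG ACCGTC ATAGCT GTTTCC TG").replace(" ", "")
P_BC2 = ("CTAGTT ATTGCT CAGCGG AGGTCT CAtact NNNatN NNNNgc NNNNNa tNNNcg "
         "ctAGAG ACCGTC ATAGCT GTTTCC TG").replace(" ", "")


def bsai_fragment(oligo: str):
    """Return (5' overhang, insert, 3' overhang) left after BsaI digestion.

    BsaI binds GGTCTC and cuts 1 nt (top strand) and 5 nt (bottom strand)
    downstream, leaving a 4-nt 5' overhang. The second site is read on the
    top strand as its reverse complement, GAGACC, with the overhang occupying
    the 2nd to 5th nucleotides upstream of it.
    """
    s = oligo.upper()
    fwd, rev = s.find("GGTCTC"), s.rfind("GAGACC")
    if fwd < 0 or rev < 0 or rev < fwd:
        raise ValueError("expected a GGTCTC ... GAGACC pair of BsaI sites")
    start = fwd + len("GGTCTC") + 1   # first base of the left overhang
    end = rev - 1                     # one past the last base of the right overhang
    return s[start:start + 4], s[start + 4:end - 4], s[end - 4:end]


def degenerate_layout(insert: str) -> str:
    """Compress runs of N into their lengths, e.g. 'NNNAT' -> '3AT'."""
    return re.sub(r"N+", lambda m: str(len(m.group())), insert)


for name, oligo in (("P_BC1", P_BC1), ("P_BC2", P_BC2)):
    left, insert, right = bsai_fragment(oligo)
    print(name, left, degenerate_layout(insert), right, insert.count("N"))
# P_BC1 TACT 3AT5AT5GC3 CGCT 16
# P_BC2 TACT 3AT5GC5AT3 CGCT 16
```

Both oligos release the same TACT/CGCT overhangs and 16 degenerate positions; only the order of the fixed AT and GC spacers differs.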

Parental barcoding procedure

Distinct libraries of barcoded parental BY and RM strains were generated by transforming the parental strains with the barcoded plasmid libraries (Figure 1—figure supplement 2b): YAN696 (BY) with Type 1, and YAN695 (RM) with Type 2. Barcoded plasmids were digested with PmeI (New England Biolabs) prior to transformation, and transformants were selected on several SD –leu agar plates (6.71 g/L yeast nitrogen base, complete amino acid supplements lacking leucine, 2% dextrose). In total, 23 pools of ∼1,000 transformants each were obtained for BY, and 12 pools of ∼1,000 transformants each were obtained for RM. After scraping the plates, we grew these pools overnight in SD –leu + 5-FOA (6.71 g/L yeast nitrogen base, complete amino acid supplements lacking leucine, 2% dextrose, 1 g/L 5-fluoroorotic acid) to select for integration at the correct locus. Each of the BY and RM libraries was kept separate, and for each library we sequenced the barcode locus on an Illumina NextSeq to confirm the diversity and identity of the parental barcodes. This allows for 23 × 12 distinct diploid pools with approximately 1 million possible barcode combinations each, for a total of ∼3 × 10⁸ barcodes.
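The combinatorial arithmetic behind the double-barcode design is simple enough to write out. The sketch below uses the approximate pool sizes quoted above (treated as exact round numbers, an assumption) to count matings and barcode combinations; the function name is hypothetical.

```python
def barcode_combinations(n_bc1_pools: int, n_bc2_pools: int, per_pool: int):
    """Matings, (BC1, BC2) combinations per mating, and total combinations,
    assuming every pool holds `per_pool` distinct barcodes."""
    matings = n_bc1_pools * n_bc2_pools
    per_mating = per_pool * per_pool
    return matings, per_mating, matings * per_mating


matings, per_mating, total = barcode_combinations(23, 12, 1_000)
print(matings, per_mating, f"{total:.2e}")   # 276 1000000 2.76e+08

# The general principle: two pools of ~1e4 barcodes give ~1e8 combinations.
print(barcode_combinations(1, 1, 10_000)[2])  # 100000000
```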

Generation of 100,000 barcoded F1 segregants

Each of the 23 × 12 combinations of BY and RM barcoded libraries was processed separately, from mating through sorting of the resulting F1 haploids (Figure 1—figure supplement 2b). For each mating, the two parental libraries were first grown separately overnight in 5 mL YPD (1% Difco yeast extract, 2% Bacto peptone, 2% dextrose) at 30 °C. The overnight cultures were plated together on a single YPD agar plate and allowed to mate overnight at room temperature. The following day, the diploids were scraped and inoculated into 5 mL YPG (1% Difco yeast extract, 2% Bacto peptone, 2% galactose) containing 200 µg/mL G418 (GoldBio) and 10 µg/mL nourseothricin (GoldBio) at a density of approximately cells/mL and allowed to grow for 24 hr at 30 °C. The next day, the cells were again diluted 1:25 into the same media and allowed to grow for 24 hr at 30 °C. This results in ∼10 generations of growth in galactose-containing media, which induces Cre recombinase to recombine the barcoded landing pads, generating Ura+ cells (which therefore contain both barcodes on the same chromosome) at very high efficiency.

Recombinants were selected by 10 generations of growth (two cycles of 1:25 dilution and 24 hr of growth at 30 °C) in SD –ura (6.71 g/L Yeast Nitrogen Base with complete amino acid supplements lacking uracil, 2% dextrose). Cells were then diluted 1:25 into pre-sporulation media (1% Difco yeast extract, 2% Bacto peptone, 1% potassium acetate, 0.005% zinc acetate) and grown for 24 hr at room temperature. The next day, the whole culture was pelleted and resuspended into 5 mL sporulation media (1% potassium acetate, 0.005% zinc acetate) and grown for 72 hr at room temperature. Sporulation efficiency typically reached >75%.
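The ∼10 generations quoted above follow from the dilution scheme, assuming each 24 hr cycle regrows the culture to roughly the density it had before dilution, so that a 1:D dilution requires log2(D) doublings. A minimal sketch, with a hypothetical helper name:

```python
import math


def generations(dilution_factor: float, cycles: int) -> float:
    """Doublings needed to regrow after `cycles` serial dilutions of
    1:`dilution_factor`, assuming each cycle returns to the pre-dilution density."""
    return cycles * math.log2(dilution_factor)


print(round(generations(25, 2), 2))   # 9.29, i.e. roughly 10 generations
```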

Cells were germinated by pelleting approximately 100 µL of spores and digesting their asci with 50 µL of 5 mg/mL Zymolyase 20T (Zymo Research) containing 20 mM DTT for 15 min at 37 °C. The tetrads were subsequently disrupted by mild sonication (3 cycles of 30 s at 70% power), and the dispersed spores were recovered in 100 mL of molten SD –leu –ura –his + canavanine (6.71 g/L Yeast Nitrogen Base, complete amino acid supplements lacking uracil, leucine and histidine, 50 µg/mL canavanine, 2% dextrose) containing 0.3% SeaPrep agarose (Lonza) and spread into a thin layer (about 1 cm deep). The mixture was allowed to set on ice for an hour, after which it was kept for 48 hr at 30 °C to allow dispersed growth of colonies in 3D. This procedure routinely yielded ∼10⁶ colonies of uniquely double-barcoded MATa F1 haploid segregants for each pair of parental libraries.

Sorting F1 segregants into single wells

After growth, germinated cells in soft agar were mixed by thorough shaking. Fifty µL of this suspension was inoculated into 5 mL SD –leu –ura –his + canavanine and allowed to grow for 2–4 hr at 30 °C. The cells were then stained with DiOC6(3) (Sigma) at a final concentration of 0.1 µM and incubated for 1 hr at room temperature. DiOC6(3) is a green-fluorescent lipophilic dye that stains functional mitochondria (Petit et al., 1996). We used flow cytometry to sort single cells into individual wells of polypropylene 96-well round-bottom microtiter plates containing 150 µL YPD per well. During sorting, we applied forward and side scatter gates to select dispersed cells, as well as a FITC gate to select cells with functional mitochondria. From each mating, we sorted 384 single cells into four 96-well plates; because the possible diversity in each mating is about 10⁶ unique barcodes, the probability that two F1 segregants share the same barcode is extremely low.

The single cells were then allowed to grow unshaken for 48 hr at 30 °C, forming a single colony at the bottom of each well. The plates were then scanned on a flatbed scanner, and the scan images were used to identify blank wells. We observed 4,843 blanks among a total of 105,984 wells (sorting efficiency of ≥95%), resulting in a total of 101,141 F1 segregants in our panel. Plates were then used for archiving, genotyping, barcode association, and phenotyping as described below.
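As a consistency check on the counts above, the number of wells and the sorting efficiency follow directly from the 23 × 12 matings and four plates of 96 wells per mating. The helper below is illustrative only.

```python
def sort_summary(n_matings: int, plates_per_mating: int, blank_wells: int,
                 wells_per_plate: int = 96):
    """Total wells sorted, filled wells (segregants), and sorting efficiency."""
    wells = n_matings * plates_per_mating * wells_per_plate
    filled = wells - blank_wells
    return wells, filled, filled / wells


wells, segregants, efficiency = sort_summary(23 * 12, 4, 4843)
print(wells, segregants, f"{efficiency:.1%}")   # 105984 101141 95.4%
```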

We expect F1 segregants obtained using our approach to be prototrophic haploid MATa cells, with approximately half of their genome derived from the BY parent and half from the RM parent, except at marker loci on chromosomes III, IV, and V (see Figure 1—figure supplement 3 for allele frequencies).
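Why marker loci deviate from 50:50 can be seen with a small enumeration. The sketch assumes (our simplification) that the MAT locus (chromosome III), the HO landing pad (chromosome IV), and CAN1 (chromosome V) segregate independently and 1:1, and applies the SD –leu –ura –his + canavanine selection described above: His+ requires the pSTE2-SpHIS5 reporter, which replaced CAN1 only in the BY parent and is expressed only in MATa cells; canavanine resistance requires the BY can1 replacement; and Ura+ requires the recombined double-barcode chromosome. Leucine selection, linkage, and germination efficiency are not modeled, and the names are hypothetical.

```python
from fractions import Fraction
from itertools import product

# Simplified model: three marker loci segregating independently and 1:1.
LOCI = {
    "MAT": ("a", "alpha"),                   # chr III; BY parent is MATa
    "CAN1": ("BY", "RM"),                    # chr V; BY allele = can1::pSTE2-SpHIS5
    "HO": ("recombined", "reciprocal"),      # chr IV; barcode landing pad
}


def survives(spore: dict) -> bool:
    """Growth on SD -ura -his + canavanine (leucine selection not modeled)."""
    his_plus = spore["MAT"] == "a" and spore["CAN1"] == "BY"  # reporter on, MATa only
    canavanine_resistant = spore["CAN1"] == "BY"               # CAN1 replaced in BY only
    ura_plus = spore["HO"] == "recombined"                      # URA3 whole only here
    return his_plus and canavanine_resistant and ura_plus


spores = [dict(zip(LOCI, alleles)) for alleles in product(*LOCI.values())]
survivors = [s for s in spores if survives(s)]
print(Fraction(len(survivors), len(spores)), survivors)
# 1/8 [{'MAT': 'a', 'CAN1': 'BY', 'HO': 'recombined'}]
```

Every survivor carries the BY allele at MAT and CAN1, so in this idealized model BY allele frequency at and near those loci is driven toward 1 rather than 0.5.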

Reference parental strains

Although not strictly necessary for downstream analysis, it is often informative to phenotype the parental strains in the same assay as the F1 segregants. However, the parental strains described above (YAN695 and YAN696) do not contain the full recombined barcode locus and are thus incompatible with the bulk-phenotyping strategies described in Appendix 2. We therefore created reference strains whose barcode locus and selection markers are identical to those of the F1 segregants, while the rest of their genotype is identical to that of the parental strains.

The parental BY strain YAN696 required little modification: we simply transformed its landing pad with a known double barcode (barcode sequence ATTTGACCCAAAGCTT – GGCATGGCGCCGTACG). In contrast, the parental RM strain is of the opposite mating type to the F1 segregants and differs at the CAN1 locus. From the YAN501xYAN503 diploid, we obtained a MATa spore with a genotype otherwise identical to that of YAN515, producing YAN516. The CAN1 locus of this strain was replaced with the MATa mating-type reporter (pSTE2-SpHIS5) as described above, producing YAN684. Finally, we transformed the landing pad with a known double barcode (barcode sequence AGAAGAAGTCACCGTA – TACTACGTCTTATTTA). We refer to these strains as RMref and BYref in Appendix 2.
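Because the reference strains carry known barcodes, their reads can be flagged in barcode-sequencing data by direct lookup. The sketch below is a hypothetical illustration, not the barcode-association procedure used in this study: it matches an observed barcode pair to BYref or RMref while tolerating a small, assumed number of mismatches (summed Hamming distance across both barcodes).

```python
# Known double barcodes of the reference strains, as given in the text.
REFERENCE_BARCODES = {
    ("ATTTGACCCAAAGCTT", "GGCATGGCGCCGTACG"): "BYref",
    ("AGAAGAAGTCACCGTA", "TACTACGTCTTATTTA"): "RMref",
}


def hamming(a: str, b: str) -> int:
    """Number of mismatched positions between two equal-length sequences."""
    if len(a) != len(b):
        raise ValueError("barcodes must have equal length")
    return sum(x != y for x, y in zip(a, b))


def match_reference(bc1: str, bc2: str, max_mismatches: int = 1):
    """Name of the reference strain within `max_mismatches` (summed over both
    barcodes) of the observed pair, or None. The tolerance is an assumption."""
    for (ref1, ref2), name in REFERENCE_BARCODES.items():
        if hamming(bc1, ref1) + hamming(bc2, ref2) <= max_mismatches:
            return name
    return None


print(match_reference("ATTTGACCCAAAGCTA", "GGCATGGCGCCGTACG"))  # BYref (1 mismatch)
print(match_reference("A" * 16, "C" * 16))                      # None
```

The two reference pairs differ at most positions, so a one-mismatch tolerance cannot confuse them with each other.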

Whole-genome sequencing of all F1 segregants

The SNP density in the BYxRM cross is such that there is on average one SNP every few hundred base pairs (i.e. on the order of one SNP per sequencing read), making whole-genome sequencing an attractive genotyping strategy. However, preparing 100,000 sequencing libraries and sequencing 100,000 whole genomes to high coverage imposes obvious time and cost constraints. We overcome these challenges by creating a highly automated and multiplexed library preparation pipeline, and by using low-coverage sequencing in combination with genotype imputation methods. In this section we describe the general procedure for producing sequencing libraries, while the sections below describe how we automate the procedure in 96-well format using liquid-handling robots.
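To illustrate how low-coverage reads can still yield genotypes, the following is a minimal sketch of one standard approach: a two-state hidden Markov model along one chromosome of a haploid segregant, with hidden states BY and RM ancestry, emission probabilities set by an assumed per-read error rate, and switch probabilities from the Haldane map function. It is not the imputation pipeline used in this study; the recombination rate (`morgans_per_bp`, set to an assumed order-of-magnitude value of about 0.37 cM/kb) and the error rate are assumptions, and all names are hypothetical.

```python
import math


def ancestry_posterior(ref_counts, alt_counts, distances_bp,
                       morgans_per_bp=3.7e-6, error=0.01):
    """Posterior probability of BY ancestry at each SNP of one haploid chromosome.

    ref_counts, alt_counts: reads supporting the BY and RM allele at each SNP.
    distances_bp: spacing between consecutive SNPs (one fewer entry than SNPs).
    morgans_per_bp, error: assumed recombination rate and per-read error rate.
    """
    n = len(ref_counts)
    # Likelihood of the reads at each SNP under (BY, RM) ancestry.
    emit = [((1 - error) ** r * error ** a, error ** r * (1 - error) ** a)
            for r, a in zip(ref_counts, alt_counts)]
    # Haldane map function: chance of an ancestry switch between adjacent SNPs.
    switch = [0.5 * (1 - math.exp(-2 * morgans_per_bp * d)) for d in distances_bp]

    def normalise(pair):
        total = pair[0] + pair[1]
        return (pair[0] / total, pair[1] / total)

    # Forward pass (rescaled at each SNP to avoid underflow), uniform prior.
    fwd = [normalise((0.5 * emit[0][0], 0.5 * emit[0][1]))]
    for i in range(1, n):
        s = switch[i - 1]
        p_by, p_rm = fwd[-1]
        fwd.append(normalise((((1 - s) * p_by + s * p_rm) * emit[i][0],
                              (s * p_by + (1 - s) * p_rm) * emit[i][1])))

    # Backward pass.
    bwd = [(1.0, 1.0)] * n
    for i in range(n - 2, -1, -1):
        s = switch[i]
        b_by = emit[i + 1][0] * bwd[i + 1][0]
        b_rm = emit[i + 1][1] * bwd[i + 1][1]
        bwd[i] = normalise(((1 - s) * b_by + s * b_rm, s * b_by + (1 - s) * b_rm))

    # Posterior is proportional to forward x backward at each SNP.
    return [normalise((f[0] * b[0], f[1] * b[1]))[0] for f, b in zip(fwd, bwd)]


# 20 SNPs spaced 1 kb apart: BY reads at even SNPs 0-8, RM reads at even SNPs 10-18.
ref = [1, 0] * 5 + [0] * 10
alt = [0] * 10 + [1, 0] * 5
post = ancestry_posterior(ref, alt, [1_000] * 19)
print([round(p, 2) for p in post])
```

SNPs without any read still receive confident calls from their neighbours, which is the property that makes roughly one read per SNP-dense region sufficient; uncertainty concentrates near the inferred crossover between SNPs 8 and 10.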

Cell lysis and zymolyase purification

Typical tagmentase-based library preparation protocols start with purified genomic DNA of normalized concentration (Baym et al., 2015). However, we found that the transposition reaction is efficient in crude yeast lysate, provided that the yeast cells are lysed appropriately. This approach substantially reduces costs and increases the throughput of library preparation.